Biden Administration Pushes for Crypto Oversight Amid Hamas Worries

The Deputy Treasury Secretary urges the crypto industry to cease its involvement in facilitating terrorist financing.

Jixu


Source: Billy Perrigo, Time Magazine; Translation: Jinse Finance xiaozou


Sam Altman is once again showing the world the future. Standing on a nondescript stage in San Francisco, the OpenAI CEO is about to unveil his next move to a rapt audience. "In the era of artificial general intelligence, we need some way to identify and verify humans," Altman explained. "We want to make sure that humans remain unique and central."

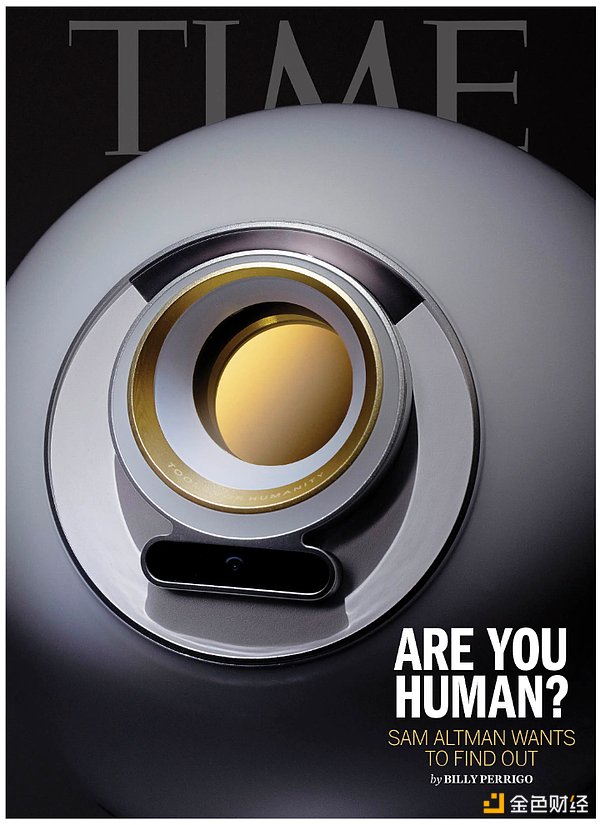

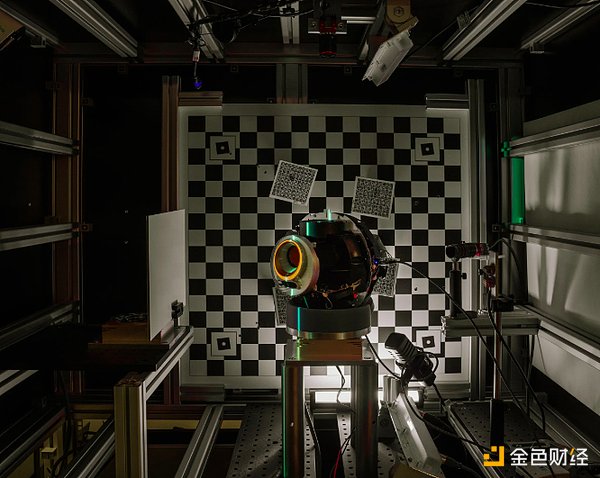

The solution looms behind him: a white sphere the size of a beach ball with a camera embedded in its center. Its maker, Tools for Humanity, calls the device the "Orb." When you gaze into the center of the plastic-and-silicone sphere, it scans the unique grooves and patterns of your iris. Seconds later, an app on your phone receives an unalterable proof of your humanity: a 12,800-bit binary code derived from your iris. At the same time, about $42 worth of Worldcoin cryptocurrency is transferred to your digital wallet, the reward for becoming a "verified human."

Altman co-founded Tools for Humanity in 2019 as part of a constellation of companies he hopes will reshape the world. He predicts that once the technology OpenAI is developing crosses a certain intelligence threshold, the old era of the Internet will end and a new one will begin, one in which AI is so realistic that it becomes impossible to tell whether online content comes from real people. Altman foresees that we will then need a new kind of network infrastructure: a human-verification layer for the Internet that distinguishes real people from the proliferating bots and AI "agents."

So Tools for Humanity set out to build a global "proof of human" network. The project aims to verify 50 million people by the end of 2025, with the ultimate goal of registering every person on the planet. The free cryptocurrency is both an inducement to sign up and a gateway to what the company hopes will become the world's largest financial network, through which it predicts "double-digit percentages of the global economy" will flow. Even by Altman's standards, these goals are bold. "If successful, this will become a fundamental part of the world's infrastructure," Altman told TIME in a video interview from his car a few days before his April 30 keynote.

The project is meant to solve a problem that Altman helped create. He and other tech leaders predict that advanced AI will soon be autonomous: not just responding to human commands, but taking independent actions in the real world. That could mean AI colleagues who join a team and start solving problems immediately, AI tutors who adapt their teaching to each student's preferences, and even AI doctors who diagnose routine cases and handle their own scheduling. The venture capitalists backing these efforts predict that such virtual agents will dramatically raise productivity and usher in a new era of material abundance.

But AI agents will also have knock-on effects on the human experience of the internet. "As AI systems become increasingly indistinguishable from humans, websites may face difficult trade-offs," warns a recent paper by researchers from 25 universities, nonprofits, and technology companies, including OpenAI. "Digital institutions are likely to be ill-prepared for situations in which AI agents (including those operated by malicious actors) overwhelm other online activity." On social platforms such as X and Facebook, AI-generated content posted by bot accounts has drawn billions of views. In April, the Wikimedia Foundation, which operates Wikipedia, disclosed that AI bots scraping the site were driving the encyclopedia's operating costs to unsustainable levels. Later that month, researchers at the University of Zurich found that AI-generated comments on the Reddit forum r/ChangeMyView were up to six times more successful than human comments at persuading users to change their positions.

The rise of AI agents threatens not only our ability to distinguish between real and AI content online, but also the internet’s core business model: online advertising, which is built on the assumption that the audience is human. “The internet is going to change dramatically in the next 12 to 24 months,” said Alex Blania, CEO of Tools for Humanity. “We have to succeed or we’re going to have unpredictable consequences.”

For four years, Blania's team has been testing the Orb hardware abroad. Now the U.S. launch is officially underway: over the next 12 months, 7,500 Orbs will be deployed in gas stations, convenience stores, and flagship stores in dozens of U.S. cities, including Los Angeles, Austin, and Miami. The project's founders and backers hope this U.S. debut will kick off a new phase of growth, as the title of the San Francisco keynote, "It's Finally Here," suggests.

But public enthusiasm has not matched the company's lofty vision. Tools for Humanity has "verified" only 12 million humans since mid-2023, a pace Blania admits is far behind schedule. Few online platforms currently accept the "World ID" that the Orb issues, and beyond the free cryptocurrency there is little to persuade users to hand over their biometric data. Even Altman is unsure of the project's prospects: "Maybe this will become mainstream in a few years, or maybe it will only ever be used by a niche group of people with a certain mindset."

Still, as AI sweeps across the Internet, the creators of this strange hardware are betting that people around the world will soon come to the Orb, willingly or not. They predict that the biometric code it generates will become a new kind of digital passport, without which you could be locked out of the Internet of the future, from dating apps to government services. In the best-case scenario, World ID could be a privacy-preserving tool that helps the internet fend off a flood of AI-driven misinformation, or even a way to deliver the kind of universal basic income Altman once championed as AI automation reshapes the global economy. To explore the implications of this technology, I reported across three continents, interviewed 10 Tools for Humanity executives and investors, reviewed hundreds of pages of company documents, and completed my own "human verification."

"The internet will inevitably need some kind of human verification system in the near future," said Divya Siddarth, founder of the nonprofit Collective Intelligence Project. The key question, she said, is whether such a system will be centralized ("a major security risk that facilitates mass surveillance") or as private and secure as the Orb claims to be. Questions remain about Tools for Humanity's corporate structure, its ties to volatile cryptocurrencies, and how much power would be concentrated in the hands of its owners if it succeeds. But it is one of the few attempts to address what is widely seen as an increasingly urgent problem. "World ID does have some issues," Siddarth said, "but the internet can't stay the same forever. It's necessary to try to move in this direction."

I met Blania at Tools for Humanity's San Francisco headquarters in March, where a large screen displayed, in real time, the number of weekly Orb verifications in each country. A few days earlier, the CEO had attended a million-dollar dinner at Mar-a-Lago and met with President Trump. He credits the Trump administration's relaxation of cryptocurrency regulation with clearing the way for the company to enter the U.S. market. "Given that Sam (Altman) was a high-profile target," Blania explained, "we decided to let other companies fight the regulatory battles first and enter the U.S. market once the situation was clear."

Growing up in Germany, Blania stood out. "Other kids were into drinking or partying, and I was always tinkering with things that might explode," he recalled. While studying for a master's degree at Caltech, he often stayed up all night reading the blogs of startup gurus such as Paul Graham and Altman. In 2019, Blania received an email from entrepreneur Max Novendstern, who had been discussing with Altman the idea of building a global cryptocurrency network; the two were looking for technical talent to push the project forward.

Over cappuccinos, Altman laid out for Blania three things he was sure of. First, AI that surpasses human intelligence is not only possible but inevitable, and it will soon make it impossible to assume that anything you see on the Internet was made by human hands. Second, cryptocurrency and other decentralized technologies will become a huge force for changing the world. Third, large-scale adoption is crucial to the value of any crypto network.

The project, originally called Worldcoin, aimed to combine these three insights. Altman took a page from PayPal, the company co-founded by his mentor Peter Thiel. PayPal spent less than $10 million of its early funding developing its app but poured about $70 million into a referral program that gave both new users and the people who referred them a $10 credit. The referral program helped make PayPal a leading payments platform, and Altman thought a similar strategy could propel Worldcoin to similar heights. He planned to create a new cryptocurrency that people would receive as a reward for signing up. In theory, the more people joined the system, the more valuable the token would become.

Since 2019, the project has raised $244 million from investors including Coinbase and the venture capital firm Andreessen Horowitz. That money covered the $50 million cost of designing the Orb and the software needed to keep it running. The total market value of all Worldcoin in existence, however, is much higher: about $1.2 billion. That figure is somewhat misleading, because most of the tokens are not yet in circulation and Worldcoin's price fluctuates wildly. Still, it allows the company to reward sign-ups at zero cost. For investors, the main attraction is the cryptocurrency's potential to appreciate in value. About 75% of all Worldcoin is reserved for users to claim when they sign up or as referral rewards, while the remaining 25% is allocated to Tools for Humanity's backers and employees (including Blania and Altman). "I'm looking forward to making a lot of money," Blania said.

From the beginning, Altman was thinking about the possible consequences of the AI revolution he was setting in motion. (On May 21, he announced plans to work with former Apple designer Jony Ive on new AI personal devices.) He reasoned that once advanced AI could complete most tasks more efficiently than humans, unemployment and economic dislocation would follow, and some form of wealth redistribution might become imperative. In 2016, he partly funded a basic income study that distributed $1,000 per month to low-income participants in Illinois and Texas. But at the time there was no global financial system capable of paying everyone, no way to stop individuals from claiming more than one share, and still less any way to detect advanced AIs posing as humans to steal funds. In 2023, Tools for Humanity proposed using its network to redistribute profits from AI labs that automate human labor. "As AI grows," the company stated, "equitable distribution of access, and of a portion of the value it creates through a universal basic income, will play an increasingly critical role in curbing the concentration of economic power."

Blania was moved by the idea and agreed to join the project as a co-founder. "Most people thought we were either stupid or crazy, including Silicon Valley investors," Blania recalls. That view did not change until ChatGPT launched in 2022, turning OpenAI into one of the most famous technology companies in the world and setting off a market frenzy. "Suddenly the outside world began to understand our vision," Blania said of the effort to build a global "proof of human" network. "You have to imagine a world where highly intelligent systems roam the Internet, each with different goals and intentions, and humans have no idea what they are dealing with."

After the interview, the company's head of communications led me to a circular wooden installation in which eight Orbs faced one another; the effect was something between an Apple Store and a religious altar. "Would you like to try it out?" she asked. For the sake of reporting, I set aside my concerns, downloaded the World App, and followed the instructions: I showed a QR code to the Orb and stared into the center of the device. About a minute later, my phone vibrated to confirm that I had received my own World ID and some Worldcoin.

As I stared into the Orb, several complex processes ran at once. A neural network used multiple sensors, including an infrared camera and a thermometer, to confirm that I was a live human, while a telephoto lens focused on my iris, capturing the biological features that distinguish me from every other person on the planet. The device then converted the image into an iris code: an abstract set of numbers representing my unique biological features. Next, the Orb used encrypted comparison techniques, which do not expose the original data, to check whether the code duplicated any existing record. Before deleting the data, the system converted my iris code into several derivative codes (none of which can be reversed to recover the original), encrypted them, destroyed the single decryption key, and distributed them across different secure servers, so that future users' iris codes can be checked against mine for uniqueness. If I use World ID to log in to a website, the site can confirm only that I am human and learns nothing else about me. Because the Orb's code is open source, outside experts can review it to verify the company's privacy claims. "I did a full colonoscopy of this technology before joining," said Trevor Traina, a Trump donor and former U.S. ambassador to Austria who is now Tools for Humanity's chief business officer. "This is the most privacy-protecting technology on the planet."
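The idea of splitting a code into pieces held by separate servers, then checking new codes for duplicates without reassembling the stored ones, can be illustrated with a short Python sketch. It is a minimal illustration under stated assumptions, not Tools for Humanity's published protocol: it assumes XOR secret sharing across three hypothetical servers, and it assumes fractional Hamming distance with a hypothetical 0.32 cutoff as the test for "same iris." Only the 12,800-bit code length comes from the article. It also simplifies one step: the servers here combine their local results into the full bit-difference vector, whereas a production multiparty-computation protocol would aim to reveal only the final match decision.

```python
import random
import secrets

CODE_BITS = 12_800      # iris-code length quoted in the article
MATCH_THRESHOLD = 0.32  # ASSUMPTION: hypothetical fractional Hamming-distance cutoff
NUM_SERVERS = 3         # ASSUMPTION: hypothetical number of independent share holders


def split_into_shares(code: int, n: int = NUM_SERVERS) -> list[int]:
    """XOR-share a CODE_BITS-bit code: n - 1 random masks plus one share
    chosen so that XOR-ing all n shares together gives back the code."""
    shares = [secrets.randbits(CODE_BITS) for _ in range(n - 1)]
    last = code
    for mask in shares:
        last ^= mask
    shares.append(last)
    return shares


def fractional_hamming(shares_a: list[int], shares_b: list[int]) -> float:
    """Fraction of bits on which two secret-shared codes differ.

    Each server XORs its own pair of shares locally; XOR-ing those partial
    results yields code_a ^ code_b without reconstructing either code.
    (Simplification: a real MPC protocol would not reveal this difference.)
    """
    diff = 0
    for share_a, share_b in zip(shares_a, shares_b):
        diff ^= share_a ^ share_b
    return bin(diff).count("1") / CODE_BITS


class ShareStore:
    """Hypothetical registry that keeps only shares, never a whole code."""

    def __init__(self) -> None:
        self.records: list[list[int]] = []

    def enroll(self, code: int) -> bool:
        """Store the code's shares unless it is too close to an existing one."""
        new_shares = split_into_shares(code)
        for existing in self.records:
            if fractional_hamming(new_shares, existing) < MATCH_THRESHOLD:
                return False  # treated as the same iris: one person, one ID
        self.records.append(new_shares)
        return True


# Usage: a re-scan of the same eye differs in a few bits, a stranger in about half.
store = ShareStore()
alice = secrets.randbits(CODE_BITS)
noise = 0
for bit in random.sample(range(CODE_BITS), 640):  # flip 5% of the bits
    noise |= 1 << bit

print(store.enroll(alice))                        # True: first enrollment
print(store.enroll(alice ^ noise))                # False: re-scan flagged as duplicate
print(store.enroll(secrets.randbits(CODE_BITS)))  # True: a different person
```

Note that `enroll` never consults the phone's copy of the code: discarding `alice` locally would leave its shares in `records`, so a later re-scan would still be rejected.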

Just a few weeks later, while researching how data deletion works, I found that the company's privacy promises rest partly on sleight of hand. Tools for Humanity claims that the derivative iris codes are "virtually anonymized" biometric data. If you request deletion, the company erases only the original code stored on your phone and keeps the derivative codes, which it argues no longer count as personal data. But if I delete my data and use an Orb again, the system can still uniquely identify me through those derivative codes. Once you gaze into the Orb, fragments of your identity stay in the system forever.

When I called Damien Kieran, Tools for Humanity's chief privacy officer, for clarification, he acknowledged that if users could completely delete their data, the core premise of "one person, one ID" would collapse. A banned user could delete their data and re-register for a new World ID, or someone could collect Worldcoin, sell it, delete their data, and then sign up again to cash out a second time.

That argument did not persuade the company's lead EU data protection regulator in Germany, which recently ruled that the Orb raised "fundamental data protection issues" and required the company to let European users completely delete all of their information, including the anonymized data. (Tools for Humanity has appealed, and the regulator is reevaluating its decision.) "As with other technology services, users cannot delete non-personal data," Kieran said in a statement. "Allowing the deletion of this anonymous data, which neither World nor any third party can link to an individual, would let criminals bypass the safeguards World ID has built for all of humanity."

One warm afternoon this spring, I climbed the stairs to a room above a restaurant on the outskirts of Seoul. Five elderly Koreans were tapping on their phones, waiting to be "verified" by two Orbs in the center of the room. "We really can't tell AI from humans now," a staff member in a company T-shirt explained in Korean, pointing to the spheres. "We need a way to prove that we are humans and not AI. How can we do it? Humans have irises, but AI doesn't."

The staff member guided an elderly woman to the Orb. After the device beeped, a female voice said in English: "Open your eyes." The elderly woman stared at the camera, and a few seconds later checked her phone and found that 75,000 won (about $54) worth of Worldcoin had been deposited into her digital wallet. The app popped up a congratulatory message: You have become a verified human.

Since 2023, Tools for Humanity has deployed more than two dozen Orbs in South Korea and verified about 55,000 people. Now the company is stepping up its push there. At a launch event held in a traditional Korean house in downtown Seoul, executives announced that 250 Orbs would soon be deployed nationwide, with the goal of verifying 1 million Koreans over the next 12 months. South Korea's high smartphone penetration, widespread use of cryptocurrency and AI, excellent network coverage, and income levels at which free Worldcoin is still an attraction make it an ideal testing ground for the company's global expansion. But the rollout seems to have gotten off to a poor start. At a retail store in downtown Seoul, where Tools for Humanity has set up eight wooden Orb installations facing one another, most passing locals and tourists looked puzzled, and few stopped to try them. Most of those who did were cryptocurrency enthusiasts who had come specifically for the experience rather than the free tokens.

The next day, I went to a cafe in downtown Seoul where a silver Orb sat quietly in a corner. A 20-year-old Chinese student was chatting with the barista, who doubled as the Orb operator. A friend had invited him, saying they would both receive free cryptocurrency once he registered. The barista quickly walked him through the process: he agreed to the terms without reading the privacy policy, opened his eyes wide at the Orb, and was verified within moments. "No one explained the privacy policy," he said on his way out. "I just came for the money."

As Altman's car made its way through the streets of San Francisco, I brought up the argument he had made in 2019: that AI would erode trust online. To my surprise, he distanced himself from that framing. "I'm more interested in what value we can create than what harm we can prevent," he said. "It's not about 'preventing bots from spreading'; it's about creating unique value for humans."

His answer may reflect his changing role. As the public face of a company valued at $300 billion, Altman now spends much of his time touting the transformative applications of AI agents. He and other supporters believe the rise of agents will improve quality of life, like having an assistant on hand to answer urgent questions, handle chores, and help you master new skills. That optimistic vision may well come true, but it sits uneasily with the "AI-induced information disaster" predicted when Tools for Humanity was founded.

Altman was unconcerned about the influence he and his investors might wield if the vision came true. He predicted that most holders would sell their tokens too early. "What would be bad is if the early team controlled the protocol," he said. "That's the valuable promise of decentralization." Altman was referring to the World Protocol, the underlying technology on which the Orb, Worldcoin, and World ID all rely. Although Tools for Humanity developed it, the company promises to gradually hand control over to users, a process it says will prevent power from being concentrated in the hands of a few executives or investors. Tools for Humanity will remain a for-profit company and can charge fees to platforms that use World ID, but other companies could compete for users by building alternative apps or even rival Orbs.

The plan draws on ideas central to the crypto world of the late 2010s and early 2020s, when evangelists for emerging blockchain technology argued that concentrated power (especially the dominance of the so-called "Web 2.0" tech giants) lay at the root of many of the modern internet's ills. Just as decentralized cryptocurrencies could reform a financial system controlled by economic elites, the argument went, so too could decentralized organizations run by their members rather than by CEOs reform the internet. But how such a system would actually work remains unclear. "Building a community-governed system," Tools for Humanity acknowledged in its 2023 white paper, "may be the most challenging part of the entire project."

Altman has a pattern of making idealistic promises first and then gradually revising them. In 2015, he co-founded OpenAI as a nonprofit whose mission was to safely develop artificial general intelligence that benefits all of humanity. To raise money, OpenAI created a for-profit arm in 2019, though control remained with the nonprofit board. Last year, Altman proposed another restructuring that would weaken the board's control and allow more profits to flow to shareholders. When I asked why the public should trust Tools for Humanity to give up power voluntarily, he replied: "The protocol will continue to decentralize, and the value will exist in the network, which will be owned and governed by the public."

Recently, Altman has talked less about universal basic income and more about an alternative he calls "universal basic compute." He seems to suggest that rather than having AI companies distribute their profits, it would be better to give people everywhere fair access to the most powerful AI. Blania said he has decided to "stop discussing universal basic income" within Tools for Humanity. "Universal basic income is just one potential solution," he said. "Giving people access to the latest AI models to accelerate their learning is another path." Altman conceded: "I'm still not sure about the right answer. But the existing mechanisms for allocating resources must be improved."

When I pressed him on why the public should trust him, Altman looked annoyed. "I understand that you dislike AI, and that's okay," he said. "If you insist on emphasizing the downsides of AI (for example, that there will be a large number of realistic AI systems posing as humans, and we need a way to tell the real from the fake), then you can indeed call that a downside of AI. But that's not what I want to emphasize."

That framing hints at a tension between World ID and OpenAI's plans for AI agents. If most online services eventually require a World ID, that could hamper the AI agents being built by companies such as OpenAI. For this reason, Tools for Humanity is building a delegation system. According to chief product officer Tiago Sada, users will be able to delegate their World ID to agents, allowing those agents to act online on their behalf. "The entire system we designed can be easily delegated to agents," Sada said. Although this keeps a human accountable for an agent's behavior, it suggests that Tools for Humanity's mission may be shifting from simply "verifying humans" to becoming infrastructure that lets AI agents spread under human authorization. World ID cannot tell whether a piece of content was generated by AI or by a human; it can only show attributes of the account that posted it. Even if everyone had a World ID, the internet could still be flooded with AI-generated images, text, and video.

When I said goodbye to Altman, I felt conflicted about the project. If AI agents are going to reshape the internet, some kind of human verification system does seem necessary. But if the Orb becomes core internet infrastructure, it would put Altman, who has a vested interest in the proliferation of AI content, in control of the main defense against that proliferation. People might have to join the network just to use social media or other online services.

It reminded me of a scene I had witnessed in Seoul. In the room above the restaurant, 75-year-old Zhao Zhengyan (name transliterated) watched her friends complete their Orb verifications. Although she was invited to take part, she declined: the reward was not enough to persuade her to hand over part of her identity. "The iris is unique, and we have no idea how it will be used," she said. "Seeing that machine made me wonder: are we now becoming machines instead of humans? Everything is changing, and we don't know where it will end up."
