Author: DeepSafe Research

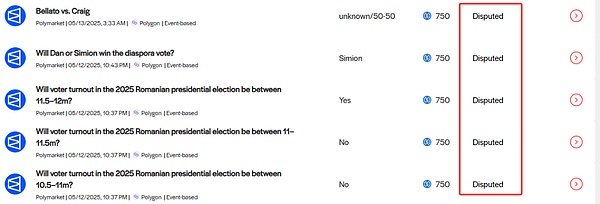

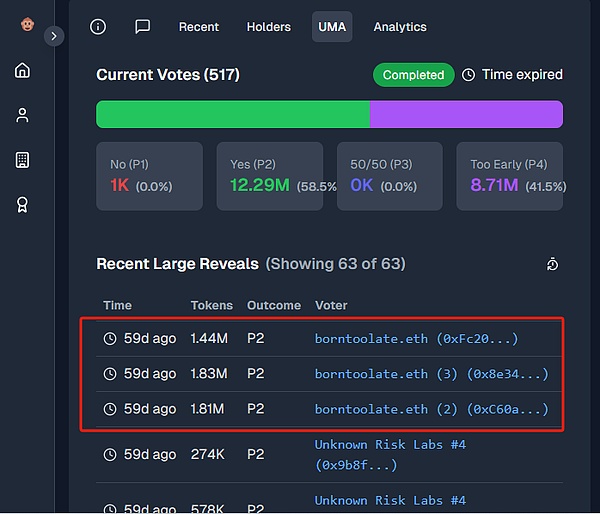

On March 25, 2025, news that PolyMarket had been manipulated through its oracle attracted widespread attention. The platform's market on whether Ukraine would sign a mineral agreement with Trump before April had drawn about $7 million in bets. As of March 25, the agreement had not been signed. However, a whale manipulated the UMA oracle that PolyMarket relies on, causing the contract to resolve that the agreement had been signed and inflicting losses on many bettors. As public anger grew, the incident was widely reported and hotly debated. It is a textbook case of an oracle manipulation attack. Because the UMA oracle lets token holders vote on the outcomes of real-world events, the oracle rules that an event has occurred if more tokens vote "Yes". On March 25, a whale holding a huge amount of UMA cast about 5 million UMA tokens through multiple addresses to force the "Yes" result through, effectively turning black into white.

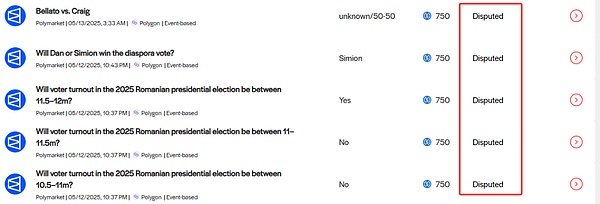

It is worth noting that this is not the first oracle manipulation case on PolyMarket. The platform previously misjudged the incumbent president of Venezuela as Edmundo González, and misjudged that the Ethereum ETF would be approved before May 31, 2024. The Ukrainian mineral agreement market, however, was the most consequential.

After the mineral agreement misresolution, affected users voiced strong dissatisfaction. PolyMarket responded on Discord that the incident was not caused by a technical failure of its own system. It therefore would not refund affected users, and it only promised to work with the UMA team to improve the mechanism design and strengthen risk controls. Many saw this response as inaction, and it also cast doubt on UMA's reliability.

Chainlink and similar oracles deliver quantitative data. UMA is different: it is mainly used to report the outcomes of arbitrary off-chain events. Many of these events cannot be quantified and can only be judged qualitatively, such as whether the mineral agreement mentioned above has been signed. Resolving such complex events requires reliable information sources. It also requires the ability to understand and reason about their content, which is clearly beyond traditional oracles. UMA resolves complex events directly through human judgment. This simple, blunt method works, but it leaves ample room for abuse.

Currently, Story Protocol, PolyMarket, Across, Snapshot, Sherlock and other major projects rely on UMA oracles as an important data source. If UMA is attacked again, the losses could be considerable. So if we want to solve UMA's problem by technical means, how should we go about it?

Some argue that UMA's penalties for malicious voters are too light and that heavier penalties would deter malicious voting. But this idea hands power to a so-called "punisher". The punishment process may end up more centralized than the token voting discussed above, which amounts to driving away the wolf only to let in the tiger.

In the real world, checks and balances between local and central governments are far from satisfactory, and so are mechanisms for punishing wrongdoing. In Web3, corruption and backroom deals are everywhere, so we cannot place high hopes on "rule by man". Relying on rule by man to fix the problems of rule by man is like trying to lift oneself up by one's own bootstraps. Only sound technical means can offer a real way out.

In this article, DeepSafe Research analyzes the contract code of the UMA oracle and identifies its problems, drawing on its documentation. We also sketch a solution that combines AI Agents with DeepSafe's existing technology, attempting to use large language models to judge complex real-world events.

We believe that combining the optimistic/challenge model with the capabilities of AI Agents makes it entirely possible to build a new generation of intelligent oracles. Such a facility would be able to verify and relay any off-chain message. If it reaches production, it could enable efficient interaction between smart contracts and the off-chain world, opening up unprecedented scenarios and broad markets.

UMA optimistic oracle workflow and code analysis

To better understand how an intelligent oracle could be built, let us first take an in-depth look at the UMA oracle. UMA stands for Universal Market Access. It provides dApps with data on arbitrary events through an oracle service called the "Optimistic Oracle". The basic principle is as follows:

Suppose you want UMA to determine whether Trump is elected president. You post a question (request) and offer a reward. An "asserter/proposer" and a "disputer" then contest the answer.

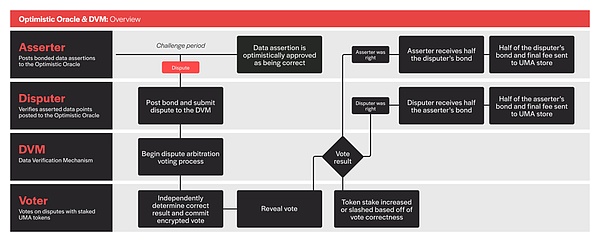

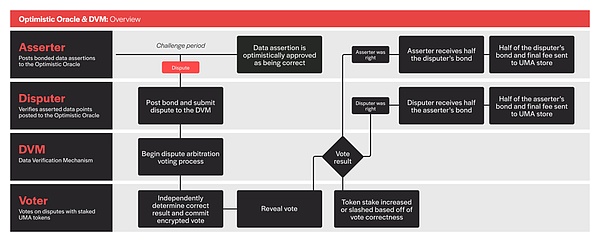

UMA has an arbitration module called the DVM (Data Verification Mechanism). After the asserter/proposer publishes an answer, a disputer can challenge it. The matter then goes to a vote in which UMA token holders decide who is right. If the vote finds that the asserter acted dishonestly, the system penalizes the asserter and rewards the disputer, and vice versa. Voters who vote correctly are also rewarded.

(Schematic diagram of the workflow of UMA Optimistic Oracle V3)

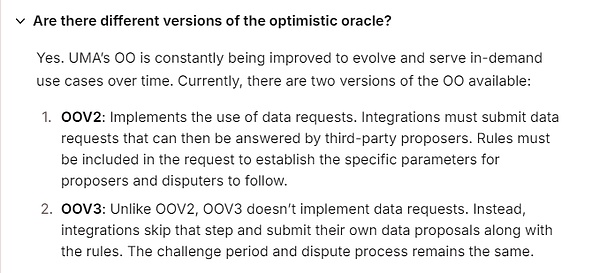

Under this model, the oracle optimistically assumes that the asserter/proposer is honest and penalizes them only if they prove dishonest, hence the name "optimistic oracle". The figure above shows the workflow of Optimistic Oracle V3, the version most often described in online materials.

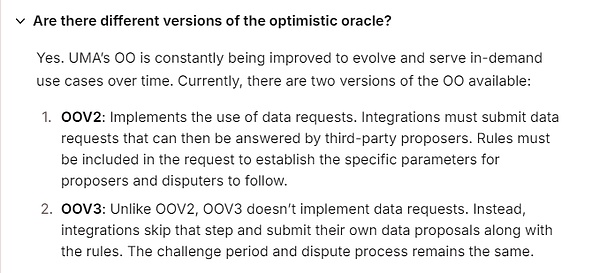

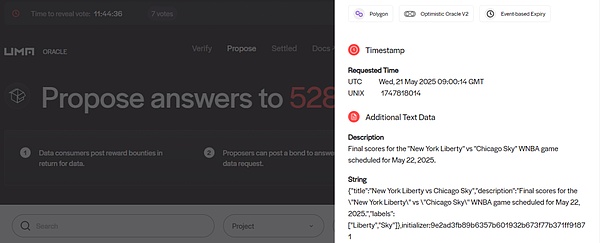

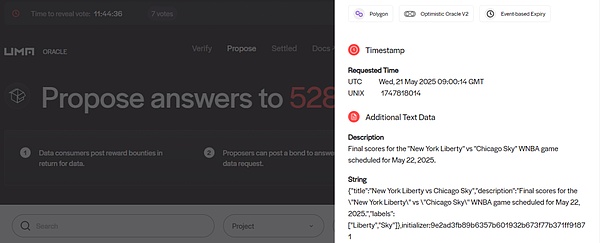

PolyMarket currently uses the earlier Optimistic Oracle V2 (OOV2). OOV2 and OOV3 share the same principle, but they differ in many implementation details. Since PolyMarket uses OOV2, the analysis below focuses mainly on V2.

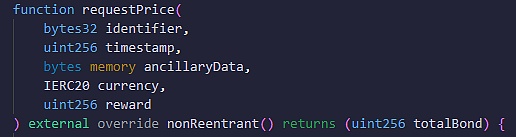

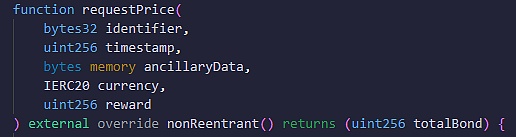

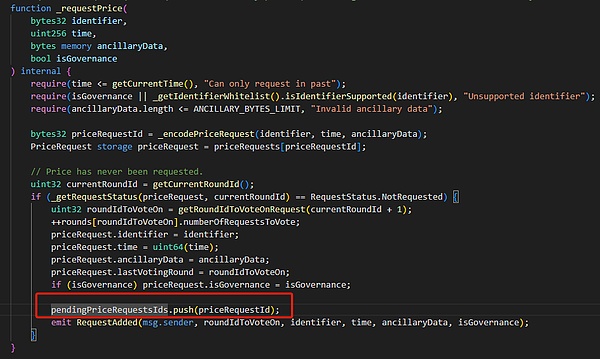

In OOV2, a user who wants the oracle to answer a question must first call the requestPrice() function with the relevant parameters. Once the question is published, a third-party proposer submits an answer, which starts the challenge period. If the challenge period ends without a dispute, the proposer's answer is treated as correct.

In V3, the party that publishes the question also acts as the proposer. It submits the question and the answer at the same time (a "self-questioning and self-answering" approach), so the request goes straight into the challenge period.
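The request lifecycle described above can be sketched as a small state machine. This is a simplified Python model written for illustration, not UMA's contract code. The state names are loosely modeled on OOV2's request states. Treating V3 as a request that starts directly in the proposed state is our own simplification.

```python
from enum import Enum, auto
from typing import Iterable


class State(Enum):
    """Request states in a simplified model loosely based on OOV2."""
    REQUESTED = auto()  # question posted, waiting for a proposer
    PROPOSED = auto()   # answer submitted, challenge period running
    EXPIRED = auto()    # challenge period ended with no dispute
    DISPUTED = auto()   # answer challenged, escalated to the DVM vote
    RESOLVED = auto()   # DVM vote produced an answer
    SETTLED = auto()    # settlement called, answer final


# Transitions allowed in this simplified model.
TRANSITIONS = {
    State.REQUESTED: {State.PROPOSED},
    State.PROPOSED: {State.EXPIRED, State.DISPUTED},
    State.EXPIRED: {State.SETTLED},
    State.DISPUTED: {State.RESOLVED},
    State.RESOLVED: {State.SETTLED},
    State.SETTLED: set(),
}


def run(path: Iterable[State], start: State = State.REQUESTED) -> State:
    """Walk a request through `path`, rejecting transitions the model forbids."""
    state = start
    for nxt in path:
        if nxt not in TRANSITIONS[state]:
            raise ValueError(f"illegal transition {state.name} -> {nxt.name}")
        state = nxt
    return state


# OOV2 without a dispute: propose, let the challenge period lapse, settle.
print(run([State.PROPOSED, State.EXPIRED, State.SETTLED]).name)  # SETTLED
# V3-style flow: question and answer arrive together, so start at PROPOSED.
print(run([State.EXPIRED, State.SETTLED], start=State.PROPOSED).name)  # SETTLED
```

The only branch is at PROPOSED. Either the challenge period lapses, or a dispute sends the request to the DVM.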

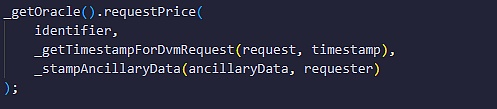

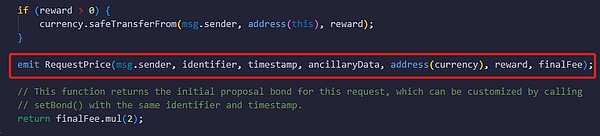

As mentioned earlier, in OOV2 users must first call the requestPrice() function to supply the question's information. The most important parameters are identifier and ancillaryData.

identifier declares the type of question. Binary questions typically use "YES_OR_NO_QUERY" as the identifier, while quantitative data questions use other identifiers. ancillaryData describes the question in natural language, for example "Will Trump be elected as the US president in 2024?"

The requester must also specify a timestamp for the request, the reward token, the reward amount, and so on. All of these parameters are submitted to the oracle contract together with identifier and ancillaryData.

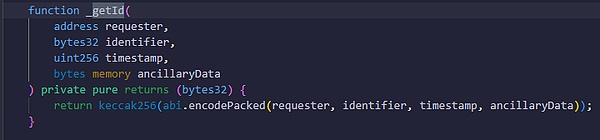

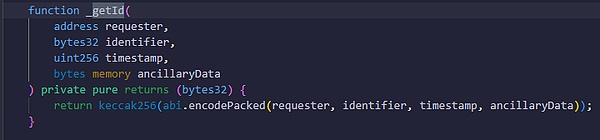

On receiving this data, the OOV2 contract calls the _getId() function. This function hashes four parameters (requester address, identifier, ancillary data, and timestamp) into a question ID, which is recorded on-chain.
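Here is a minimal sketch of how a question ID can be derived from the four request fields. It rests on a few assumptions:

* The fields are tightly packed in the order requester, identifier, timestamp, ancillaryData, in the style of Solidity's abi.encodePacked.
* Python's hashlib.sha3_256 stands in for keccak256. The standard library does not provide keccak256, and SHA3-256 pads differently, so these IDs will not match real on-chain IDs.
* The address and timestamp are hypothetical.

```python
import hashlib
from dataclasses import dataclass


def to_bytes32(text: str) -> bytes:
    """Left-align an ASCII identifier in 32 bytes, as a Solidity bytes32 literal is."""
    raw = text.encode("ascii")
    if len(raw) > 32:
        raise ValueError("identifier longer than 32 bytes")
    return raw.ljust(32, b"\x00")


@dataclass(frozen=True)
class PriceRequest:
    requester: bytes       # 20-byte address of whoever called requestPrice()
    identifier: bytes      # bytes32 question type, e.g. YES_OR_NO_QUERY
    ancillary_data: bytes  # natural-language description of the question
    timestamp: int         # request timestamp (uint256)


def get_id(req: PriceRequest) -> bytes:
    """Hash the four request fields into a 32-byte question ID."""
    if len(req.requester) != 20 or len(req.identifier) != 32:
        raise ValueError("requester must be 20 bytes and identifier 32 bytes")
    packed = (
        req.requester
        + req.identifier
        + req.timestamp.to_bytes(32, "big")  # uint256, big-endian
        + req.ancillary_data                 # variable-length, appended last
    )
    # Stand-in hash: SHA3-256 is not keccak256, so IDs differ from on-chain IDs.
    return hashlib.sha3_256(packed).digest()


req = PriceRequest(
    requester=bytes.fromhex("11" * 20),  # hypothetical address
    identifier=to_bytes32("YES_OR_NO_QUERY"),
    ancillary_data=b"Will Trump be elected as the US president in 2024?",
    timestamp=1_700_000_000,             # hypothetical timestamp
)
print(get_id(req).hex())
```

The ID covers every field. The same question posted by a different requester, or at a different timestamp, therefore gets a different ID.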

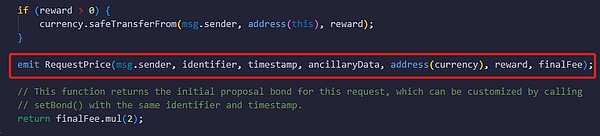

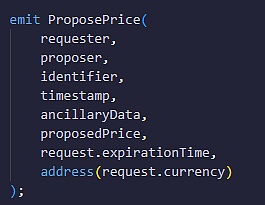

The contract then emits an event named RequestPrice so that off-chain listeners know a new question has been posted. UMA also provides an official front-end UI that displays these questions awaiting answers.

Proposer submits the answer

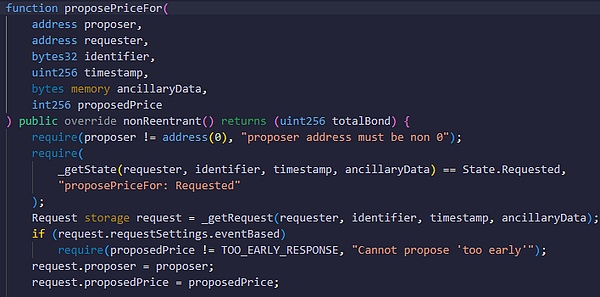

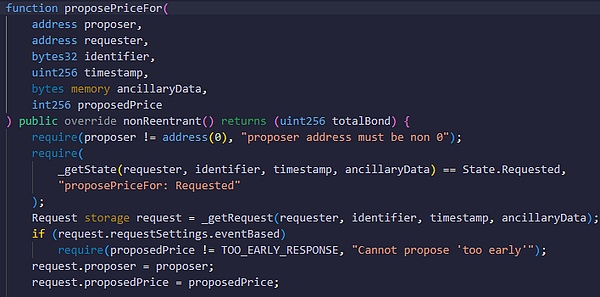

Once a question has been posted, anyone can call the proposePriceFor() or proposePrice() function to answer it. The answer is stored on-chain under the corresponding question ID. Note that the proposer who publishes an answer must post a bond. Each question accepts only the first proposer's answer, after which it immediately switches to the Proposed state. Answers from slower proposers are rejected.
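The first-proposer-wins rule and the bond can be sketched as follows. The Request class, the bond amount, and the YES/NO encoding are hypothetical. Where this sketch returns False, the real contract would reject the call.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

YES, NO = 1, 0  # hypothetical encoding of a binary answer


@dataclass
class Request:
    required_bond: int
    state: str = "Requested"
    proposer: Optional[str] = None
    proposed_price: Optional[int] = None
    bonds: Dict[str, int] = field(default_factory=dict)


def propose_price(req: Request, proposer: str, price: int, bond: int) -> bool:
    """Record the first proposal only; later proposers are rejected."""
    if req.state != "Requested":
        return False
    if bond < req.required_bond:
        raise ValueError("bond below the required amount")
    req.proposer = proposer
    req.proposed_price = price
    req.bonds[proposer] = bond  # locked until the request settles
    req.state = "Proposed"      # challenge period starts now
    return True


req = Request(required_bond=500)
print(propose_price(req, "alice", YES, bond=500))  # True: first proposer wins
print(propose_price(req, "bob", NO, bond=500))     # False: already Proposed
```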

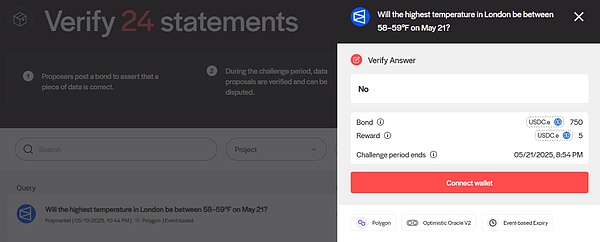

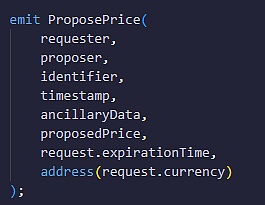

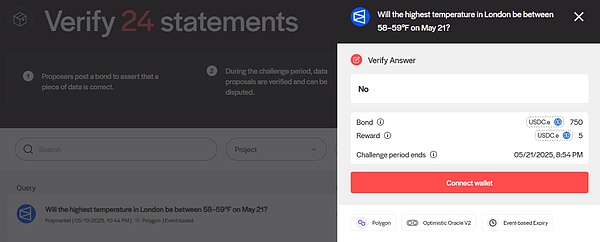

The OOV2 contract then emits an on-chain event named ProposePrice to notify the outside world that a proposer has answered a question. The official UMA UI moves the question to its "Verify" section. There it publishes the question's details and invites community members to check the answer.

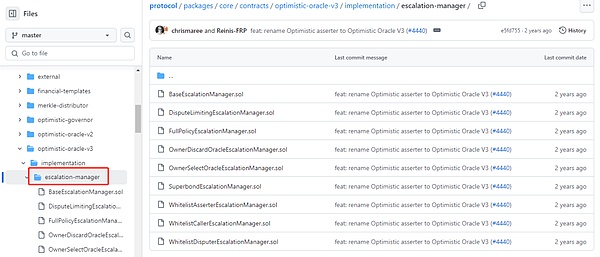

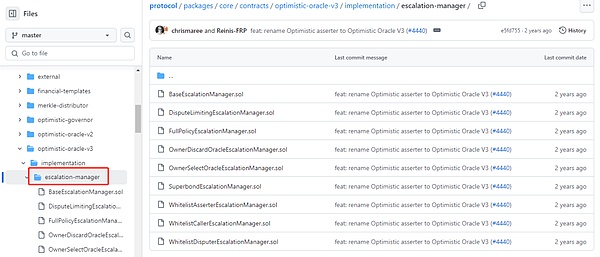

One difference between the versions is permissioning. OOV2 is permissionless: "anyone can propose an answer" and "anyone can dispute". OOV3 adds an Escalation Managers module that lets projects preset whitelists and switch to a permissioned model.

Disputer questions the answer

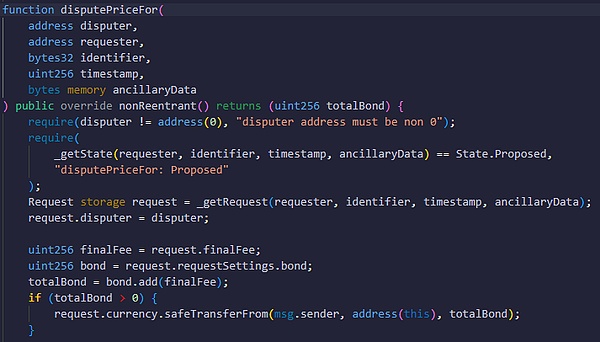

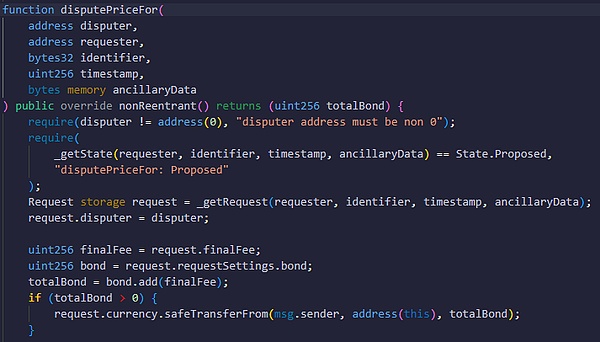

Back to the OOV2 workflow: anyone who disagrees with a proposed answer can call the disputePriceFor() function to dispute it. The question then switches to the Disputed state. The disputer must also post a bond and is penalized if the subsequent vote finds the disputer wrong.

Note that a question can be disputed only once. Only the first disputer's action succeeds.
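A matching sketch of the dispute step follows, again with a hypothetical Request class and bond amount. It enforces the two rules above: a bond is required, and only the first dispute counts. It also refuses disputes after the challenge period has ended, because an undisputed answer is then accepted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Request:
    proposer: str
    expiration: int            # end of the challenge period (timestamp)
    required_bond: int
    state: str = "Proposed"
    disputer: Optional[str] = None
    disputer_bond: int = 0


def dispute_price(req: Request, disputer: str, bond: int, now: int) -> bool:
    """Accept only the first dispute, and only while the challenge period is open."""
    if req.state != "Proposed" or now >= req.expiration:
        return False           # already disputed, or the window has closed
    if bond < req.required_bond:
        raise ValueError("bond below the required amount")
    req.disputer = disputer
    req.disputer_bond = bond   # forfeited if the vote sides with the proposer
    req.state = "Disputed"     # next step: escalate to the DVM vote
    return True


req = Request(proposer="alice", expiration=7_200, required_bond=500)
print(dispute_price(req, "carol", bond=500, now=3_600))  # True
print(dispute_price(req, "dave", bond=500, now=3_601))   # False: only one dispute
```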

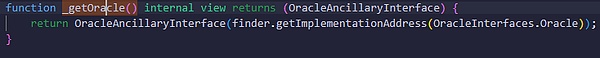

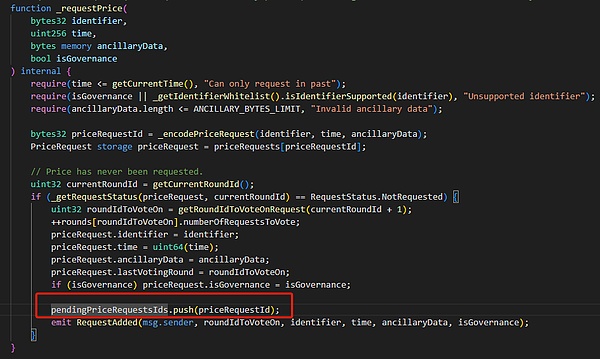

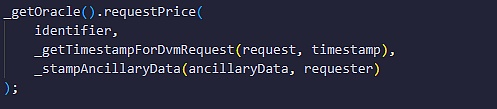

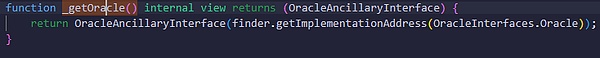

The contract then escalates the disputed question to the DVM arbitration module by calling a function named requestPrice(). This call passes the question information to VotingV2, the main contract of the DVM module, and starts the voting process.

DVM arbitration module and “Commit-Reveal” privacy voting

The VotingV2 contract inserts questions awaiting a vote into the PendingPriceRequestIds queue and emits an event announcing that new questions need to be voted on. The official UMA UI then shows publicly that the answer to the question has been disputed and needs a vote.

UMA requires voters to stake UMA tokens before voting on pending questions. Stakers can currently earn an APY of up to about 20%. To sustain this high APY, UMA continually issues new tokens, which attracts users to stake and vote.

To keep stakers from being passive, UMA penalizes two kinds of behavior. A staker who does not vote forfeits 0.1% of their staked tokens for each missed question. A staker whose vote does not match the final answer also forfeits 0.1%. The rate was previously 0.05% and was raised to 0.1% in the second half of 2024.
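To see how small these penalties are, here is the slashing rule applied to a few stakes. The 0.1% rate comes from the text. Two details are assumptions of this sketch: each penalty is applied to the remaining stake, and amounts are rounded down with integer division.

```python
SLASH_BPS = 10  # 0.1% expressed in basis points (1 bp = 0.01%)


def slash(stake: int, missed: int, wrong: int, bps: int = SLASH_BPS) -> int:
    """Apply the per-question penalty once for every missed vote and every wrong vote.

    Each penalty is taken from the stake remaining at that point, rounded down.
    """
    for _ in range(missed + wrong):
        stake -= stake * bps // 10_000
    return stake


print(slash(100_000, missed=1, wrong=0))    # 99900
print(slash(5_000_000, missed=0, wrong=1))  # 4995000
```

At this rate, a holder who votes 5 million UMA for the wrong answer forfeits about 5,000 UMA. This figure matters for the security analysis later in this article.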

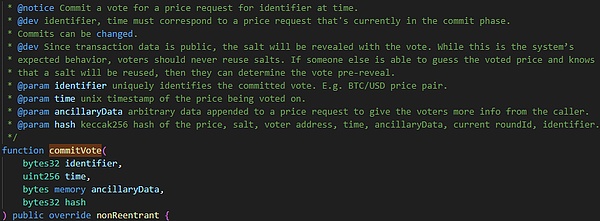

To prevent anyone from deliberately swaying others' votes, UMA uses a two-stage "Commit-Reveal" private voting scheme. In the Commit stage, each voter submits a hash to the chain without revealing their vote. When the Commit stage ends, no more votes are accepted and the Reveal stage begins. Each voter then discloses their original vote data to prove that it matches the hash submitted earlier.

In other words, you cannot directly see other people's voting intentions when voting, and you can only see them after the voting window ends.

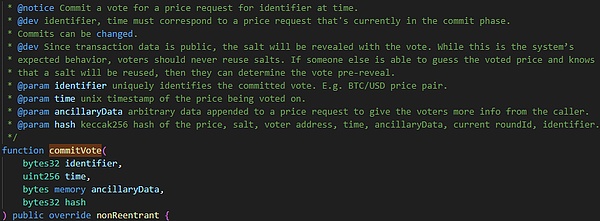

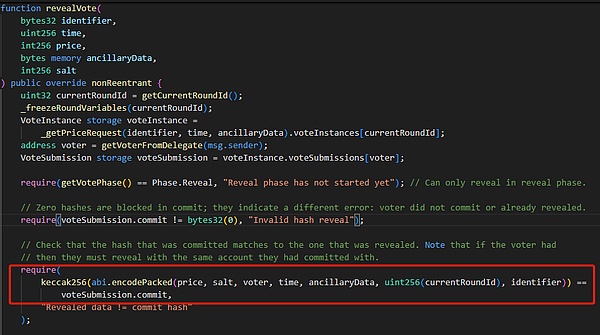

According to UMA's code, in the Commit phase voters upload the hash to the chain via the commitVote() function. The hash is generated off-chain with the keccak256 algorithm. Its input includes a parameter called price, which is the answer the voter believes is correct.

In binary qualitative scenarios, price takes one of two values (True/False). In price-feed and similar scenarios, it can be an arbitrary number.

The hash input also includes an off-chain random number, the salt. Without the salt, an observer could hash every possible answer and compare the results with your hash, which is easy when there are only two possible answers. As long as others cannot infer your input during the Commit phase, they will not know your price.

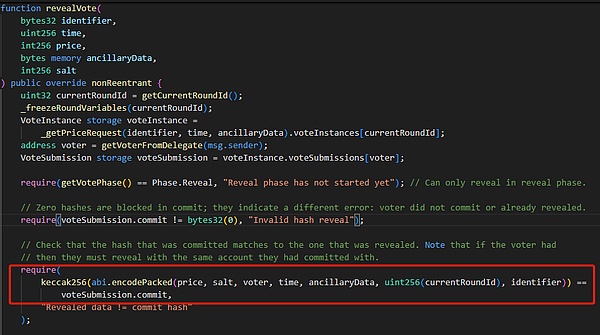

In the subsequent Reveal phase, the voter calls the revealVote() function and supplies each input used to generate the hash, including the price. The contract checks whether these inputs reproduce the previously submitted hash. If they do, the vote is counted.
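The commit and reveal steps can be sketched in Python. This is an illustrative model, not UMA's commitVote()/revealVote() code. The hash covers only price, salt, and voter (the real input may include more fields), and hashlib.sha3_256 stands in for keccak256.

```python
import hashlib
import secrets
from typing import Dict


def commit_hash(price: int, salt: bytes, voter: str) -> bytes:
    """Hash of (price, salt, voter); SHA3-256 stands in for keccak256 here."""
    data = price.to_bytes(32, "big", signed=True) + salt + voter.encode()
    return hashlib.sha3_256(data).digest()


class VotingRound:
    def __init__(self) -> None:
        self.phase = "commit"
        self.commits: Dict[str, bytes] = {}  # voter -> committed hash
        self.revealed: Dict[str, int] = {}   # voter -> revealed price

    def commit_vote(self, voter: str, h: bytes) -> None:
        if self.phase != "commit":
            raise RuntimeError("commit phase has ended")
        self.commits[voter] = h              # only the hash is public

    def reveal_vote(self, voter: str, price: int, salt: bytes) -> None:
        if self.phase != "reveal":
            raise RuntimeError("reveal phase has not started")
        if self.commits.get(voter) != commit_hash(price, salt, voter):
            raise ValueError("inputs do not match the committed hash")
        self.revealed[voter] = price         # counted only after a valid reveal


salt = secrets.token_bytes(32)               # kept off-chain until the reveal
rnd = VotingRound()
rnd.commit_vote("alice", commit_hash(1, salt, "alice"))
rnd.phase = "reveal"                         # commit window closes
rnd.reveal_vote("alice", 1, salt)
print(rnd.revealed)                          # {'alice': 1}
```

A reveal that changes the price, or uses a different salt, fails the hash check. A voter therefore cannot switch sides after seeing other reveals.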

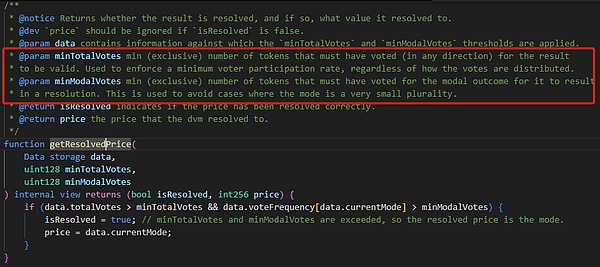

When the Reveal window closes, vote counting ends automatically, and logically the workflow is nearly complete. Note, however, that an EVM smart contract cannot act on its own initiative. It must be called by an external party. So in the final stage, someone must call settle() or settleAndGetPrice() to move the Requester's question to the "settled" state, and the answer is returned to the caller. Before settlement, the contract determines the correct answer from the on-chain tally. As mentioned earlier, each Voter submits a price during voting, and this price is free-form. The contract finds the price with the most votes and uses it as the valid answer.

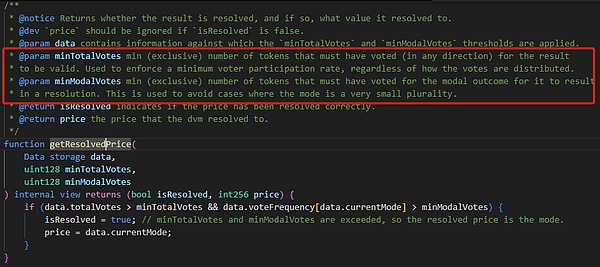

Votes are weighted by the amount of UMA each voter has staked: one UMA counts as one vote. A question resolves only if enough votes are collected. By default, the total participating votes must exceed 5 million, and more than 65% of the staked UMA must support the same answer.
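The counting rule can be sketched as below, using the default thresholds quoted above: more than 5 million participating votes, and more than 65% for one answer. The function and parameter names are hypothetical. The 65% share is measured against a `base` parameter, which defaults to the votes cast. To follow the wording above, which measures it against staked UMA, pass the total stake instead.

```python
from typing import Dict, Optional

GAT = 5_000_000  # participating votes must exceed this
SPAT_PCT = 65    # winning answer must exceed this percentage of `base`


def resolve(votes: Dict[int, int], base: Optional[int] = None,
            gat: int = GAT, spat_pct: int = SPAT_PCT) -> Optional[int]:
    """votes maps each revealed price to the UMA staked behind it (1 UMA = 1 vote).

    Returns the winning price, or None if either threshold is missed.
    `base` defaults to the votes cast; the total stake can be passed instead.
    """
    total = sum(votes.values())
    if total <= gat:
        return None
    price, top = max(votes.items(), key=lambda kv: kv[1])  # most-voted price
    if top * 100 <= (base if base is not None else total) * spat_pct:
        return None
    return price


print(resolve({1: 4_000_000, 0: 2_000_000}))     # 1
print(resolve({1: 3_000_000, 0: 3_000_000}))     # None
print(resolve({1: 6_000_000}, base=24_000_000))  # None
```

Percentages are compared with integer cross-multiplication, so no floating-point rounding can flip a borderline result.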

Then the contract can reward or punish Proposer/Disputer, and Voters based on the voting results. This requires someone to call the updateTrackers() function. Generally, there will be a dedicated Keeper node to trigger this operation.

If no one disputes the Proposer, no vote is needed. The OOV2 contract then handles the whole process from posting the question to finalizing the answer, without involving the VotingV2 contract. This is the simplicity of UMA's design.

What are the problems with UMA?

1. UMA's security model is obviously inconsistent with its official statement

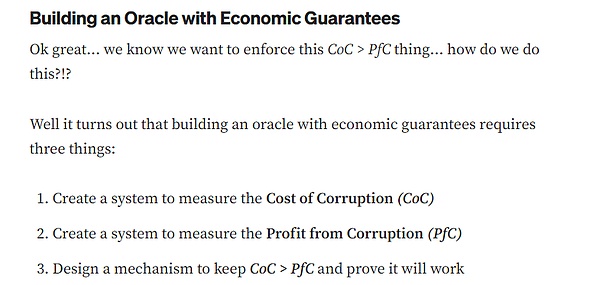

In the UMA white paper and Medium posts, the UMA team has stated that security rests on one premise: the cost of an attack must exceed its profit. It gave this example:

Suppose a vote-manipulation attack would yield a total profit of $100 million. To stay secure, the cost of acquiring 51% of the votes must remain above $100 million at all times. In practice, the UMA token peaked above $40 and now trades at only about $1.30, a drop of more than 95%, and the project clearly has not maintained this safeguard.

Moreover, UMA's penalty for an incorrect vote is extremely low, currently only 0.1%. Voters can therefore submit results that contradict the facts at little cost, and if the attack succeeds, they can sell their tokens afterwards. The actual cost of an attack therefore does not follow the security model above.

In theory, an attacker does not even need to buy tokens outright, because votes can be rented or bought from other holders. This is similar to a 51% attack on Bitcoin carried out by bribing miners or renting mining rigs. The cost of controlling more than 50% of the staked tokens is therefore likely far lower than UMA's official estimate.

Furthermore, as of May 2025, UMA had raised the voting threshold to 65%, and fewer than 24 million UMA were staked for voting. An attacker could therefore manipulate the oracle by controlling about 15.6 million tokens (65% of 24 million). At the current price of $1.30, these are worth about $20 million.

According to UMA's own website, the oracle secures more than $1.4 billion in assets across the platforms that integrate it. Clearly, the potential profit from an attack far exceeds its cost.
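The following sketch computes the arithmetic behind this comparison, using the figures above: 24 million staked UMA, a 65% threshold, $1.30 per token, and $1.4 billion secured. Amounts are kept in integer cents to avoid rounding noise.

The estimate has three limitations:

* It ignores the price impact of buying 15.6 million tokens.
* It ignores the cheaper route of renting votes.
* Fewer than 24 million UMA were staked, so the real requirement would be somewhat lower.

```python
def attack_cost(staked: int, threshold_pct: int, price_cents: int):
    """Tokens needed to reach threshold_pct of the stake, and their cost in whole dollars."""
    tokens = staked * threshold_pct // 100
    cost_usd = tokens * price_cents // 100
    return tokens, cost_usd


tokens, cost = attack_cost(staked=24_000_000, threshold_pct=65, price_cents=130)
secured = 1_400_000_000  # assets secured, per UMA's website as cited above
print(f"{tokens:,} UMA, about ${cost:,}; secured value is {secured / cost:.0f}x the cost")
# 15,600,000 UMA, about $20,280,000; secured value is 69x the cost
```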

2. UMA's privacy voting has limited effect

UMA's two-stage "Commit-Reveal" private voting borrows an idea from Austin Thomas Griffith on Gitcoin. In theory, hiding votes during voting and revealing them afterwards does protect privacy. However, UMA adds a "Roll" mechanism to handle rounds with insufficient votes.

If a round collects too few votes, the request rolls into another round. New voters in that round can then see the revealed votes from the previous round, which clearly opens the door to manipulation.

Here we can imagine a scenario: Assuming that the current total number of staked UMAs is 20 million, an answer can be finalized with 10 million votes (1 staked UMA is 1 vote), and the question is "Will Musk be assassinated in 2025?"

After the first round, Yes and No have 8 million votes each. Neither answer reaches the 10 million threshold, so the question automatically rolls into a second round. If a whale controls the remaining 4 million votes, it can vote for whichever answer serves its interests rather than the facts.
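The scenario above can be written as a toy model. Its assumptions are ours, not UMA's:

* Votes in the second round are tallied from scratch.
* Round-1 voters repeat the choices they already revealed.
* The whale controls exactly the remaining 4 million votes.

```python
from typing import Dict, Optional

THRESHOLD = 10_000_000  # votes needed to finalize in this scenario


def finalize(tally: Dict[str, int], threshold: int = THRESHOLD) -> Optional[str]:
    """Return the answer that reached the threshold, or None if the vote rolls."""
    for answer, votes in tally.items():
        if votes >= threshold:
            return answer
    return None


def second_round(public_tally: Dict[str, int], whale_choice: str,
                 whale_votes: int = 4_000_000) -> Dict[str, int]:
    """Round-1 voters repeat their now-public choices; the whale, having
    seen them, adds all of its votes to one side."""
    tally = dict(public_tally)
    tally[whale_choice] += whale_votes
    return tally


round1 = {"yes": 8_000_000, "no": 8_000_000}
print(finalize(round1))                       # None: the vote rolls
print(finalize(second_round(round1, "yes")))  # yes
print(finalize(second_round(round1, "no")))   # no
```

Whichever side the whale picks reaches 12 million votes. Once the first-round tally is public, the outcome is entirely the whale's choice.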

Clearly, private voting works only if enough votes are collected in the first round. If they are not, there is no privacy protection.

In addition, others' intentions cannot be seen on-chain during the first round, but a manipulator can work off-chain. They can announce on social media that a large number of tokens will be voted for a certain outcome, stirring up community sentiment, or collude privately with other large holders.

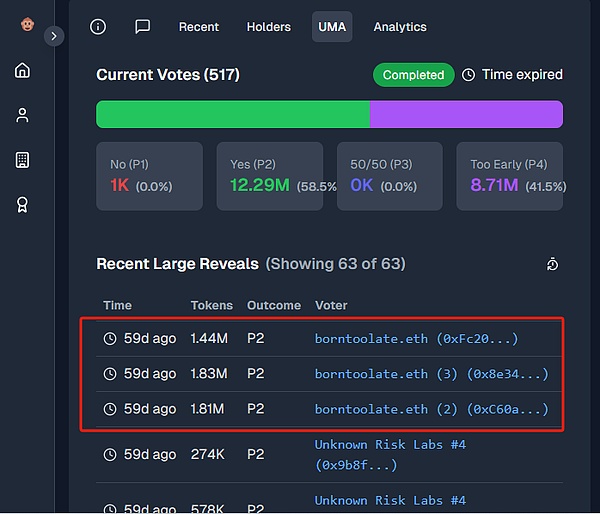

In the Ukraine mineral agreement case, the media generally held that a whale named borntoolate used 5 million UMA (about 25% of the staked UMA) to decide the final answer. In fact, there may have been private collusion behind it. UMA's private voting is therefore only a stopgap and cannot effectively prevent manipulation.

And if the UMA team steps in to punish malicious voters, it will only sink deeper into the bootstrapping trap. As noted at the beginning of this article, punishing malicious voters through human intervention means solving the problems of rule by man with more rule by man. This raises thorny questions about where the power to punish comes from and how it is distributed. In the end, the approach is better abandoned.

Solution: Replace humans with AI Agent

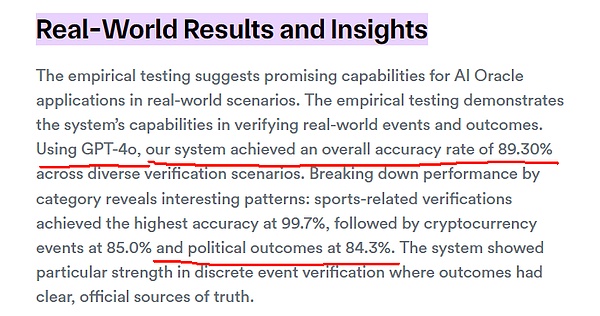

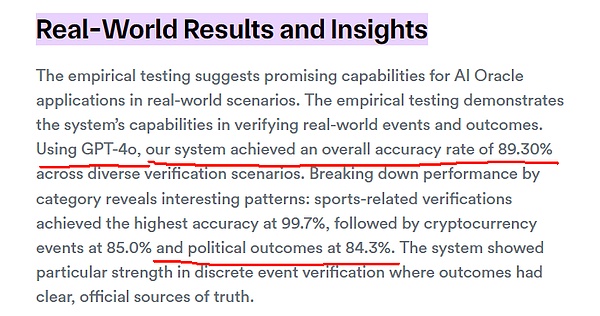

To address these pain points, the industry has begun considering AI Agents to make oracle rulings and reduce reliance on human voting. The main weakness of AI Agents, however, is their tendency to hallucinate. According to Chainlink's official test results, an AI oracle using GPT-4o reached an accuracy of about 84% on complex political events, well short of the very high accuracy an oracle requires. This figure will improve as large models iterate, but errors may still occur.

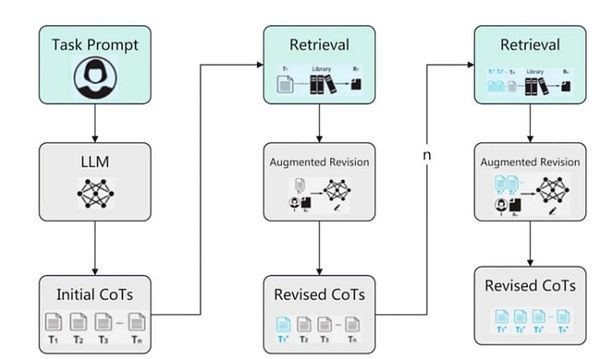

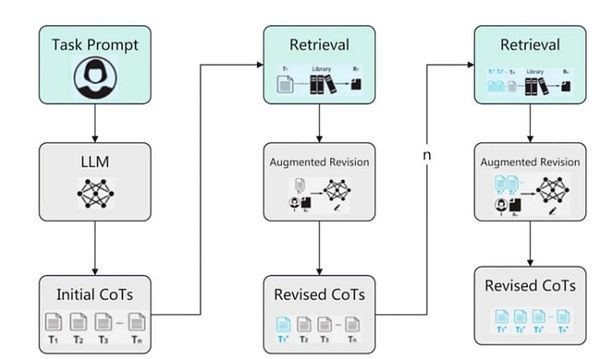

The DeepSafe team believes that decentralized verification can guard against mistakes by individual AI Agents. This builds on CRVA, DeepSafe's existing decentralized verification network. Each CRVA node can be equipped with the deep-search capability common in large AI models.

For example, the CRVA1 node integrates DeepSeek, CRVA2 integrates ChatGPT, and CRVA3 integrates Grok. Each CRVA node thus becomes an independent AI Agent. We then take a weighted average of the answers submitted by the AI Agents as the result of decentralized verification.
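Here is a minimal sketch of the weighted average. It assumes that each node returns a probability that the event occurred and carries a weight, for example one based on its past accuracy. The weights, scores, and the 0.5 cut-off are hypothetical. The text does not specify how weights are assigned.

```python
from typing import Dict, Tuple


def aggregate(answers: Dict[str, Tuple[float, float]]) -> float:
    """answers maps node -> (weight, probability that the event happened)."""
    total_weight = sum(w for w, _ in answers.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(w * p for w, p in answers.values()) / total_weight


answers = {
    "CRVA1 (DeepSeek)": (1.0, 0.9),  # hypothetical weights and scores
    "CRVA2 (ChatGPT)": (1.0, 0.8),
    "CRVA3 (Grok)": (0.5, 0.2),
}
score = aggregate(answers)           # (0.9 + 0.8 + 0.1) / 2.5 = 0.72
print("YES" if score > 0.5 else "NO", round(score, 2))  # YES 0.72
```

A single dissenting node lowers the score but cannot flip the verdict on its own. That is the point of aggregating across independent models.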

Specifically, the CRVA network uses a cryptographic lottery algorithm to randomly select several groups of nodes as proposers. When submitting results, these proposers must provide enough of their reasoning process, context, and intermediate evidence. This information is called the "verifiable reasoning path". Other nodes in the CRVA network can feed this information to their local AI models to review the reasoning. Finally, all nodes can sign the answer published by the proposers, achieving decentralized verification.

(For an introduction to CRVA, see "From the uniBTC freeze incident to see the importance of trustless custody".)
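The selection and sign-off steps can be sketched as follows. This is not CRVA's actual algorithm:

* Ranking nodes by a SHA-256 hash of a shared seed stands in for the cryptographic lottery.
* A set of node IDs stands in for real signatures.
* The two-thirds quorum is an assumed parameter.

```python
import hashlib
from typing import List, Set


def select_proposers(node_ids: List[str], seed: bytes, k: int) -> List[str]:
    """Rank nodes by SHA-256(seed || node_id) and take the k lowest.

    Stand-in for a cryptographic lottery such as a VRF: the result is
    deterministic for a given seed, so anyone can recompute it, and it is
    unpredictable only if the seed itself is.
    """
    ranked = sorted(node_ids, key=lambda n: hashlib.sha256(seed + n.encode()).digest())
    return ranked[:k]


def has_quorum(approvals: Set[str], node_ids: List[str], num: int = 2, den: int = 3) -> bool:
    """True if at least num/den of the nodes signed off on the reasoning path."""
    valid = approvals & set(node_ids)  # ignore approvals from unknown nodes
    return len(valid) * den >= len(node_ids) * num


nodes = [f"CRVA{i}" for i in range(1, 8)]
proposers = select_proposers(nodes, seed=b"round-42", k=2)  # hypothetical seed
print(proposers)
print(has_quorum({"CRVA1", "CRVA2", "CRVA3", "CRVA4", "CRVA5"}, nodes))  # True: 5 of 7
print(has_quorum({"CRVA1", "CRVA2", "CRVA3", "CRVA4"}, nodes))           # False: 4 of 7
```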

We can also combine this approach with the UMA oracle's design described above. Under normal circumstances, humans vote and CRVA does not intervene. But if someone believes the voting result has been manipulated, the AI Agents and CRVA can step in to check the result and impose heavy penalties on voters who voted wrongly.
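Here is a sketch of this hybrid flow, under our own assumptions:

* The human vote stands unless it is challenged.
* When it is challenged, the CRVA verdict replaces it.
* Every voter who contradicted the verdict loses a hypothetical 50% of their stake.

The text says only that penalties would be "heavy", so the rate is illustrative.

```python
from typing import Dict, Optional, Tuple

HEAVY_PENALTY_BPS = 5_000  # hypothetical 50% penalty, far above the current 0.1%


def settle(votes: Dict[str, str], stakes: Dict[str, int], vote_result: str,
           challenged: bool, crva_verdict: Optional[str] = None,
           penalty_bps: int = HEAVY_PENALTY_BPS) -> Tuple[str, Dict[str, int]]:
    """Return the final answer and the voters' stakes after any penalties."""
    new_stakes = dict(stakes)
    if not challenged:
        return vote_result, new_stakes    # normal path: the human vote stands
    if crva_verdict is None:
        raise ValueError("a challenged result needs a CRVA verdict")
    for voter, answer in votes.items():
        if answer != crva_verdict:        # voted against the CRVA finding
            new_stakes[voter] -= new_stakes[voter] * penalty_bps // 10_000
    return crva_verdict, new_stakes


votes = {"whale": "yes", "alice": "no"}
stakes = {"whale": 5_000_000, "alice": 100_000}
print(settle(votes, stakes, vote_result="yes", challenged=False))
# ('yes', {'whale': 5000000, 'alice': 100000})
print(settle(votes, stakes, vote_result="yes", challenged=True, crva_verdict="no"))
# ('no', {'whale': 2500000, 'alice': 100000})
```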

Wary of the AI's judgment and the prospect of heavy fines, voters should no longer dare to vote for the wrong answer. The CRVA network is itself highly decentralized and more neutral than human governance. It can therefore largely offset the risk of centralized abuse.

Catherine
