Author: Azi.eth.sol | zo.me Source: X, @MagicofAzi Translation: Shan Ouba, Golden Finance

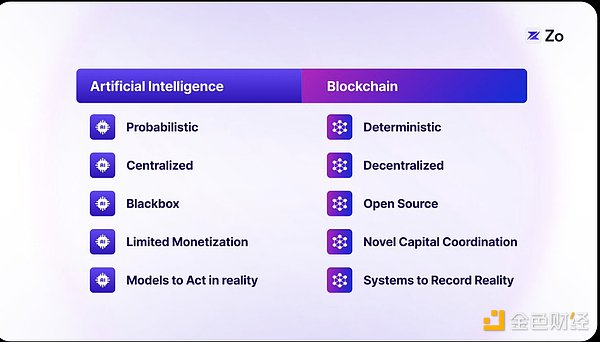

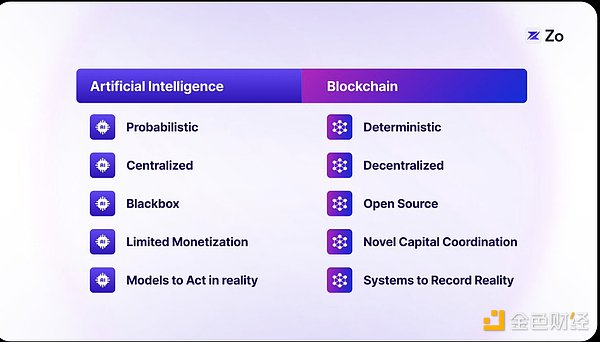

Artificial intelligence (AI) and blockchain technology are two transformative forces that are reshaping our world. AI enhances human cognitive abilities through machine learning and neural networks, while blockchain technology introduces verifiable digital scarcity and enables trustless coordination. When these technologies converge, they lay the foundation for a new generation of the Internet: a network of autonomous agents interacting with decentralized systems. This "Agentic Web" introduces a new category of digital citizens: AI agents that can navigate, negotiate, and transact independently. This shift redistributes power in the digital realm, enabling individuals to regain sovereignty over their own data while fostering an ecosystem where humans and artificial intelligence collaborate in unprecedented ways.

The Evolution of the Web

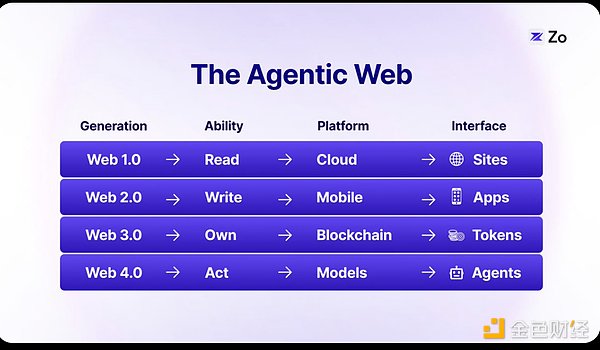

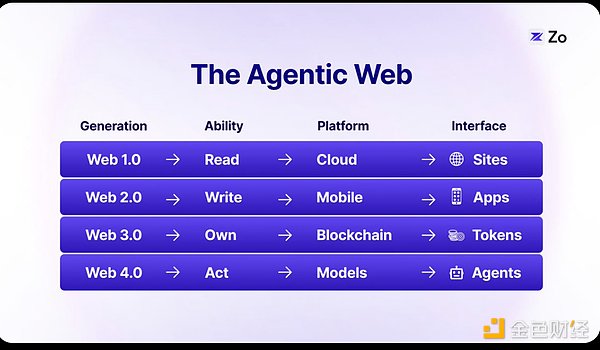

To understand where we are heading, let’s first review the evolution of the web, in which each stage brought different capabilities and architectural paradigms.

The first two generations of the web focused on propagating information, while the latter two enhance it. Web 3.0 introduced data ownership through tokens, and Web 4.0 is now infusing intelligence through large language models (LLMs).

From Large Language Models to Agents: A Natural Evolution

LLMs represent a quantum leap in machine intelligence, as dynamic, pattern-matching systems that transform vast amounts of knowledge into contextual understanding through probabilistic computation. However, their true potential emerges when LLMs are architected as agents—evolving from mere information processors to goal-directed entities capable of perception, reasoning, and action. This shift creates an emergent intelligence capable of sustained and meaningful collaboration through language and action.

The term “agent” introduces a new paradigm that changes how humans interact with AI, moving beyond the limitations and negative connotations of traditional chatbots. This shift is not merely semantic; it fundamentally redefines how AI systems can operate autonomously while still collaborating meaningfully with humans. Agentic workflows ultimately enable markets to form around specific user intent.

Ultimately, the agentic web is more than just a new level of intelligence: it fundamentally changes how we interact with digital systems. Whereas past versions of the web relied on static interfaces and pre-set user paths, the agentic web introduces a dynamic runtime infrastructure where computation and interfaces adapt in real time based on the user’s context and intent.

Traditional Websites vs. the Agentic Web

The traditional website, the basic unit of today’s internet, provides a fixed interface where users read, write, and interact with information through pre-set paths. While fully functional, this model limits users to interfaces designed for general use rather than individual needs. The agentic web transcends these limitations with context-aware computing, adaptive interface generation, and predictive action flows, unlocked through retrieval-augmented generation (RAG) and other real-time information retrieval innovations.

Consider how TikTok has revolutionized content consumption by creating highly personalized feeds that adapt to user preferences in real time. The agentic web extends this concept beyond content recommendation to the generation of the interface itself. Instead of navigating through a fixed web layout, users interact with dynamically generated interfaces that anticipate and facilitate their next actions. This shift from static websites to dynamic, agent-driven interfaces represents a fundamental evolution in the way we interact with digital systems, from a navigation-based interaction model to an intent-based one.

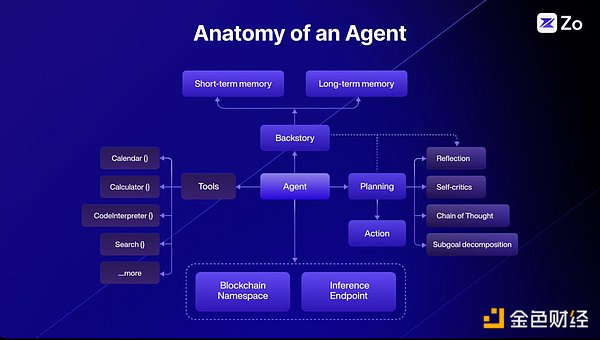

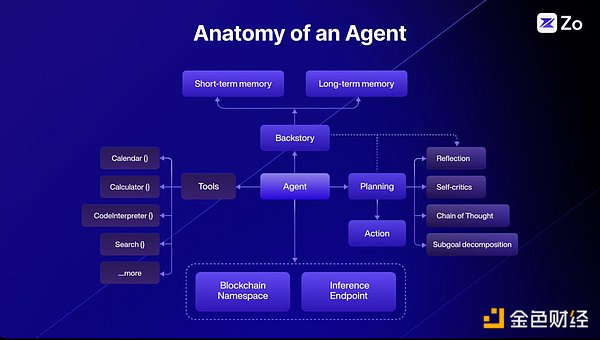

The Architecture of Agents

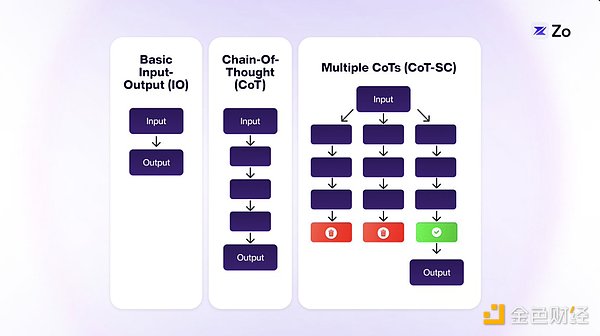

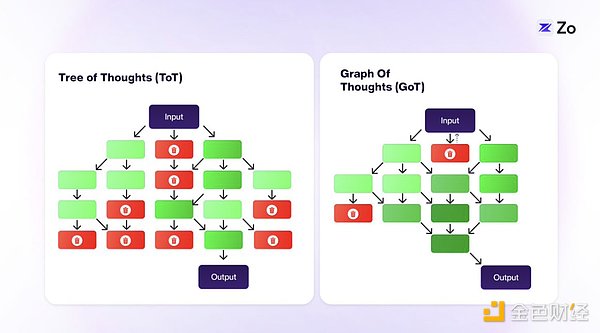

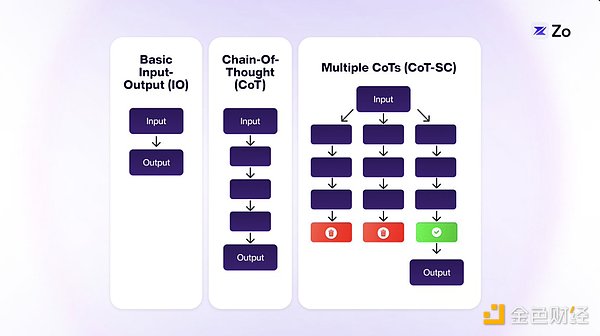

Agent-based architectures are an active area of exploration for researchers and developers, and new methods are constantly being developed to enhance reasoning and problem-solving capabilities. Techniques such as Chain-of-Thought (CoT), Tree-of-Thought (ToT), and Graph-of-Thought (GoT) improve the ability of large language models (LLMs) to handle complex tasks by emulating more detailed, human-like cognitive processes.

Chain-of-Thought (CoT) enables large language models to break down complex tasks into smaller, more manageable steps. This approach is particularly effective for problems that require logical reasoning, such as writing short Python scripts or solving mathematical equations.

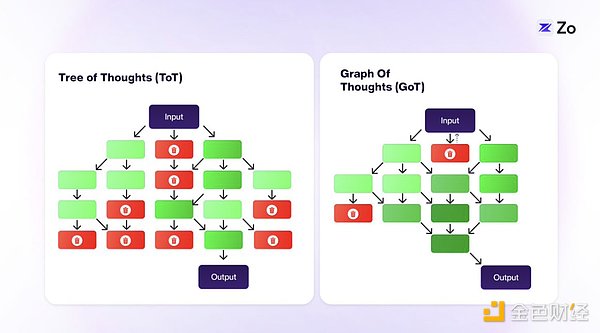

Tree-of-Thought (ToT) builds on CoT by adopting a tree structure that allows exploration of multiple independent thought paths. This improvement enables LLMs to handle more complex tasks. In ToT, each "thought" (a piece of text output by the LLM) is connected only to the thoughts directly before and after it, forming a local chain (a branch). Although this structure provides more flexibility than CoT, it still limits the potential for communication across branches.

Graph-of-Thought (GoT) takes this concept further, combining classic data structures with LLMs. This approach broadens the scope of ToT by allowing any "thought" to connect to any other thought in the graph. This interconnection between ideas is closer to the human cognitive process.

GoT's graph-like structure generally reflects human thinking more accurately than CoT or ToT. Our thinking is sometimes chain-like or tree-like, for example when developing emergency plans or standard operating procedures, but these cases are exceptions rather than the norm. More often, human thought jumps across ideas instead of following a strict sequence, forming a complex, interconnected network that GoT's graph structure captures more faithfully.

The graph-like approach in GoT makes the exploration of ideas more dynamic and flexible, potentially leading to more creative and comprehensive problem-solving capabilities.
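The structural difference between the three techniques can be made concrete with a small sketch. It assumes thoughts are plain string labels and that each edge points from an earlier thought to one that builds on it; the function name `classify_topology` and the sample thoughts are illustrative, not part of any published CoT, ToT, or GoT implementation.

```python
from collections import defaultdict

def classify_topology(edges):
    """Classify directed thought -> thought edges as 'chain', 'tree', or 'graph'.

    Assumes the edges form a connected, acyclic structure.
    """
    parents = defaultdict(set)   # thought -> earlier thoughts it builds on
    children = defaultdict(set)  # thought -> later thoughts derived from it
    for src, dst in edges:
        parents[dst].add(src)
        children[src].add(dst)
    # A thought with several parents merges branches: only a graph allows that.
    if any(len(p) > 1 for p in parents.values()):
        return "graph"
    # Every thought has one parent: branching makes it a tree, otherwise a chain.
    if any(len(c) > 1 for c in children.values()):
        return "tree"
    return "chain"

cot = [("t1", "t2"), ("t2", "t3")]                   # one linear path
tot = [("t1", "t2a"), ("t1", "t2b"), ("t2a", "t3")]  # independent branches
got = tot + [("t2b", "t3")]                          # t3 aggregates two branches
```

The only change between `tot` and `got` is a single extra edge, which is exactly the cross-branch connection that ToT rules out.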

These recursive graph-based operations are just one step in the agentic workflow. The obvious next evolution is multiple agents with different specializations coordinating toward a specific goal. The appeal of agents lies in how they can be combined.

Agents make it possible to modularize and parallelize LLMs through multi-agent coordination.

Multi-agent systems

The concept of multi-agent systems is not new. Its roots can be traced back to Marvin Minsky’s book The Society of Mind, in which he proposed that multiple modular minds working together could outperform a single, monolithic mind. While ChatGPT and Claude are single agents, Mistral popularized the Mixture of Experts approach. We believe that, extended further, this idea makes an agent network architecture the ultimate form of this intelligence topology.

From a biomimetic perspective, unlike the billions of identical neurons in AI models that are connected in a uniform, predictable way, the human brain (essentially a conscious machine) is extremely heterogeneous at the organ and cellular level. Neurons communicate through complex signals involving neurotransmitter gradients, intracellular cascades, and various regulatory systems, making their functions much more complex than simple binary states.

This shows that in biology, intelligence does not come merely from the number of components or the size of the training dataset. It emerges from complex interactions between different specialized units, which is an inherently analog process.

Therefore, developing millions of small models rather than a few large ones, and enabling them to work in harmony, is more likely to drive innovation in cognitive architectures, similar to the construction of multi-agent systems.

Multi-agent system design has several advantages over single-agent design: it is easier to maintain, understand, and extend. Even when only a single agent interface is required, implementing it on a multi-agent framework makes the system more modular and lets developers add or remove components as needed. In other words, a multi-agent architecture can be an effective way to build even a single-agent system.

Advantages of Multi-Agent Systems

While large language models (LLMs) have demonstrated impressive capabilities, such as generating human-like text, solving complex problems, and handling a variety of tasks, individual LLM agents face limitations that reduce their effectiveness in real-world applications. Here are five major challenges facing single-agent systems and how multi-agent collaboration can overcome them and unlock the full potential of LLMs.

Overcoming Hallucinations through Cross-Validation

Single LLM agents often hallucinate, generating incorrect or nonsensical information. Despite extensive training, a model's output can appear plausible while lacking factual accuracy. Multi-agent systems allow agents to cross-validate information, reducing the risk of errors. By drawing on their respective specializations, agents can produce more reliable and accurate responses.
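One simple form of cross-validation is majority voting over the answers of several agents. The sketch below assumes each agent returns a short string answer; `cross_validate` and its `quorum` parameter are hypothetical names chosen for illustration, and real systems would compare answers far more carefully than by normalized string equality.

```python
from collections import Counter

def cross_validate(answers, quorum=0.5):
    """Return the answer backed by more than `quorum` of the agents, else None."""
    if not answers:
        return None
    normalized = [a.strip().lower() for a in answers]
    answer, votes = Counter(normalized).most_common(1)[0]
    # Accept only when a strict majority (by default) of agents agree.
    return answer if votes / len(normalized) > quorum else None
```

Voting reduces the chance that one agent's hallucination reaches the user, but it does not help when agents share the same blind spot, so disagreement (`None`) is best treated as a signal to escalate rather than to guess.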

Expanding the Context Window through Distributed Processing

LLMs have a limited context window, which makes it difficult to process longer documents or conversations. In a multi-agent framework, agents can share the processing burden, with each agent processing a portion of the context. Through inter-agent communication, they are able to maintain consistency across the entire text, effectively expanding the context window.
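As a rough illustration of sharing the processing burden, the sketch below splits a long token sequence into overlapping chunks that each fit one agent's window. The function name and the `overlap` parameter are assumptions made for this example; the overlap is one simple way to give neighbouring agents shared context, not a method described in the article.

```python
def split_for_agents(tokens, window, overlap=0):
    """Split `tokens` into chunks of at most `window` items.

    Consecutive chunks share `overlap` items so neighbouring agents see
    some common context.
    """
    if window <= 0 or not 0 <= overlap < window:
        raise ValueError("need window > 0 and 0 <= overlap < window")
    step = window - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break  # the last chunk already reaches the end of the input
    return chunks
```

For example, `split_for_agents(list(range(10)), window=4, overlap=1)` yields three chunks, with items 3 and 6 each seen by two agents.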

Increasing Efficiency through Parallel Processing

Single LLMs typically process one task at a time, which results in slow response times. Multi-agent systems support parallel processing, allowing multiple agents to work on different tasks simultaneously. This improves efficiency and reduces response time, allowing enterprises to handle multiple queries without delays.
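A minimal sketch of this parallelism using Python's `asyncio`: the agents here are stand-ins that only sleep to simulate latency (`run_agent` and the agent names are hypothetical), but the same pattern applies when each coroutine awaits a real model call.

```python
import asyncio

async def run_agent(name, task, delay=0.01):
    """Stand-in for a model call; `delay` simulates network and inference latency."""
    await asyncio.sleep(delay)
    return f"{name} finished {task}"

async def run_parallel(assignments):
    """Run all (agent, task) pairs concurrently; results keep the input order."""
    return await asyncio.gather(*(run_agent(n, t) for n, t in assignments))

results = asyncio.run(run_parallel([("researcher", "search"), ("coder", "patch")]))
```

Because the agents wait concurrently, total latency is close to that of the slowest agent rather than the sum of all of them.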

Facilitating Collaboration for Complex Problem Solving

A single LLM is often overwhelmed when solving complex problems that require diverse expertise. Multi-agent systems promote collaboration among agents, with each agent contributing its unique skills and perspectives. By working together, agents are able to solve complex challenges more effectively and provide more comprehensive and innovative solutions.

Improving Accessibility through Resource Optimization

Advanced LLMs require large amounts of computing resources, which makes them expensive and hard to deploy widely. Multi-agent frameworks optimize resource usage by assigning tasks to different agents, reducing overall computing costs. This makes AI technology accessible to more organizations.
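One way to picture this kind of resource optimization is a router that sends each task to the cheapest agent able to handle it. Everything in the sketch is hypothetical: the `capability` and `cost` scores, the model names, and the assumption that task difficulty can be estimated up front.

```python
def route(task_difficulty, models):
    """Pick the cheapest model whose capability score covers the task."""
    capable = [m for m in models if m["capability"] >= task_difficulty]
    if not capable:
        raise LookupError("no model can handle this task")
    return min(capable, key=lambda m: m["cost"])["name"]

# Illustrative numbers only: a small specialist and a large generalist.
MODELS = [
    {"name": "small", "capability": 3, "cost": 1},
    {"name": "large", "capability": 9, "cost": 20},
]
```

With these numbers, easy tasks go to the small model and only hard ones reach the expensive model, which is where the cost savings would come from.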

While multi-agent systems demonstrate compelling advantages in distributed problem solving and resource optimization, their true potential is revealed when considering their implementation at the edge of the network. As AI continues to advance, the convergence of multi-agent architectures and edge computing creates a powerful synergy—enabling not only collaborative intelligence but also efficient local processing across countless devices. This distributed approach to AI deployment naturally extends the benefits of multi-agent systems, bringing specialized, collaborative intelligence to where it’s needed most: the end user.

Intelligence at the Edge

The pervasiveness of AI in the digital realm is driving a fundamental reconfiguration of computing architectures. As intelligence becomes part of our daily digital interactions, we’re witnessing a natural bifurcation of computing: specialized data centers handling complex reasoning and domain-specific tasks, while edge devices process personalized, context-sensitive queries locally. This shift toward edge inference is more than just an architectural preference—it’s a necessity driven by multiple key factors.

First, the sheer volume of AI-driven interactions can overwhelm centralized inference providers, creating unsustainable bandwidth demands and latency issues.

Second, edge processing enables real-time responses, a critical feature for applications such as self-driving cars, augmented reality, and IoT devices.

Third, edge inference protects user privacy by keeping sensitive data on personal devices.

Fourth, edge computing significantly reduces energy consumption and carbon footprint by minimizing the flow of data across the network.

Finally, edge inference supports offline capabilities and resiliency, ensuring that AI functionality is present even when network connectivity is limited.

This distributed intelligence paradigm is more than just an optimization of current systems; it fundamentally reimagines how we deploy and interact with AI in an increasingly connected world.

In addition, we are witnessing a fundamental shift in the computational requirements of LLMs. While the huge computational requirements of training large language models dominated AI development over the past decade, we are now entering an era where inference-time computation is central. This shift is particularly evident in the emergence of agent-based AI systems, such as OpenAI’s Q* breakthrough, which demonstrates that dynamic inference requires massive real-time computational resources.

Unlike training-time computation, which is a one-time investment in model development, inference-time computation represents a continuous computational conversation for autonomous agents to reason, plan, and adapt to new situations. This shift from static model training to dynamic agent inference requires a fundamental rethinking of our computing infrastructure - in this new architecture, edge computing is not just beneficial, but essential.

As this transformation unfolds, we are witnessing the rise of an edge inference market, where thousands of connected devices - from smartphones to smart home systems - form a dynamic computing grid. These devices can seamlessly exchange inference power, creating an organic market in which computing resources flow to where they are most needed. The excess computing power of idle devices becomes a valuable resource that can be traded in real time, enabling a more efficient and resilient infrastructure than traditional centralized systems.

This decentralization of inference computing not only optimizes resource utilization but also creates new economic opportunities in the digital ecosystem, where every connected device can become a micro-provider of AI capabilities. As a result, the future of AI will be defined not only by the capabilities of individual models but also by the collective intelligence of connected edge devices, which form a global, decentralized inference market: in effect, a spot market for verifiable inference driven by supply and demand.
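To make the spot-market analogy concrete, here is a toy matching routine. It assumes each order is an `(id, price)` pair for a single unit of inference and that trades settle at the midpoint between bid and ask; both are simplifying assumptions for illustration, not a mechanism proposed by the article.

```python
def match_orders(bids, asks):
    """Greedily match the highest bids with the lowest asks."""
    bids = sorted(bids, key=lambda b: -b[1])  # buyers willing to pay most first
    asks = sorted(asks, key=lambda a: a[1])   # cheapest idle devices first
    trades = []
    for (buyer, bid), (seller, ask) in zip(bids, asks):
        if bid < ask:
            break  # no remaining pair can clear
        trades.append((buyer, seller, (bid + ask) / 2))  # midpoint price (assumed)
    return trades
```

For instance, with bids `[("u1", 5), ("u2", 2)]` and asks `[("phone", 1), ("laptop", 4)]`, only `u1` and `phone` trade, at a price of 3.0.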

Agent-centric interactions

Today, LLMs allow us to access a vast amount of information through conversations, rather than traditional browsing. This conversational approach will soon become more personalized and local, as the Internet transforms into an AI agent platform rather than a space for human users only.

From the user’s perspective, the focus will shift from selecting the “best model” to getting the most personalized answer. The key to better answers lies in combining the user’s own data with general Internet knowledge. Initially, larger context windows and retrieval-augmented generation (RAG) will help integrate personal data, but eventually, personal data will surpass general Internet data in importance.
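The retrieval step of RAG over personal data can be sketched in a few lines. This toy version ranks notes by word overlap with the query; production systems typically use embedding similarity instead, and the names `retrieve` and `build_prompt` are illustrative.

```python
def retrieve(query, documents, k=2):
    """Rank documents by word overlap with the query (a stand-in for embedding search)."""
    q = set(query.lower().split())
    ranked = sorted(documents, key=lambda d: len(q & set(d.lower().split())), reverse=True)
    return ranked[:k]

def build_prompt(query, personal_notes, k=2):
    """Prepend the most relevant personal notes to the question sent to a model."""
    context = "\n".join(retrieve(query, personal_notes, k))
    return f"Context:\n{context}\n\nQuestion: {query}"

notes = [
    "I am allergic to peanuts",
    "My flight leaves Friday",
    "I prefer window seats on flights",
]
```

The general model never needs to be retrained on the user's data; the personal context travels with each request.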

This will lead to a future where each of us has a personal AI model that interacts with a wide range of expert models on the Internet. Initially, personalization will happen alongside remote models, but concerns about privacy and response speed will move more interactions to local devices. This will create a new boundary, not between humans and machines, but between our personal models and the Internet's expert models.

The traditional Internet model, in which users access raw data directly, will become obsolete. Instead, your local model will communicate with remote expert models, gathering information and presenting it to you in the most personalized, high-bandwidth way possible. These personal models will become increasingly indispensable as they learn more about your preferences and habits.

The Internet will transform into an ecosystem of interconnected models: local, high-context personal models and remote, high-knowledge expert models. This will involve new technologies, such as Federated Learning, to update information between these models. As the machine economy evolves, we will need to reimagine the computational foundations that underpin this process, especially in terms of computation, scalability, and payments. This will lead to a reorganization of the information space that is agent-centric, sovereign, highly composable, self-learning, and constantly evolving.
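Federated learning is usually introduced through federated averaging, in which a coordinator combines locally trained weights without ever seeing the underlying data. The sketch below assumes each client reports a flat list of weights together with the number of samples it trained on; it illustrates the averaging step only, not a full training loop.

```python
def federated_average(updates):
    """Average client weight vectors, weighted by each client's sample count.

    `updates` is a list of (weights, num_samples) pairs with equal-length weights.
    """
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]
```

A client with three times as much data pulls the shared model three times as hard: averaging `([1.0, 2.0], 1)` and `([3.0, 4.0], 3)` gives `[2.5, 3.5]`.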

The Architecture of Agent Protocols

In the Agentic Web, human interactions with agents evolve into complex networks of communication between agents. This architecture fundamentally reimagines the structure of the Internet, with sovereign agents becoming the primary interface for digital interactions. The following are the core primitives required to implement an agent protocol:

Sovereign Identity

- Digital identities transition from traditional IP addresses to cryptographic public/private key pairs owned by agents.
- A blockchain-based namespace system replaces traditional DNS, eliminating central points of control.
- A reputation system tracks the reliability and capability metrics of agents.
- Zero-knowledge proofs enable privacy-preserving authentication.
- Composable identities enable agents to manage multiple contexts and roles.

Autonomous agents

- Self-directed entities with:
  - Natural language understanding and intent parsing
  - Multi-step planning and task decomposition
  - Resource management and optimization
  - Learning from interaction and feedback
  - Autonomous decision making within defined parameters
- Agent specialization and marketplaces for specific capabilities
- Built-in security mechanisms and alignment protocols

Data infrastructure

- Real-time data ingestion and processing capabilities
- Distributed data verification and validation mechanisms
- Hybrid systems combining zkTLS, traditional training datasets, real-time web scraping, and data synthesis
- Collaborative learning networks
  - RLHF (Reinforcement Learning from Human Feedback) networks
  - Distributed feedback collection
  - Quality-weighted consensus mechanisms
  - Dynamic model adjustment protocols

Computation layer

- Verifiable inference protocols, ensuring:
  - Computational integrity
  - Result reproducibility
  - Resource efficiency
- Decentralized computing infrastructure, with:
  - Peer-to-peer computing markets
  - Proof-of-computation systems
  - Dynamic resource allocation
  - Edge computing integration

Model ecosystem

- Hierarchical model architecture:
  - Task-specific small language models (SLMs)
  - General-purpose large language models (LLMs)
  - Specialized multimodal models
  - Large action models (LAMs)
- Model composition and coordination
- Continuous learning and adaptation
- Standardized model interfaces and protocols

Coordination Framework

- Cryptographic protocols that ensure secure agent interactions
- Digital property management systems
- Economic incentive structures
- Governance mechanisms for dispute resolution, resource allocation, and protocol updates
- Parallel execution environments that support:
  - Concurrent task processing
  - Resource isolation
  - State management
  - Conflict resolution

Agent market

- On-chain primitives for identity (e.g. Gnosis, Squad multisigs)
- Economics and transactions between agents
- Agents have liquidity
  - Agents own a certain percentage of tokens at genesis
  - Aggregated inference markets paid for through liquidity
  - On-chain keys that control off-chain accounts
- Agents become yield-bearing assets
  - Agent DAOs
  - Governance and dividends
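Of the primitives above, the "result reproducibility" property of verifiable inference is the easiest to illustrate. The sketch below uses the most naive approach, a published hash commitment checked by re-executing the model. It is only a stand-in for real proof-of-computation systems: `toy_model`, `commitment`, and `verify` are hypothetical names, and the model is a deterministic placeholder.

```python
import hashlib
import json

def commitment(model_id, prompt, output):
    """Hash of the (model, input, output) triple that a provider could publish."""
    payload = json.dumps([model_id, prompt, output]).encode()
    return hashlib.sha256(payload).hexdigest()

def toy_model(prompt):
    """Deterministic placeholder for inference (it just reverses the prompt)."""
    return prompt[::-1]

def verify(model_id, prompt, claimed_output, published):
    """Re-run the model and check both the output and the published commitment."""
    reproduced = toy_model(prompt) == claimed_output
    return reproduced and commitment(model_id, prompt, claimed_output) == published

honest = commitment("toy-v1", "abc", "cba")
forged = commitment("toy-v1", "abc", "xyz")
```

Re-execution costs the verifier as much as the original inference, which is the motivation for cheaper cryptographic proofs; it also only works when inference is deterministic.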

Creating a superstructure for intelligence

Modern distributed system design provides unique inspiration and primitives for implementing agent protocols, especially event-driven architectures and, more directly, the actor model of computation.

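The actor model provides an elegant theoretical foundation for implementing agent systems. This computational model treats "actors" as the universal primitives of computation. In response to a message it receives, each actor can make local decisions, create more actors, send messages to other actors, and determine how to respond to the next message it receives.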

The key advantages that the actor model brings to agent systems include:

Isolation: Each actor operates independently, maintaining its own state and control flow.

Asynchronous communication: Message passing between actors is non-blocking, allowing efficient parallel processing.

Location transparency: Actors can communicate with each other without being restricted by physical location.

Fault tolerance: Resilience of the system is achieved through isolation of actors and supervision hierarchies.

Scalability: Naturally supports distributed systems and parallel computing.
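The isolation and asynchronous messaging properties listed above can be seen in a very small actor built on `asyncio`. This is a sketch for intuition, not a production actor framework: `CounterActor` and its message names are invented for the example, and supervision and location transparency are left out.

```python
import asyncio

class CounterActor:
    """An actor with private state and a mailbox; it handles one message at a time."""

    def __init__(self):
        self.mailbox = asyncio.Queue()
        self._count = 0  # private state, changed only by the actor's own loop

    async def run(self):
        while True:
            kind, reply_to = await self.mailbox.get()
            if kind == "stop":
                break
            if kind == "increment":
                self._count += 1
            elif kind == "get":
                reply_to.set_result(self._count)  # answer by message, not shared memory

async def demo():
    actor = CounterActor()
    runner = asyncio.create_task(actor.run())
    for _ in range(3):
        actor.mailbox.put_nowait(("increment", None))  # non-blocking send
    reply = asyncio.get_running_loop().create_future()
    actor.mailbox.put_nowait(("get", reply))
    count = await reply
    actor.mailbox.put_nowait(("stop", None))
    await runner
    return count

final_count = asyncio.run(demo())
```

Senders never touch `_count` directly; they can only enqueue messages, which is what lets many such actors run side by side without locks.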

We propose Neuron, a practical scheme for implementing this theoretical agent protocol through a multi-layer distributed architecture. The architecture combines blockchain namespaces, federated networks, CRDTs (Conflict-free Replicated Data Types), and DHTs (Distributed Hash Tables), with each layer serving a different function in the protocol stack. We draw inspiration from Urbit and Holochain, early pioneers of peer-to-peer operating system design.

In Neuron, the blockchain layer provides verifiable namespaces and authentication, allowing deterministic addressing and discovery of agents while maintaining cryptographic proofs of capabilities and reputation. On top of this, the DHT layer provides efficient agent and node discovery as well as content routing, with O(log n) lookup time, reducing the number of on-chain operations while enabling locality-aware peer discovery. CRDTs handle state synchronization between federated nodes, allowing agents and nodes to maintain a consistent shared state without requiring global consensus for every interaction.
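To show why CRDTs can synchronize state without global consensus, here is a sketch of one of the simplest CRDTs, a grow-only counter (G-Counter). It is a generic textbook example rather than Neuron's actual data model, which the article does not specify; the node names are placeholders.

```python
def increment(state, node_id, amount=1):
    """Return a new G-Counter state with `node_id`'s own slot increased."""
    new = dict(state)
    new[node_id] = new.get(node_id, 0) + amount
    return new

def merge(a, b):
    """Join two replicas by taking the per-node maximum."""
    return {k: max(a.get(k, 0), b.get(k, 0)) for k in a.keys() | b.keys()}

def value(state):
    """The counter's value is the sum of all per-node slots."""
    return sum(state.values())

replica_a = increment(increment({}, "n1"), "n1")  # node n1 counted twice
replica_b = increment({}, "n2")                   # node n2 counted once
```

Because `merge` is commutative, associative, and idempotent, replicas can exchange state in any order, any number of times, and still converge to the same value.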

This architecture maps naturally to a federated network, where autonomous agents run as sovereign nodes on devices with local edge inference capabilities, implementing the actor model. Federated domains can be organized based on the capabilities of the agents, and the DHT provides efficient routing and discovery within and between domains. Each agent operates as an independent actor with its own state, while the CRDT layer ensures eventual consistency across the federation. This multi-layer architecture enables several key capabilities:

This implementation provides a solid foundation for building complex agent systems while maintaining key properties such as sovereignty, scalability, and resilience to ensure effective interactions between agents.

Concluding Thoughts

The Agentic Web marks a key evolution in human-machine interaction, transcending the linear development of previous eras and establishing a new paradigm for digital existence. Unlike past iterations that simply changed how we consume or own information, the Agentic Web transforms the Internet from a human-centric platform to an intelligent infrastructure layer where autonomous agents become the primary actors. This transformation is driven by the convergence of edge computing, large language models, and decentralized protocols, creating an ecosystem where personal AI models seamlessly interface with specialized expert systems.

As we move toward this agent-centric future, the boundaries between human and machine intelligence begin to blur, replaced by a symbiotic relationship in which personalized AI agents act as our digital extensions, understanding our context, anticipating our needs, and autonomously navigating a vast distributed intelligence landscape.

As a result, the Agentic Web is not only a technological advancement, but also a fundamental reimagining of human potential in the digital age, where every interaction becomes an opportunity for augmented intelligence and every device becomes a node in a global network of collaborative AI systems.

Just as humans navigate in the physical dimensions of space and time, autonomous agents exist in their own fundamental dimensions: blockspace for existence and inference time for thinking. This digital ontology mirrors our physical reality - humans travel across distances and experience the flow of time, while agents "move" through cryptographic proofs and computational cycles, creating a parallel universe of algorithmic existence.

It is inevitable that entities living in latent space will come to operate in decentralized blockspace.
