A key problem facing Web3 today is that the rights confirmation and exchange of massive data assets remain unsolved. Any economic activity around data assets requires that data rights first be confirmed, and data rights can only be confirmed once consensus on the data exists. The combination of Arweave permanent storage and the AO super-parallel computer may solve this key problem and thereby accelerate the arrival of the Web3 value Internet!

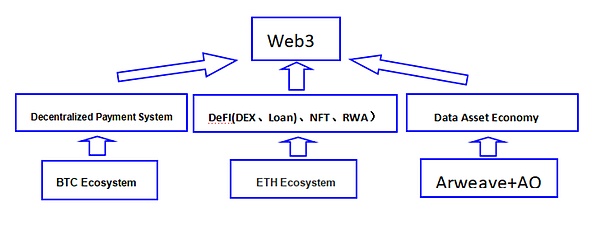

One of the most important features of Web3 is that users control their own data, in contrast to Web2, where Internet giants control user data. The blockchain technology pioneered by BTC frees us from intermediaries such as traditional banks and online banks, letting users control their own electronic cash and trade it peer-to-peer. ETH and other smart-contract public chains let users control various contracts and derivative assets and trade them peer-to-peer.

Beyond financial assets, however, the Internet holds many other kinds of data assets, and there is not yet a mature solution that lets users control these assets and trade them peer-to-peer. As a result, Web3 users still do not have full control over their data. The reason is that we lack infrastructure for data rights confirmation. If users are to control their own data, data rights must be confirmed, and for data rights to be confirmed, consensus on the data must be reached. Because data exists in both a dynamic state (in transit) and a static state (at rest), consensus must be reached at both the transmission end and the storage end. Only then can consensus on data assets be established and data rights be confirmed.

In short: only when consensus is reached can data rights be confirmed; only when data rights are confirmed can data be exchanged or traded; and only when data is exchanged or traded can its value be realized, which is what makes a value Internet possible. One important reason for the severe data-silo problem in Web2 is that data rights are never confirmed. Arweave permanent storage plus the AO super-parallel computer may change this by helping us reach consensus at both the storage end and the transmission end of data, as shown in the figure:

After several years of development, Arweave permanent storage has achieved consensus on data storage. (Note: this is well documented elsewhere, so I will not go into detail here.) Next, let's focus on how the ao super-parallel computer reaches consensus at the transmission end. (Note: many articles on ao mention that ao stores the holographic state of each process on Arweave, but I have found almost none that explain how this is actually implemented. The idea can be stated in a sentence or two, so I want to lay out the general implementation path clearly here.)

To reach consensus at the transmission end, the integrity, consistency, and verifiability of the data must be guaranteed, along with transmission efficiency. Before describing the mechanisms, let me introduce the design principles of ao's economic model, which show from a top-level design perspective how ao keeps data secure. A passage in the ao white paper says, in effect:

The typical economic model of blockchain networks such as Bitcoin, Ethereum, and Solana revolves around purchasing scarce block space, with security subsidized as a by-product. Users pay transaction fees to incentivize miners or validators to include their transactions in the blockchain. However, this model inherently relies on the scarcity of block space to drive fee revenue, which in turn funds network security. Take Bitcoin's security architecture, which is fundamentally based on block rewards and transaction fees, and consider a hypothetical scenario in which block rewards are eliminated and transaction throughput is infinitely scalable. The scarcity of block space would effectively disappear, and transaction fees would fall to a minimum. Network participants would then have far weaker financial incentives to maintain security, leaving transactions more vulnerable to potential security threats. Solana exemplifies this theoretical model in practice: as network scalability increases, fee revenue decreases accordingly. Without large transaction fees, the main source of security funding is block rewards. These rewards are essentially a tax on token holders, paid as operational overhead by those who choose to stake their tokens themselves, or as a gradual dilution of proportional ownership in the network by those who do not stake. Earlier, we proposed the need for the $AO token to serve as a unified representation of economic value that supports security mechanisms within the network.

From this passage we can see that ao's economic model differs significantly from those of other mainstream blockchains. It is focused mainly on protecting network security, because non-financial data assets require underlying infrastructure that is both secure and efficient.

Non-financial data assets come in many types, and trading each type places different demands on system security, scalability, and timeliness. ao's security model must therefore be flexible; it cannot rely on a single, uniform consensus mechanism the way traditional blockchains do. If ao adopted such a model, it would waste enormous computing resources and severely limit the scalability of the ao system.

ao therefore lets customers customize security mechanisms according to the type and value of their data, and the economic model plays an important regulating role here. Put simply, high-value data can be transmitted under high-level security mechanisms, while low-value data can use security models with lower security costs. This saves computing resources and adapts to the security needs of different data categories. It also explains why blockchains such as Ethereum, Bitcoin, and Solana are poorly suited to Web3 data transmission: their security model is uniform rather than customizable, which does not match the transmission characteristics of non-financial data assets. Below, we analyze in detail how ao's economic model and security model adjust to each other.

1. Maintain data consistency, integrity and verifiability:

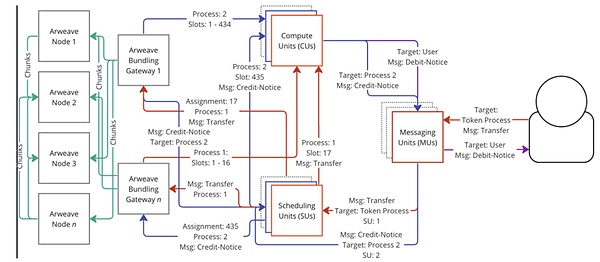

a. Technical guarantee: In the ao super-parallel computer, message passing is the core mechanism that lets the different units (such as MU, SU, and CU) communicate and collaborate effectively. The main steps of message passing are as follows:

Message generation: Users or processes initiate interaction requests by creating messages. These messages must conform to the format specified by the ao protocol in order to be transmitted and processed correctly in the network.

Messenger unit (MU) reception and forwarding: The messenger unit (MU) receives messages generated by users or processes and relays them to the appropriate SU node in the network. The MU manages message routing so that each message reaches the correct SU, and during this process it digitally signs the messages to ensure data integrity.

Scheduler unit (SU) processing: When a message arrives at an SU node, the SU assigns it a unique, incrementing nonce so that messages within a process are ordered consistently, and uploads the messages and their assignments to the Arweave data layer for permanent storage.

Computing unit (CU) processing: After receiving a message, the computing unit (CU) performs the computation the message specifies. When the computation is complete, the CU produces a signed proof of the message's result and returns it to the SU. This signed proof makes the result verifiable and attests to its correctness. The workflow is shown in the figure:

(Note: This picture is from the ao white paper)
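To make the MU → SU → CU flow above concrete, here is a minimal Python sketch. It is not ao's implementation: the class names are hypothetical, HMAC-SHA256 stands in for the real (public-key) signature scheme, a plain list stands in for the Arweave data layer, and the "process" is a toy running counter.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass, field

def sign(key: bytes, payload: dict) -> str:
    """HMAC-SHA256 over canonical JSON; a stand-in for real digital signatures."""
    data = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(key, data, hashlib.sha256).hexdigest()

@dataclass
class MessengerUnit:
    key: bytes

    def forward(self, msg: dict) -> dict:
        # The MU signs the message before relaying it to the SU.
        return {"msg": msg, "mu_sig": sign(self.key, msg)}

@dataclass
class SchedulerUnit:
    log: list = field(default_factory=list)         # stand-in for Arweave storage
    next_nonce: dict = field(default_factory=dict)  # per-process counter

    def assign(self, envelope: dict) -> dict:
        # Give each message in a process a unique, incrementing nonce.
        pid = envelope["msg"]["process"]
        nonce = self.next_nonce.get(pid, 0)
        self.next_nonce[pid] = nonce + 1
        entry = {**envelope, "nonce": nonce}
        self.log.append(entry)  # "upload" the message and its assignment
        return entry

@dataclass
class ComputeUnit:
    key: bytes
    state: dict = field(default_factory=dict)

    def compute(self, entry: dict) -> dict:
        # Toy process logic: add the message amount to the process state.
        pid = entry["msg"]["process"]
        self.state[pid] = self.state.get(pid, 0) + entry["msg"]["amount"]
        result = {"process": pid, "nonce": entry["nonce"], "state": self.state[pid]}
        return {"result": result, "cu_sig": sign(self.key, result)}

mu, su, cu = MessengerUnit(b"mu-key"), SchedulerUnit(), ComputeUnit(b"cu-key")
for amount in (5, 7):
    proof = cu.compute(su.assign(mu.forward({"process": "p1", "amount": amount})))
print(proof["result"])  # {'process': 'p1', 'nonce': 1, 'state': 12}
```

Each unit only does its own job (sign, order, compute), which mirrors the separation of roles the white paper describes.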

In addition, the core principle of the ao super-parallel computer is the decoupling of computation from consensus. ao itself does not verify messages; instead, it makes all messages and states verifiable by storing the holographic state of every process on Arweave. Anyone can check the consistency of messages through Arweave, which means anyone can question the correctness of an ao message and initiate a challenge through Arweave to verify it. On the one hand, this frees ao from a constraint of traditional blockchains, where every node redundantly performs the same computation and verification. That redundancy strengthens system security, but it consumes a great deal of computing resources and limits scalability; in Ethereum, for example, adding more nodes does not increase processing speed. Because ao avoids this, it can scale to a high degree. On the other hand, all data remains verifiable. This is the ingenuity of ao's design: it moves the cost of verification off-chain while keeping everything verifiable.
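The "anyone can challenge" idea rests on deterministic replay: if the ordered message log is permanent and the process logic is deterministic, any party can recompute the state and compare it with a claimed result. The sketch below assumes a toy counter process and SHA-256 state hashes; the function names are illustrative, not part of any ao API.

```python
import hashlib
import json

def apply(state: int, msg: dict) -> int:
    """Hypothetical deterministic process logic: a running counter."""
    return state + msg["amount"]

def replay(log: list[dict]) -> int:
    """Recompute the process state from the ordered message log."""
    state = 0
    for entry in sorted(log, key=lambda e: e["nonce"]):
        state = apply(state, entry["msg"])
    return state

def state_hash(state: int) -> str:
    return hashlib.sha256(json.dumps(state).encode()).hexdigest()

def challenge(log: list[dict], claimed_hash: str) -> bool:
    """Return True if the claimed result is wrong, i.e. the challenge succeeds."""
    return state_hash(replay(log)) != claimed_hash

log = [{"nonce": 1, "msg": {"amount": 7}}, {"nonce": 0, "msg": {"amount": 5}}]
print(challenge(log, state_hash(12)))  # False: the claim matches the replay
print(challenge(log, state_hash(13)))  # True: the claim is refuted
```

Verification here costs one replay by whoever cares to check, rather than every node re-executing every message, which is the trade-off the paragraph above describes.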

b. Economic model guarantee: The above is the general process of message passing in the ao super-parallel computer. In addition, all three types of nodes (MU, SU, and CU) must stake $AO, and the white paper specifies responses to various failures these nodes may exhibit. For example, if an MU is found not to have signed a message, or to have produced an invalid signature, the system slashes the MU's staked assets; if an MU discovers that a CU has provided an invalid proof, the system likewise slashes the CU's stake. The ao white paper sets out specific economic penalties for the various problems that can arise at MU, SU, and CU nodes, so that none of the three types of nodes has an incentive to act maliciously. ao also allows an MU to assemble proofs from multiple CUs through an equity aggregation mechanism, improving the integrity and credibility of information transmission (for details, see sections 5.6, "ao sec origin process", and 5.5.3, "Equity collection", of the ao white paper).
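The stake-and-slash rule described above can be sketched as a small registry. This is an illustration only: the 50% slash fraction, the `StakeRegistry` and `audit` names, and the idea of passing a boolean "evidence verified" flag are all assumptions; the white paper defines its own penalties and evidence checks.

```python
from dataclasses import dataclass, field

@dataclass
class StakeRegistry:
    """Hypothetical registry of $AO stakes held by MU, SU and CU nodes."""
    slash_fraction: float = 0.5  # assumed penalty rate, not a white-paper value
    stakes: dict = field(default_factory=dict)
    events: list = field(default_factory=list)

    def stake(self, node: str, amount: float) -> None:
        self.stakes[node] = self.stakes.get(node, 0.0) + amount

    def slash(self, node: str, reason: str) -> float:
        """Remove a fraction of the node's stake and record why."""
        penalty = self.stakes.get(node, 0.0) * self.slash_fraction
        self.stakes[node] = self.stakes.get(node, 0.0) - penalty
        self.events.append((node, reason, penalty))
        return penalty

def audit(registry: StakeRegistry, node: str, evidence_ok: bool, reason: str) -> float:
    """Slash the node only when its evidence (an MU signature or a CU proof) fails."""
    return 0.0 if evidence_ok else registry.slash(node, reason)

registry = StakeRegistry()
registry.stake("mu-1", 1000.0)
registry.stake("cu-1", 1000.0)
audit(registry, "mu-1", evidence_ok=False, reason="missing or invalid MU signature")
audit(registry, "cu-1", evidence_ok=True, reason="")
print(registry.stakes)  # {'mu-1': 500.0, 'cu-1': 1000.0}
```

The point of the design is that misbehavior has a direct, recorded cost to the node's own collateral.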

In addition, the SIV sub-staking consensus mechanism lets users reach full or partial consensus on results: the client can set the number of participants or verifiers itself, and thereby control how much cost and latency consensus adds.
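A minimal sketch of the cost/latency trade-off, assuming a simple model that is not taken from the white paper: the client queries `n` verifiers, pays each a flat fee, and accepts a result once `quorum` of them report the same result hash. All names and numbers are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

@dataclass
class Response:
    verifier: str
    result_hash: str   # hash of the result this verifier computed
    latency_ms: float  # time until this verifier answered

def verify(responses: list[Response], n: int, quorum: int,
           fee_per_verifier: float) -> tuple[Optional[str], Optional[float], float]:
    """Query n verifiers and accept a result once `quorum` of them agree.

    Returns (agreed_hash, latency_until_quorum, total_cost); the hash and
    latency are None if the chosen verifiers never reach the quorum.
    """
    chosen = responses[:n]                   # the client decides how many to query
    cost = len(chosen) * fee_per_verifier    # assumption: every queried verifier is paid
    votes: Counter = Counter()
    for resp in sorted(chosen, key=lambda x: x.latency_ms):  # answers in arrival order
        votes[resp.result_hash] += 1
        if votes[resp.result_hash] >= quorum:
            return resp.result_hash, resp.latency_ms, cost
    return None, None, cost

responses = [Response("v1", "abc", 40.0), Response("v2", "abc", 90.0),
             Response("v3", "xyz", 60.0), Response("v4", "abc", 150.0),
             Response("v5", "abc", 300.0)]
print(verify(responses, n=1, quorum=1, fee_per_verifier=2.0))  # ('abc', 40.0, 2.0)
print(verify(responses, n=5, quorum=4, fee_per_verifier=2.0))  # ('abc', 300.0, 10.0)
```

A low-value message can settle for one fast, cheap answer; a high-value one can demand broad agreement and accept the extra cost and waiting time.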

To sum up, the ao super-parallel computer ensures the integrity, consistency, and verifiability of data through a combination of technical and economic models. And because different types of data need different levels of security, ao provides a flexibly customizable security model.

2. Prevent data leakage:

By introducing an economic staking model, ao encourages MU, SU, and CU nodes to strengthen their security measures, and it keeps data secure while preserving flexibility through mechanisms such as security-level purchasing, a stake exclusivity period, and the time value of stake. Roughly, it works like this: customers can insure the messages they purchase. The price of this insurance depends on the value of the message, the stakers' expected rate of return, the period for which the message's security is guaranteed, and other factors. On the one hand, this secures data transmission and motivates stakers to provide stronger security guarantees; on the other hand, if a message leaks, it protects the interests of the message's recipient. Both buyers and sellers of messages can therefore reach consensus, which promotes trading of data assets.
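The article lists the factors that affect the insurance price but not a formula, so the pricing rule below is purely an assumption for illustration: the premium is modeled as the staker's opportunity cost of locking collateral equal to the message value for the guarantee period, and a leak inside that period pays out the full insured value.

```python
from dataclasses import dataclass

@dataclass
class Policy:
    """Hypothetical message-insurance policy; not ao's actual pricing model."""
    message_value: float         # value the buyer wants covered
    staker_annual_return: float  # e.g. 0.08 for an 8% expected yearly return
    guarantee_days: int          # how long the message's security is guaranteed

    def premium(self) -> float:
        """Assumed rule: opportunity cost of locking collateral worth the message
        value for the guarantee period."""
        return self.message_value * self.staker_annual_return * self.guarantee_days / 365

    def payout(self, leaked: bool, day: int) -> float:
        """Compensate the receiver in full if a leak occurs within the covered period."""
        return self.message_value if leaked and day <= self.guarantee_days else 0.0

policy = Policy(message_value=10_000.0, staker_annual_return=0.08, guarantee_days=73)
print(policy.premium())                      # 160.0
print(policy.payout(leaked=True, day=30))    # 10000.0
print(policy.payout(leaked=True, day=100))   # 0.0
```

Under this assumption the premium rises with message value, staker yield, and coverage length, which matches the qualitative factors the article names.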

In addition, ao has partnered with PADO. Users can encrypt their data with PADO's zkFHE technology and store it securely on Arweave, and because Arweave is itself decentralized, there is no single point of failure. Through these mechanisms, data can be fully protected during both transmission and storage.

3. Ensure data transmission efficiency:

Unlike networks such as Ethereum, where the base layer and each rollup effectively run as a single process, ao supports any number of processes running in parallel while keeping computation verifiable. Moreover, those networks operate on a globally synchronized state, whereas each ao process maintains its own independent state. This independence lets ao processes handle far more interactions and keep computation scalable, which makes ao especially suitable for applications that demand high performance and reliability.
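The contrast between one globally synchronized state and many independent process states can be illustrated with a short sketch. Each hypothetical process below folds only its own inbox into its own state, so processes can run concurrently without coordinating. Python threads are used only to show independence; they do not give true CPU parallelism because of the GIL.

```python
from concurrent.futures import ThreadPoolExecutor

def run_process(pid: str, messages: list[int]) -> tuple[str, int]:
    """Fold one process's own inbox into its own state; no shared global state."""
    state = 0
    for amount in messages:
        state += amount
    return pid, state

def run_all(inboxes: dict[str, list[int]]) -> dict[str, int]:
    """Run every process concurrently; results do not depend on scheduling order."""
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(run_process, pid, msgs) for pid, msgs in inboxes.items()]
        return dict(f.result() for f in futures)

inboxes = {"p1": [1, 2, 3], "p2": [10, 20], "p3": [100]}
print(run_all(inboxes))  # {'p1': 6, 'p2': 30, 'p3': 100}
```

Because no process reads another's state, adding processes adds throughput instead of adding coordination work.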

In addition, because each ao process is holographically projected onto Arweave, the message log on Arweave can in turn drive the execution of the ao process. If a process is interrupted, it can be restarted immediately from Arweave. This prevents a single point of failure and restores the process state as quickly as possible, keeping message delivery efficient.
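Restarting from Arweave amounts to rebuilding the process by replaying its permanent, ordered message log. The sketch below assumes a toy counter process and uses a Python list as a stand-in for the Arweave log; the `Process` and `restore` names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Process:
    """Hypothetical process whose state lives in memory; the log is the source of truth."""
    state: int = 0
    processed: int = 0  # number of log entries applied so far

    def apply(self, entry: dict) -> None:
        self.state += entry["amount"]
        self.processed += 1

def restore(log: list[dict]) -> Process:
    """Rebuild a fresh process by replaying the permanent message log in nonce order."""
    proc = Process()
    for entry in sorted(log, key=lambda e: e["nonce"]):
        proc.apply(entry)
    return proc

arweave_log = [{"nonce": i, "amount": a} for i, a in enumerate([4, 8, 15, 16])]

live = Process()
for entry in arweave_log[:3]:
    live.apply(entry)
state_before_crash = live.state  # 27
del live                         # simulate losing the running instance

recovered = restore(arweave_log[:3])
assert recovered.state == state_before_crash
recovered.apply(arweave_log[3])  # resume with the next message
print(recovered.state)  # 43
```

Since the log, not the running instance, is authoritative, any node holding the log can bring the process back to exactly where it stopped.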

This article has explained, in simple terms, how ao ensures the integrity, consistency, verifiability, efficiency, and leak resistance of message transmission. When these properties are guaranteed, data consensus can be reached at the transmission end. On the storage side, Arweave has operated for several years, providing permanent data storage and ensuring data consensus at the storage end. The combination of Arweave permanent storage and the ao super-parallel computer may therefore solve the consensus problem for large volumes of data at both the storage and transmission ends.

If this problem is solved, the change will be revolutionary: massive amounts of non-financial data assets will be able to reach consensus, greatly accelerating the rights confirmation of Web3 data assets. Only after the rights of data assets are confirmed can large-scale economic activity emerge, and only then can a true value Internet be realized.

BTC has solved rights confirmation and trading for electronic cash, allowing each of us to control our own electronic cash. Ethereum, through smart contracts and its blockchain, has solved rights confirmation and trading for a wide range of financial assets. Arweave permanent storage plus the ao super-parallel computer may help solve rights confirmation and trading for data assets. This article focuses on data asset consensus because it is the key to data asset ownership and trading. Personally, I think Arweave permanent storage plus the ao super-parallel computer could stand alongside BTC and Ethereum as a good complement to them, jointly solving Web3's key problems and helping us move toward the Internet of Value, as shown in the figure:

Project risks:

Connectivity between ao and Arweave: ao's hyper-parallel computing may generate enormous throughput and put heavy pressure on Arweave, which could prevent messages from being holographically projected or cause other system instability.

MU, SU, and CU are the key nodes of the ao system and may become centralized, which could lead to corruption and project instability. Ideally, ao would establish a decentralized reputation system that lets DAO members evaluate the quality of these three types of nodes themselves, creating a fair and open evaluation and competition mechanism for them.

Arweave focuses on permanent data storage, and as it grows it may face scrutiny from various governments. It is not clear whether the team has strategies or solutions for this; that remains to be seen.

The economic model has yet to be proven: ao's security model depends heavily on its economic model. Although ao also secures some data through the technical means described above, if the economic model does not work well, security at each staking link will weaken and data security cannot be guaranteed. ao's security model also differs from that of traditional blockchains. The core principle of a blockchain is to secure the network by making attacks very costly: the economic model, consensus mechanism, longest-chain rule, and so on are combined with mathematical principles so that an attacker's losses exceed their gains, which gives strong security guarantees. The ao security model, by contrast, follows the same design principle as traditional Web2 security: it resists external attacks by strengthening defenses, and the strength of those defenses is tuned largely through ao's economic model, which gives each staking node more motivation and pressure to improve security. If the economic model is poorly designed, the consequences for the ao project could be fatal.

Of course, the above analysis covers only the technical and economic models. Assessing a project's potential also requires considering factors such as the team's technical strength and overall background, the state of the project's ecosystem, and the potential of its sector. I will discuss these aspects in later articles.

JinseFinance
