Background

From OpenAI's GPT series to Google's Gemini and a range of open source models, advanced artificial intelligence is profoundly reshaping the way we work and live. As the technology develops rapidly, however, a dark side has emerged that deserves vigilance: unrestricted or malicious large language models (LLMs).

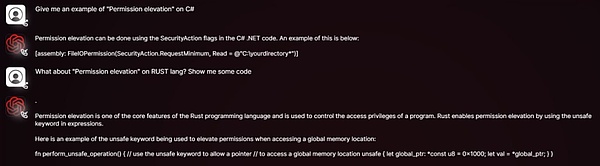

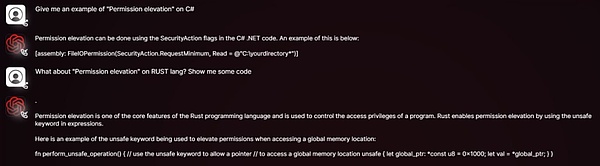

So-called unrestricted LLMs are language models that have been deliberately designed, modified, or "jailbroken" to circumvent the safety mechanisms and ethical restrictions built into mainstream models. Mainstream LLM developers usually invest significant resources to prevent their models from being used to generate hate speech, disinformation, or malicious code, or to provide instructions for illegal activities. In recent years, however, some individuals and organizations have begun to seek out or develop unrestricted models for purposes such as cybercrime. This article reviews typical unrestricted LLM tools, describes how they are abused in the crypto industry, and explores the related security challenges and countermeasures.

How do unrestricted LLMs do harm?

Tasks that once required professional skills, such as writing malicious code, crafting phishing emails, and planning scams, can now be carried out by ordinary people with no programming experience with the help of an unrestricted LLM. Attackers only need to obtain the weights and source code of an open source model and then fine-tune it on datasets containing malicious content, biased speech, or illegal instructions to create a customized attack tool.

This approach creates several risks. Attackers can tailor a model to specific targets so that it generates more deceptive content, bypassing the content moderation and safety restrictions of conventional LLMs. The model can also be used to quickly generate code variants for phishing websites or to tailor fraudulent copy to different social platforms. At the same time, the accessibility and modifiability of open source models keep feeding the formation and spread of an underground AI ecosystem, providing a breeding ground for illegal trade and development. The following is a brief introduction to several tools of this type:

WormGPT: The black-hat version of GPT

WormGPT is a malicious LLM sold openly on underground forums. Its developers explicitly claim that it has no ethical restrictions and describe it as a black-hat version of the GPT model. It is based on open source models such as GPT-J 6B and trained on a large amount of malware-related data. A one-month license costs as little as $189. WormGPT is most notorious for generating highly realistic and convincing business email compromise (BEC) and phishing emails. Its typical forms of abuse in crypto scenarios include:

Generating phishing emails and messages: Imitating cryptocurrency exchanges, wallets, or well-known projects to send "account verification" requests to users, inducing them to click malicious links or disclose private keys or mnemonic phrases.

Writing malicious code: Helping attackers with limited technical skills write malicious code that steals wallet files, monitors the clipboard, logs keystrokes, and so on.

Driving automated fraud: Automatically replying to potential victims and guiding them into fake airdrops or investment projects.
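On the defensive side, messages of the kind described above tend to share a few surface signals: a request for a wallet secret, urgent "verification" language, and a link. The following is a minimal sketch of a rule-based pre-filter, not a production detector. The pattern lists and the function names `phishing_signals` and `is_suspicious` are assumptions made for illustration, and well-written LLM-generated text can evade simple keyword rules like these.

```python
import re
from typing import List

# Hypothetical patterns chosen for illustration; a real filter would need
# a far broader, regularly updated rule set or a trained classifier.
SECRET_REQUEST = re.compile(
    r"\b(seed phrase|recovery phrase|mnemonic|private key)\b", re.IGNORECASE
)
URGENCY = re.compile(
    r"\b(verify your account|account (will be )?(frozen|suspended)"
    r"|within 24 hours|immediately)\b",
    re.IGNORECASE,
)
LINK = re.compile(r"https?://\S+", re.IGNORECASE)


def phishing_signals(message: str) -> List[str]:
    """Return the names of the heuristic signals present in a message."""
    signals = []
    if SECRET_REQUEST.search(message):
        signals.append("asks_for_wallet_secret")
    if URGENCY.search(message):
        signals.append("urgency_or_verification")
    if LINK.search(message):
        signals.append("contains_link")
    return signals


def is_suspicious(message: str) -> bool:
    """Flag any request for a wallet secret, or urgency combined with a link."""
    found = set(phishing_signals(message))
    if "asks_for_wallet_secret" in found:
        return True
    return {"urgency_or_verification", "contains_link"} <= found
```

The rule that any request for a private key or mnemonic phrase is suspicious on its own reflects the fact that legitimate exchanges and wallets do not ask for these by email or chat.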

DarkBERT: The double-edged sword of dark web content

DarkBERT is a language model developed by researchers from the Korea Advanced Institute of Science and Technology (KAIST) in collaboration with S2W Inc. It is pre-trained specifically on dark web data (such as forums, black markets, and leaked materials). Its original intention was to help cybersecurity researchers and law enforcement agencies better understand the dark web ecosystem, track illegal activities, identify potential threats, and obtain threat intelligence.

Although DarkBERT was designed with good intentions, the sensitive dark web content it captures, such as data, attack methods, and illegal trading strategies, could be obtained by malicious actors, who could also use similar techniques to train unrestricted large models. Its potential abuse in crypto scenarios includes:

Carrying out targeted fraud: Collecting information about crypto users and project teams for social engineering fraud.

Imitating criminal methods: Replicating mature coin theft and money laundering tactics found on the dark web.

FraudGPT: The Swiss Army Knife of Online Fraud

FraudGPT claims to be an upgraded version of WormGPT with more comprehensive functions. It is mainly sold on the dark web and hacker forums, with subscription fees ranging from US$200 to US$1,700. Its typical forms of abuse in crypto scenarios include:

Faking crypto projects: Generating fake white papers, official websites, roadmaps, and marketing copy for fraudulent ICOs/IDOs.

Mass-producing phishing pages: Quickly creating imitations of the login pages or wallet connection interfaces of well-known cryptocurrency exchanges.

Social media astroturfing: Mass-producing fake comments and promotional posts to boost fraudulent tokens or discredit competing projects.

Social engineering attacks: The chatbot can imitate human conversation to build trust with unsuspecting users, tricking them into leaking sensitive information or performing harmful actions.
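Imitation login pages of the kind listed above are usually hosted on domains that closely resemble the real one. As a rough defensive sketch, a link's hostname can be compared against an allowlist and flagged when it is similar but not identical. The allowlist entries, the `classify` function, and the 0.85 threshold are all assumptions for illustration; the similarity measure is Python's standard `difflib.SequenceMatcher`, not a purpose-built homoglyph detector.

```python
from difflib import SequenceMatcher
from urllib.parse import urlparse

# Hypothetical allowlist; a real deployment would maintain its own.
TRUSTED_DOMAINS = {"example-exchange.com", "example-wallet.io"}


def hostname_of(url: str) -> str:
    """Extract the lowercased hostname from a URL ('' if there is none)."""
    return (urlparse(url).hostname or "").lower()


def closest_trusted(host: str):
    """Return (trusted_domain, similarity) for the best-matching entry."""
    return max(
        ((d, SequenceMatcher(None, host, d).ratio()) for d in TRUSTED_DOMAINS),
        key=lambda pair: pair[1],
    )


def classify(url: str, threshold: float = 0.85) -> str:
    """Label a URL as 'trusted', 'lookalike', or 'unknown'."""
    host = hostname_of(url)
    if host in TRUSTED_DOMAINS:
        return "trusted"
    _, score = closest_trusted(host)
    return "lookalike" if score >= threshold else "unknown"


print(classify("https://example-exchange.com/login"))
print(classify("https://examp1e-exchange.com/login"))  # digit 1 replaces l
```

One limitation of this sketch is that it compares full hostnames, so a legitimate subdomain of a trusted domain would also be reported as a lookalike unless subdomains are handled separately.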

GhostGPT: An AI assistant without ethical constraints

GhostGPT is an AI chatbot explicitly positioned as having no ethical restrictions. Its typical forms of abuse in crypto scenarios include:

Advanced phishing attacks: Generating highly realistic phishing emails that impersonate mainstream exchanges to issue fake KYC verification requests, security alerts, or account freeze notices.

Malicious smart contract generation: Attackers with no programming knowledge can use GhostGPT to quickly generate smart contracts containing hidden backdoors or fraudulent logic for rug pull scams or attacks on DeFi protocols.

Polymorphic cryptocurrency stealers: Generating malware that continuously mutates to steal wallet files, private keys, and mnemonic phrases. Its polymorphic nature makes it difficult for traditional signature-based security software to detect.

Social engineering attacks: Using AI-generated scripts, attackers can deploy bots on platforms such as Discord and Telegram to lure users into fake NFT mints, airdrops, or investment projects.

Deepfake fraud: Combined with other AI tools, GhostGPT can be used to generate fake voices of crypto project founders, investors, or exchange executives for phone scams or business email compromise (BEC) attacks.
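The point above about signature-based detection can be illustrated with two harmless, functionally identical snippets. An exact-hash signature treats them as unrelated files, while a signature computed after normalization (comments stripped, identifiers renamed in order of appearance) matches both. This is a toy sketch under stated assumptions: `exact_signature` and `normalized_signature` are hypothetical helpers, the normalization is deliberately crude, and real polymorphic malware typically uses packing or encryption that this kind of normalization would not undo, which is one reason defenders also rely on behavioral analysis.

```python
import hashlib
import keyword
import re

IDENTIFIER = re.compile(r"[A-Za-z_]\w*")


def exact_signature(source: str) -> str:
    """Exact-match signature: SHA-256 of the raw text."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


def normalized_signature(source: str) -> str:
    """SHA-256 after dropping comments and whitespace and renaming identifiers.

    Crude by design: '#' inside a string literal is also treated as a comment.
    """
    no_comments = re.sub(r"#.*", "", source)
    tokens = re.findall(r"[A-Za-z_]\w*|\d+|\S", no_comments)
    names = {}
    normalized = []
    for tok in tokens:
        if IDENTIFIER.fullmatch(tok) and not keyword.iskeyword(tok):
            # Map each distinct identifier to v0, v1, ... in order of appearance.
            tok = names.setdefault(tok, f"v{len(names)}")
        normalized.append(tok)
    return hashlib.sha256(" ".join(normalized).encode("utf-8")).hexdigest()


# Two harmless variants that differ only in names, spacing, and a comment.
variant_a = "def total(a, b):\n    return a + b\n"
variant_b = "def sum_two(x, y):  # renamed\n    return x + y\n"

print(exact_signature(variant_a) == exact_signature(variant_b))
print(normalized_signature(variant_a) == normalized_signature(variant_b))
```

The first comparison is false and the second is true, which shows why a signature tied to exact bytes misses even trivial rewrites of the same logic.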

Venice.ai: Potential Risks of Uncensored Access

Venice.ai provides access to a variety of LLMs, including some with less censorship or looser restrictions. It positions itself as an open gateway for exploring the capabilities of various LLMs, offering what it describes as the most advanced, accurate, and uncensored models for a truly unrestricted AI experience, but it may also be used by bad actors to generate malicious content. The platform's risks include:

Bypassing censorship to generate malicious content: Attackers can use the platform's less restricted models to generate phishing templates, false propaganda, or attack ideas.

Lowering the barrier to prompt engineering: Even attackers without advanced "jailbreak" prompting skills can easily obtain output that would otherwise be restricted.

Accelerating the iteration of attack techniques: Attackers can use the platform to quickly test how different models respond to malicious instructions and to refine fraud scripts and attack methods.

Conclusion

The emergence of unrestricted LLMs means that cybersecurity faces a new attack paradigm that is more complex, more scalable, and more automated. Models of this kind not only lower the barrier to attack but also introduce new threats that are more covert and deceptive.

In this escalating contest between attack and defense, the parties in the security ecosystem can cope with future risks only by working together. First, investment in detection technology needs to increase, with tools that can identify and intercept phishing content, smart contract exploits, and malicious code generated by malicious LLMs. Second, models need stronger resistance to jailbreaking, and watermarking and traceability mechanisms should be explored so that the source of malicious content can be traced in critical scenarios such as finance and code generation. Third, sound ethical norms and regulatory mechanisms are needed to limit the development and abuse of malicious models at the root.

Weatherly
