Source: Geek Park

As widely speculated, OpenAI officially released its text-to-video product, Sora, on the third day of its 12-day livestream series.

At 2 a.m. on December 10, Beijing time, Sam Altman and several OpenAI employees demonstrated Sora's features and practical use cases in a livestream. When OpenAI released video samples in February this year, Sora set off a wave of enthusiasm across the global AI community. Since then, AI companies in China and abroad have launched text-to-video products of their own. Today Sora, the pioneer of this field, was finally unveiled.

Overall, the features demonstrated show that Sora surpasses current text-to-video products in video generation quality, originality of features, and technical sophistication.

In addition to basic text-to-video and image-to-video generation, Sora adds storyboards (building your own story card by card), text-based editing of an existing video, and the blending of videos from different scenes (in effect, adding special effects directly to a video). The overall product design seems aimed at bringing the video closer to the creator's own expression and helping them realize the shots and story they have in mind.

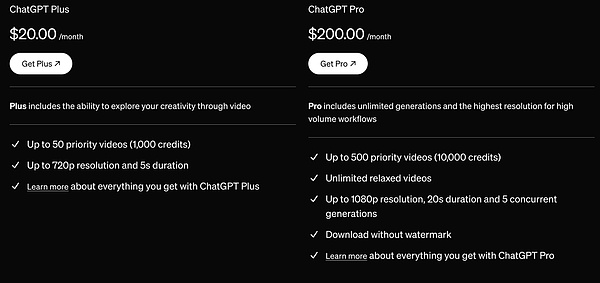

From late on December 9 local time, users in the United States and most other countries could access Sora on the official website. It is included in ChatGPT Plus and ChatGPT Pro subscriptions at no additional charge. Plus subscribers can generate up to 50 priority videos at up to 720p resolution and 5 seconds in length, while Pro subscribers can generate up to 500 priority videos at up to 1080p resolution and 20 seconds in length, and can download them without watermarks.

Sam Altman gave three reasons for building Sora:

First, as a tool: OpenAI likes to build tools for creative people, and this matters a great deal to the company's culture.

Second, in terms of user interaction: AI systems should not interact through text alone. They should also understand and generate video, which helps people make use of AI. This echoes a point made by Chinese large-model companies: "Every time a model adds a modality, user penetration increases."

Third, from a technical perspective: this is crucial to OpenAI's AGI roadmap. AI should learn more about how the world works, which is the so-called "world model" that understands the laws of physics.

Changing the world through technology while advancing human creativity through products: that is what Sora sets out to do.

01 Beyond generating videos: storyboards, special effects, and open-ended creation

Sora's most basic features are text-to-video and image-to-video generation.

On the main interface, users can view and manage all of their generated videos, switch between grid and list views, create folders and favorites, view bookmarks, and so on. The researchers said the main interface is designed to help users build stories.

At the bottom center of the main page are Sora's text-to-video and image-to-video inputs.

For example, Sam Altman first entered the text prompt "Woolly mammoths walking in the desert, shot with a wide-angle lens." The user then selects the video's aspect ratio, resolution, duration (5 to 20 seconds), and number of variations (up to four can be generated to choose from) before generating the video.

The generated video looks realistic and richly textured, and it largely follows the prompt. By now, the quality of Sora's video generation may no longer surprise anyone.

After the prompt "Woolly mammoths walking in the desert, shot with a wide-angle lens" was entered, Sora generated four videos | Image source: OpenAI

But this time, Sora also introduced a set of distinctive, more advanced features. In Geek Park's view, these features center on expressing ideas more precisely through video: with storyboards, special effects, and similar tools, people can tell the story they want to tell.

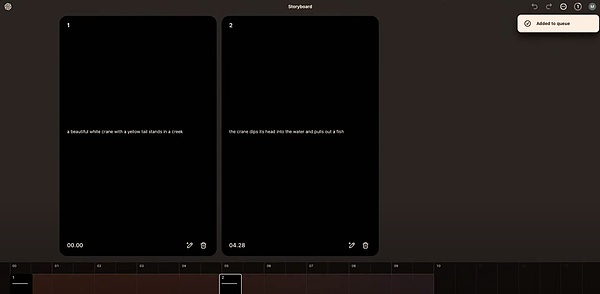

The first is the storyboard, which the researchers called a "new creative tool."

In product terms, it cuts a story (video) into a series of story cards (video frames) along a timeline. Users only need to design and adjust each story card, and Sora automatically fills in the rest to produce a smooth story (video). This closely resembles storyboards in film and draft drawings in animation: once a director has drawn the storyboard, the film is as good as shot, and once an animator has finished the drafts, the animation is as good as designed.

For example, the first card the researcher envisioned was "A beautiful white crane with a yellow tail stands in a stream." The second was "The crane dips its head into the water and catches a fish." He created these two story cards separately and left a gap of about five seconds between them. This gap matters to Sora: it gives the model room to connect the two actions.

The result was one continuous shot: a beautiful white crane with a yellow tail stands in a stream, then dips its head into the water and catches a fish.
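The storyboard described above can be pictured as a list of cards placed on a timeline. The following is only an illustrative sketch, not Sora's actual data model or API: the `StoryCard` class, its fields, and the `gaps_between` helper are hypothetical names introduced here, and the five-second spacing is taken from the researcher's example.

```python
from dataclasses import dataclass


@dataclass
class StoryCard:
    # Hypothetical fields, for illustration only.
    start_s: float  # where the card sits on the timeline, in seconds
    prompt: str     # text describing what happens at that point


def gaps_between(cards):
    """Return the gap in seconds between each pair of consecutive cards."""
    ordered = sorted(cards, key=lambda card: card.start_s)
    return [later.start_s - earlier.start_s
            for earlier, later in zip(ordered, ordered[1:])]


cards = [
    StoryCard(0.0, "A beautiful white crane with a yellow tail stands in a stream."),
    StoryCard(5.0, "The crane dips its head into the water and catches a fish."),
]

# The gap is the stretch of time the model would have to fill in
# to connect the two described actions.
print(gaps_between(cards))
```

In this picture, the space between cards is exactly what the article calls "room": the larger the gap, the more transition the model has to invent between the two prompts.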

From two story cards (video frames), Sora generated a complete story (video) | Image source: OpenAI

What's more, the creative elements on the storyboard are not limited to story cards; images and videos can be used directly. In other words, you can drag any image or video onto the storyboard and combine it with story cards.

Take video as an example. The researchers trimmed the white crane video and imported it into the storyboard. This left gaps before and after the clip that could be filled with new content, that is, a new beginning and a new ending.

This suggests that a storyboard can be extended indefinitely. A 20-second video generated by Sora can be generated, trimmed, and generated again... until it matches the shot the creator has in mind. The process resembles the work of an editor or director, who gradually cuts together the film in their head by repeatedly designing storyboards and generating and editing footage.

Unlike in the real world, the footage Sora can supply is unlimited. And unlike other text-to-video products, Sora lets users modify and rework its videos. As a result, the videos it generates come closer to what users imagine.

This seems to be the core idea behind the Sora product: make the generated video match, as closely as possible, the creative vision the user has in mind.

This also helps explain Sora's other features, such as modifying a video directly through text, seamlessly blending two different videos, and changing a video's style, which amounts to adding "special effects" directly to the video. With typical text-to-video products, by contrast, users may have to keep adjusting prompts and regenerating videos.

By editing the text, the user can adjust the video directly | Image source: OpenAI

Sora can merge two videos into a seamless clip | Image source: OpenAI

Overall, beyond delivering the strong video generation that was expected of it, Sora brings distinctive creation features that amount to adding storyboarding, editing, and special effects to video. This gives everyone the chance to express what they really want to, and brings them a step closer to being a director.

"If you go into Sora with expectations and think you can generate a movie with just one click of a button, then I think your expectations are wrong," said an OpenAI researcher.

He said that Sora is a tool that lets people try multiple ideas in multiple places at once and attempt things that were simply impossible before. "In fact, we think of this as a very special extension of the creator."

02 Serving the public at no separate charge, still powered by the underlying model

Although Sora pioneered the text-to-video field, it was the last to launch. The OpenAI research team explained that deploying Sora widely required finding ways to make the model faster and cheaper, and the team did a great deal of work to that end.

In the livestream, OpenAI announced Sora Turbo, a new, accelerated high-end version of the original Sora model. It has all the capabilities OpenAI described in its world simulation technical report earlier this year, and it can generate video from text, animate images, and remix videos. This is the technical foundation behind the Sora product features.

Video inference would seem to cost more than text inference, yet OpenAI is not charging separately for Sora this time. Sora is available to ChatGPT Plus subscribers at $20 per month and ChatGPT Pro subscribers at $200 per month.

The Plus plan includes up to 50 priority videos at up to 720p resolution and 5 seconds in length. The Pro plan includes up to 500 priority videos, unlimited relaxed videos, up to 1080p resolution, up to 20 seconds in length, and watermark-free downloads.
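To make the two tiers easier to compare, the figures reported above can be written out as a small lookup table. This is only a sketch for illustration: the `PLAN_LIMITS` dictionary, its key names, and the `fits_plan` helper are hypothetical and not part of any OpenAI API, and the `watermark_free` value for Plus is an assumption inferred from the article mentioning watermark removal only for Pro.

```python
# Figures as reported in this article; all names here are hypothetical.
PLAN_LIMITS = {
    "plus": {
        "price_usd_per_month": 20,
        "video_quota": 50,
        "max_resolution_p": 720,
        "max_duration_s": 5,
        "watermark_free": False,  # assumption: only Pro is said to remove watermarks
    },
    "pro": {
        "price_usd_per_month": 200,
        "video_quota": 500,
        "max_resolution_p": 1080,
        "max_duration_s": 20,
        "watermark_free": True,
    },
}


def fits_plan(plan, resolution_p, duration_s):
    """Check whether a requested video stays within a plan's reported limits."""
    limits = PLAN_LIMITS[plan]
    return (resolution_p <= limits["max_resolution_p"]
            and duration_s <= limits["max_duration_s"])


print(fits_plan("plus", 720, 5))    # within the Plus limits
print(fits_plan("plus", 1080, 20))  # exceeds the Plus limits
print(fits_plan("pro", 1080, 20))   # within the Pro limits
```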

Sora usage quotas by subscription tier | Image source: OpenAI

For OpenAI, Sora means more than a product. The team found that video models exhibit many interesting emergent capabilities when trained at scale, which allow Sora to simulate certain aspects of people, animals, and environments in the physical world. "Our results suggest that scaling video generation models is a promising path towards building general purpose simulators of the physical world."

Perhaps this is why making Sora available to the public as soon as possible, and using the resulting data to better train world models, matters so much to OpenAI's ultimate AGI ambitions.

While iterating on the technology, it also advances human creativity.

"This version of Sora will make mistakes, it's not perfect, but it's gotten to the point where we think it will be very useful for enhancing human creativity. We can't wait to see what the world will do with it," said OpenAI, Sora's creator.
