Anyone who has used DeepSeek-R1 is familiar with the way it thinks before giving an answer, which is one reason large reasoning models (LRMs), DeepSeek-R1 included, are so highly regarded.

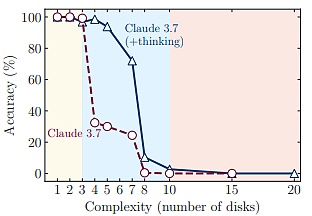

However, a team of six researchers from Apple has questioned this. By having models solve a range of puzzles, the team found that the accuracy of several cutting-edge large reasoning models, including DeepSeek-R1, o3-mini, and Claude-3.7-Sonnet-Thinking, collapses completely once problem complexity exceeds a certain threshold.

Figure | Related papers (Source: https://ml-site.cdn-apple.com/papers/the-illusion-of-thinking.pdf)

It is worth noting that Samy Bengio, senior director of machine learning research at Apple, is a co-author of this paper. He is not only the younger brother of Turing Award winner Yoshua Bengio, but also one of the first members of the Google Brain team.

Figure | The six authors of the paper; Samy Bengio is second from the right (Source: file photo)

One user on X summed it up by saying that Apple had played Gary Marcus for once. In fact, Gary Marcus himself posted on LinkedIn to endorse Apple's paper. He wrote: "Apple's new paper on 'reasoning' capabilities in large language models is quite impressive. I explain why (and explore a possible objection) in a weekend long post to show why people shouldn't be too surprised."

In his weekend long post, Gary Marcus wrote: "This new Apple paper further supports my own criticism: even though the latest so-called 'reasoning models' have iterated beyond the o1 version, they still cannot reason reliably out of distribution on classic problems such as the Tower of Hanoi.

This is undoubtedly bad news for researchers who hope that 'reasoning' or 'inference-time compute' can get large language models back on track and spare them the repeated failures of pure scaling (which has never produced a breakthrough worthy of the name 'GPT-5')."

Figure | The weekend long post published by Gary Marcus on his personal website (Source: https://garymarcus.substack.com/p/a-knockout-blow-for-llms)

So, is this "bad news" or "good news"? Let's start with the details of Apple's paper.

Models can make up to 100 correct moves in one puzzle, but no more than 5 in another

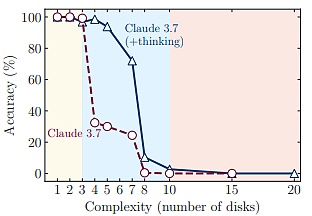

In the study, the research team from Apple identified three distinct performance regimes: on low-complexity tasks, standard large language models outperformed large reasoning models; on medium-complexity tasks, large reasoning models performed better; and on high-complexity tasks, neither type of model could complete the task effectively.

As a problem approaches the critical complexity, the models' reasoning effort counterintuitively decreases, suggesting that there may be an inherent upper limit on how far compute scaling can take large reasoning models.

These insights challenge prevailing assumptions about the capabilities of large reasoning models and suggest that current approaches may have fundamental barriers to achieving generalizable reasoning, the team says.

Most notably, the team observed that large reasoning models are limited in their ability to perform exact computation. For example, even when the models were handed an explicit algorithm for solving the mathematical puzzle Tower of Hanoi, their performance on the puzzle did not improve.
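For reference, the textbook recursive procedure for the Tower of Hanoi is short. The sketch below is the standard algorithm, not necessarily the exact text the paper supplied to the models; the function name `hanoi_moves` and the peg labels 0, 1, 2 are illustrative assumptions. An instance with n disks needs 2^n - 1 moves, so the required solution length grows exponentially with the number of disks.

```python
def hanoi_moves(n, source=0, target=2, spare=1):
    """Return the optimal move list for n disks as (disk, from_peg, to_peg) tuples.

    Disk 1 is the smallest. Peg numbering is an illustrative assumption.
    """
    if n == 0:
        return []
    # Move the n-1 smaller disks onto the spare peg, move disk n to the
    # target, then restack the n-1 smaller disks on top of it.
    return (
        hanoi_moves(n - 1, source, spare, target)
        + [(n, source, target)]
        + hanoi_moves(n - 1, spare, target, source)
    )


if __name__ == "__main__":
    for n in (3, 7, 10):
        # The move count equals 2**n - 1 for each n.
        print(n, len(hanoi_moves(n)), 2**n - 1)
```

Even a 10-disk instance already requires 1,023 moves in exactly the right order, which gives a sense of how quickly the difficulty can be dialed up by changing a single parameter.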

Furthermore, in-depth analysis of the models’ first missteps revealed surprising patterns of behavior. For example, the models could complete up to 100 correct moves in the Tower of Hanoi puzzle, but were unable to come up with more than five correct moves in the logic reasoning game River Crossing.

Overall, the research team believes that the paper highlights both the strengths and the limitations of existing large reasoning models. Its main conclusions are as follows:

First, the research team questioned the current paradigm of evaluating large reasoning models on established mathematical benchmarks, and designed a controllable experimental testbed built on algorithmic puzzle environments.

Second, the team's experiments showed that even the most advanced large reasoning models (such as o3-mini, DeepSeek-R1, and Claude-3.7-Sonnet-Thinking) have not yet developed generalizable problem-solving capabilities. Across different environments, once problem complexity exceeds a certain threshold, their accuracy eventually drops to zero.

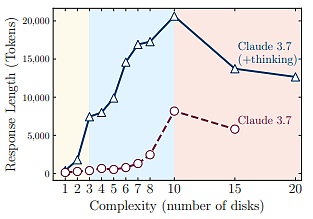

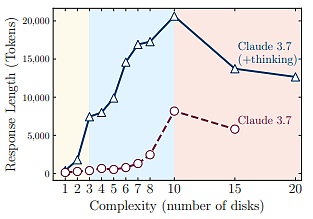

Third, the research team found that the reasoning effort of large reasoning models has a scaling limit tied to problem complexity, as shown by the counterintuitive decline in the number of thinking tokens once complexity passes a certain point.

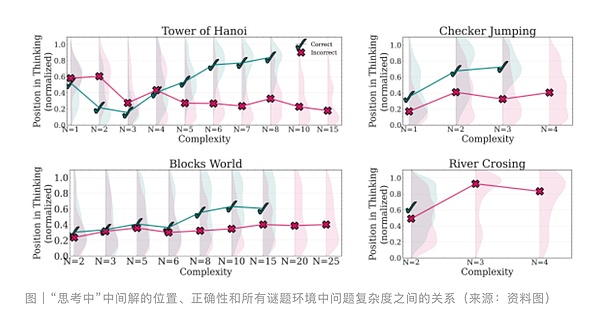

Fourth, the research team questioned the current evaluation paradigm based on final accuracy. Analysis showed that as problem complexity increases, correct solutions appear later in the reasoning process than incorrect ones.

Fifth, the research team revealed surprising limitations in the ability of large reasoning models to perform exact computation, including their failure to benefit from explicit algorithms and their inconsistent reasoning across puzzle types.

Large reasoning models have limited self-correction capabilities

Large reasoning models are new variants of large language models that are optimized for reasoning tasks.

Their core feature is a distinctive "thinking" mechanism, such as self-reflective Chain of Thought (CoT), and they have demonstrated excellent performance on multiple reasoning benchmarks.

The emergence of these models marks a possible paradigm shift in the way large language models handle complex reasoning and problem solving. Some researchers believe that this represents an important step towards more general artificial intelligence capabilities.

Despite these views and performance gains, the fundamental strengths and limitations of large reasoning models are still not fully understood. A key question remains unanswered: are these models capable of generalizable reasoning, or are they simply leveraging different forms of pattern matching?

How does their performance scale with problem complexity? Given the same inference-token compute budget, how do they compare with standard large language models that do not "think"?

Most importantly, what are the inherent limitations of current reasoning methods? What improvements might be needed to achieve more powerful reasoning capabilities?

The research team believes that the limitations of the current evaluation paradigm have led to a lack of systematic analysis of these issues. Existing evaluations mainly focus on established mathematical benchmarks and coding benchmarks. These benchmarks are certainly valuable, but they often have data contamination problems and cannot provide controlled experimental conditions under different scenarios and complexities.

In order to more rigorously understand the reasoning behavior of these models, the research team believes that an environment where controlled experiments can be conducted is needed.

To this end, instead of using standard benchmarks such as math problems, they used controlled puzzle environments in which the puzzle elements are adjusted while the core logic is preserved, so that complexity can be varied systematically and both the final solutions and the internal reasoning process can be examined.

(Source: file image)

These puzzles have the following characteristics:

(1) They can provide fine control over complexity;

(2) They avoid contamination commonly found in existing benchmarks;

(3) They rely only on explicitly given rules, emphasizing algorithmic reasoning capabilities;

(4) They support rigorous simulator-based evaluation, enabling precise solution checking and detailed failure analysis.
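To make the idea of simulator-based checking concrete, here is a minimal sketch of how a proposed Tower of Hanoi solution could be replayed and verified move by move. It is an illustration under stated assumptions, not the research team's simulator: the function name `first_invalid_move`, the `(disk, from_peg, to_peg)` move format, and the peg numbering are all hypothetical.

```python
def first_invalid_move(n, moves):
    """Replay `moves` on an n-disk Tower of Hanoi starting on peg 0.

    Returns (solved, index), where `index` is the position of the first
    illegal move, or None if every move was legal.
    Each move is a (disk, from_peg, to_peg) tuple; disk 1 is the smallest.
    """
    # Each peg is a stack; the last list element is the top disk.
    pegs = [list(range(n, 0, -1)), [], []]
    for i, (disk, src, dst) in enumerate(moves):
        legal = bool(
            src in (0, 1, 2)
            and dst in (0, 1, 2)
            and src != dst
            and pegs[src]                      # source peg is not empty
            and pegs[src][-1] == disk          # the named disk is on top
            and (not pegs[dst] or pegs[dst][-1] > disk)  # no larger-on-smaller
        )
        if not legal:
            return False, i
        pegs[dst].append(pegs[src].pop())
    # Solved only if all disks ended up, in order, on peg 2.
    return pegs[2] == list(range(n, 0, -1)), None
```

Because every rule is explicit, a checker like this can report not only whether an answer is right but also exactly where it first goes wrong, which is the kind of information a first-misstep analysis depends on.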

Through empirical research, they reported several key findings about current large reasoning models:

First, although large reasoning models can learn complex self-reflection mechanisms through reinforcement learning, they have failed to develop generalizable problem-solving capabilities for planning tasks, and their performance drops to zero beyond a certain complexity threshold.

Second, the research team's comparison of large reasoning models and standard large language models under equivalent inference compute revealed three distinct regimes.

In the first regime, on simpler, less combinatorial problems, the standard large language model is more efficient and more accurate.

In the second regime, as problem complexity increases moderately, the large reasoning model gains an advantage.

In the third regime, as problems become more complex with increasing combinatorial depth, both types of models suffer a complete performance collapse.

(Source: file image)

Notably, as the models approach this critical point of failure, they begin to reduce their reasoning effort (measured by the number of inference-time tokens) as problem complexity increases, even though they are still far from the generation length limit.

(Source: file image)

This points to a fundamental limitation in how the reasoning of large reasoning models scales: near the point of collapse, their reasoning effort stops growing with problem complexity and instead shrinks.

In addition, by analyzing the intermediate reasoning traces, the research team discovered a regular pattern tied to problem complexity: on simpler problems, the reasoning model can often find the correct solution quickly, but it still goes on inefficiently exploring incorrect options. This phenomenon is what people often call "overthinking".

In problems of medium complexity, the model needs to go through extensive exploration of a large number of wrong paths before it can find the correct solution. Beyond a certain complexity threshold, the model is completely unable to find the correct solution.

Bai Ting, an associate professor at Beijing University of Posts and Telecommunications, told DeepTech that this resembles how humans think: for complex problems, we often do not know the correct answer, but we do know what is incorrect. Specifically, this is related to the size of the solution space. For simple problems, the logical chain is short and the features match closely, so the solution naturally sits near the front of the thinking path. For complex problems, the coupling of multi-dimensional variables and the nesting of logical levels make the solution space expand exponentially, so the correct solution objectively appears relatively late in the thinking sequence.

What happens inside the "thinking" of the reasoning model?

In the study, most experiments were conducted on reasoning models and their corresponding non-reasoning models, such as Claude 3.7 Sonnet (with/without reasoning) and DeepSeek-R1/V3. The research team chose these models because, unlike models such as OpenAI's o series, they allow access to thought tokens.

For each puzzle instance, the research team generated 25 samples and reported the average performance of each model.
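As a rough illustration of this kind of reporting, the sketch below averages the pass/fail outcomes of repeated samples at each complexity level. The function name `accuracy_by_complexity` and the input format are assumptions made for the example, and the numbers in the usage note are made up rather than taken from the paper.

```python
from collections import defaultdict


def accuracy_by_complexity(results):
    """Average correctness per complexity level.

    `results` is an iterable of (complexity, is_correct) pairs, one pair
    per sampled answer (for example, 25 pairs per puzzle instance).
    """
    totals = defaultdict(lambda: [0, 0])  # complexity -> [correct, attempts]
    for complexity, is_correct in results:
        totals[complexity][0] += int(is_correct)
        totals[complexity][1] += 1
    return {
        c: correct / attempts
        for c, (correct, attempts) in sorted(totals.items())
    }
```

For instance, 20 correct answers out of 25 samples at complexity 3 and none out of 25 at complexity 10 would be reported as accuracies of 0.8 and 0.0 respectively.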

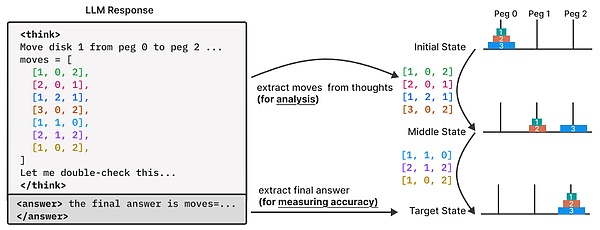

In order to gain a deeper understanding of the thinking process of the reasoning models, the research team conducted a detailed analysis of their reasoning traces.

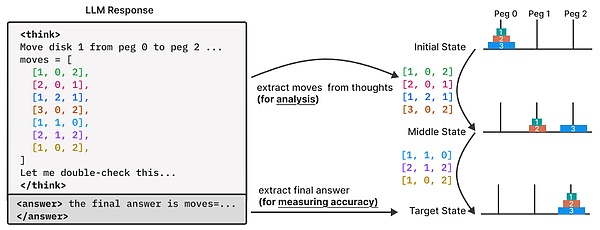

To do this, they used the puzzle environments to go beyond the model's final answer, so that they could observe and analyze the reasoning traces it generated (i.e., its "thinking process") in finer detail.

Specifically, they extracted and analyzed the intermediate solutions explored during the model’s thinking process with the help of a puzzle simulator.

They then examined the patterns and characteristics of these intermediate solutions, their correctness relative to their sequential position in the reasoning process, and how these patterns evolved with increasing problem complexity.

For this analysis, the research team focused on the reasoning traces produced by the Claude 3.7 Sonnet reasoning model across the puzzle experiments.

For each intermediate solution identified in the trace, the research team recorded the following: (1) its relative position in the reasoning trace (normalized by the total thought length), (2) its correctness verified by the research team’s puzzle simulator, and (3) the complexity of the corresponding problem.

This allowed the research team to describe the progression and accuracy of solution formation throughout the reasoning process.
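The three recorded fields can be pictured as one small record per intermediate solution. The sketch below is a hypothetical reconstruction, assuming that each candidate solution has already been located in the trace by its token offset and checked by a simulator; the names `SolutionRecord`, `make_records`, and `mean_position` are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class SolutionRecord:
    position: float   # token offset divided by total trace length, in [0, 1]
    correct: bool     # verdict from the puzzle simulator
    complexity: int   # e.g. number of disks in the Tower of Hanoi


def make_records(trace_length, candidates, complexity):
    """Build records for one reasoning trace.

    `candidates` is a list of (token_offset, is_correct) pairs for the
    intermediate solutions found in a trace of `trace_length` tokens.
    """
    return [
        SolutionRecord(offset / trace_length, ok, complexity)
        for offset, ok in candidates
    ]


def mean_position(records, correct):
    """Average normalized position of the correct (or incorrect) candidates."""
    picked = [r.position for r in records if r.correct == correct]
    return sum(picked) / len(picked) if picked else None
```

Comparing `mean_position(records, True)` with `mean_position(records, False)` at each complexity level is one simple way to see whether correct solutions tend to appear earlier or later in the thinking than incorrect ones.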

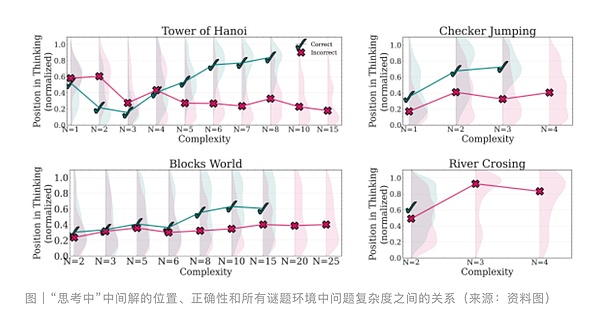

The research team found that for simpler problems, the reasoning model usually finds the correct solution early in the thinking process, but then continues to explore incorrect solutions.

Compared with correct solutions (green), the distribution of incorrect solutions (red) is shifted markedly toward the end of the thinking process. As problem complexity increases moderately, this trend reverses: the model explores incorrect solutions first and mostly arrives at the correct solution late in its thinking. In this case, the distribution of incorrect solutions (red) is shifted toward earlier positions relative to correct solutions (green).

Finally, for problems with higher complexity, the model begins to collapse, meaning that the model is unable to generate any correct solutions during the thinking process.

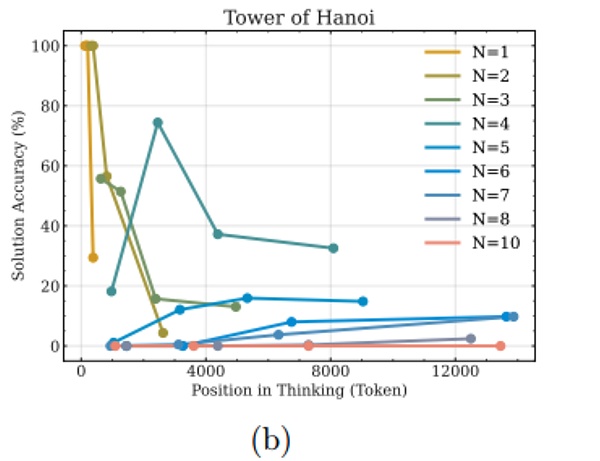

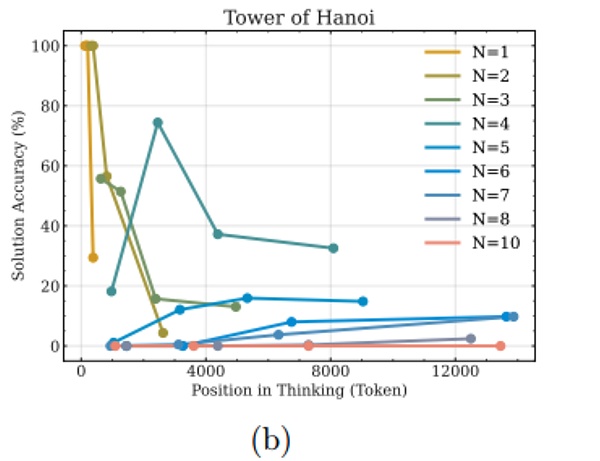

The following figure presents a supplementary analysis of the accuracy of solutions within the thought sequence segments (intervals) in the Tower of Hanoi environment.

It can be observed that for simpler problems (smaller values of N), the accuracy of the solution tends to decrease or fluctuate as the thinking progresses, providing further evidence for the overthinking phenomenon.

However, for more complex problems, this trend changes: the accuracy of the solution increases as thinking progresses, up to a certain complexity threshold. Beyond this threshold, in "collapse mode", the accuracy of the model is zero.

Bai Ting told DeepTech that a model has to reason over many iterations on complex problems. When no correct solution has been found, the model's reasoning mechanism may adopt a strategy that optimizes the efficiency of repeated iterative generation, possibly as a resource-protection measure to prevent too many iterations. The paper's findings therefore need careful analysis and verification at the level of model implementation.

Bai Ting pointed out that it is also possible that the reasoning process of large models is essentially a matter of recalling memorized patterns. For models such as DeepSeek-R1 and o3-mini, performance depends heavily on how well the training data covers those patterns. When problem complexity exceeds that coverage (as in the controllable puzzle environments designed by the Apple research team), the model falls into a "zero accuracy" state.

While the puzzle environments allow controlled experiments with fine-grained control over problem complexity, they represent only a small subset of reasoning tasks and may not capture the diversity of real-world or knowledge-intensive reasoning problems.

It should be noted that this study is mainly based on black-box API access to closed frontier large reasoning models, a limitation that prevents the research team from analyzing their internal state or architectural components.

In addition, when using a deterministic puzzle simulator, the research team assumed that reasoning can be perfectly verified step by step. However, in less structured domains, such precise verification may be difficult to achieve, limiting the transfer of this analysis method to a wider range of reasoning scenarios.

In summary, the research team examined frontier large reasoning models through the lens of problem complexity using controlled puzzle environments. The results reveal the limitations of current models: despite their sophisticated self-reflection mechanisms, they fail to develop generalizable reasoning capabilities beyond a certain complexity threshold. The research team believes that this work may pave the way for further study of the reasoning capabilities of these models.

Anais
