Source: AGI interface

In mid-May, during the 90-day window of tariff suspension, a battle for core computing resources suddenly heated up.

"Server prices fluctuate violently, and the price of each unit has risen by 15%-20% some time ago. With the suspension of tariffs, we plan to resume sales at the original price." A chip supplier in the southern region revealed to Huxiu.

At the same time, new variables have emerged on the supply side. Huxiu has learned exclusively that Nvidia's high-end Hopper-series products and its Blackwell series have quietly appeared in the domestic market. The former surfaced around September 2024; the latter appeared only recently. An executive at Huarui Zhisuan said, "Different suppliers have different channels for sourcing goods." The complex supply chain network behind those channels is all but impossible to trace.

(Huxiu note: Since October 17, 2023, Washington has progressively cut off sales of Nvidia chips to China, including the A100, A800, H800, H100, and H200; recently the H20, the last Hopper-series chip that could still be sold to China, was also added to the export restriction list.)

Here, Nvidia's high-end Hopper series usually refers to the H200, an upgraded version of the H100. The H200 costs only a little over 200,000 yuan more than the H100, yet its efficiency is 30% higher. The Blackwell series is also one of Nvidia's high-end lines; its B200 costs more than 3 million yuan. The B200 is currently the product under the tightest "circulation restrictions," and its circulation path is even more secretive. Both models are used for pre-training large models, and the B200 is especially hard to obtain.

Looking back at the timeline: in April 2024, a photo of Jensen Huang, Sam Altman, and OpenAI co-founder Greg Brockman circulated on Twitter. The photo marked a key delivery milestone for the first batch of H200 products: Nvidia CEO Jensen Huang delivered the hardware in person, and OpenAI was the first H200 user.

Just five months later, news of H200 supply emerged on the other side of the ocean. Today, domestic suppliers can supply 100 H200 servers per week. According to the supplier, with the H100 discontinued, market demand is shifting rapidly to the H200. No more than ten suppliers currently have H200 stock, and the gap between supply and demand has widened further.

"What is most lacking in the market now is H200, and as far as I know, a cloud factory is looking for H200 everywhere recently." An old player who has been engaged in the computing power industry for 18 years told Huxiu that they have long supplied computing power services to Baidu, Alibaba, Tencent, and Byte.

In this computing power arms race, the transaction chain is shrouded in mystery. A leading domestic computing power supplier said the prevailing pricing convention is to state only the computing power unit "P" in the contract, turning a server transaction into an abstract computing power transaction. (Huxiu note: P is a unit of computing power.) For example, when a user and a supplier trade computing power, the contract does not name the card model; it states how many P of computing power are supplied. In other words, the specific card model is never written down openly.

Deeper in the industrial chain, a covert trading network comes into view. Media reports have previously disclosed that some Chinese dealers brought servers to market by a roundabout route, through special procurement channels and multiple layers of resale and repackaging. Huxiu has further learned that some dealers take a different approach: with the help of third-party companies, they obtain servers by embedding the modules in other products.

Behind the undercurrent of the industrial chain, the development of the domestic computing power industry is also showing a new trend.

01 Where does the intelligent computing bubble come from?

At the end of 2023, the "Nvidia ban" from the other side of the ocean was like a boulder thrown into a calm lake, and a secret war over the core resources of computing power began.

In the first few months, the market showed a primitive chaos and restlessness. Under the temptation of huge profits, some individuals with a keen sense of smell began to take risks. "At that time, the market was full of 'suppliers' of various backgrounds, including overseas students and some well-informed individual traders," recalled an industry insider who did not want to be named. "Their circulation method was relatively simple and crude. Although the transactions were still secretive, they were far from forming the complex chain of subcontracting that was later formed."

These early "pioneers" exploited information asymmetry and various informal channels to supply Nvidia's high-end graphics cards to the market, and prices rose accordingly. According to media reports, some individual suppliers priced the Nvidia A100 at 128,000 yuan, far above its official suggested retail price of about 10,000 US dollars. Others showed off H100 chips on social media and quoted prices as high as 250,000 yuan per chip. At the time, such behavior was almost ostentatious.

Amid this covert circulation, some large computing power suppliers began to build similar trading channels of their own, and an intelligent computing craze emerged alongside them. Between 2022 and 2024, many localities rushed to build intelligent computing centers. Data show that in 2024 alone, there were more than 458 intelligent computing center projects.

However, this vigorous "card speculation and intelligent computing craze" did not last long. By the end of 2024, especially after domestic large models such as DeepSeek emerged with their high cost-effectiveness, some computing power suppliers who simply relied on "hoarding cards" or lacked core technical support found that their stories were becoming more and more difficult to tell. The bubble of intelligent computing is gradually showing signs of bursting.

According to statistics, in the first quarter of 2025, there were 165 intelligent computing center projects in mainland China, of which 58% (95) were still in the approved or planned stage, and another 33% (54) were under construction or about to be put into production. Only 16 of them were actually put into production or trial operation, accounting for less than 10%.
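The shares quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch that uses only the counts given in the paragraph; the dictionary keys and the function name `stage_shares` are illustrative labels, not terms from the underlying statistics.

```python
# Project counts by stage for Q1 2025, as quoted in the article.
stage_counts = {
    "approved_or_planned": 95,
    "under_construction_or_near_production": 54,
    "in_production_or_trial": 16,
}
total_projects = 165  # total quoted in the article


def stage_shares(counts, total):
    """Return each stage's share of the total, in percent."""
    return {stage: 100 * n / total for stage, n in counts.items()}


shares = stage_shares(stage_counts, total_projects)
for stage, pct in shares.items():
    print(f"{stage}: {pct:.1f}%")
```

The three counts add up to the 165 projects cited, and the last share comes out just under 10%, in line with the article's wording.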

Of course, it is not just China that is showing signs of a bubble bursting. In the past six months, Meta, Microsoft and others have reportedly suspended some global data center projects. The other side of the bubble is worrying inefficiency and idleness.

An industry insider told Huxiu, "Currently, the 'light-up rate' of intelligent computing centers, meaning the share of installed capacity that is actually switched on, is less than 50%. Domestic chips cannot be used for pre-training at all because of performance shortcomings. Moreover, some intelligent computing centers use relatively outdated servers."

Industry insiders attribute this phenomenon of "having cards but not being able to use them" to a "structural mismatch": the problem is not an absolute surplus of computing power but an insufficient supply of effective computing power that can meet high-end needs. At the same time, a large stock of existing computing resources cannot be fully used because of technology gaps, an immature ecosystem, or insufficient operational capability.

However, in the intelligent computing landscape where noise and hidden worries coexist, technology giants have shown completely different attitudes.

It is reported that ByteDance plans to invest more than US$12.3 billion (about RMB 89.2 billion) in AI infrastructure in 2025, of which RMB 40 billion will be used to purchase AI chips in China, and another RMB 50 billion is planned to purchase Nvidia chips. In response, ByteDance told Huxiu that the news was inaccurate.

Another company that has made a big investment in AI is Alibaba. CEO Wu Yongming publicly announced on February 24 that Alibaba plans to invest RMB 380 billion in building AI infrastructure in the next three years. This figure even exceeds the total of the past decade.

But with purchases on this scale, pressure on the supply side is also obvious. "Market supply cannot keep up with the big companies, and many suppliers cannot deliver even after signing contracts," a salesperson at an intelligent computing supplier told Huxiu.

The intelligent computing bubble described above stands in sharp contrast to the large-scale AI infrastructure investment by big companies: on the one hand, computing power suppliers, led by A-share listed companies, have halted large intelligent computing projects; on the other, big companies are actively investing in AI infrastructure.

The reason is not hard to understand: the sharp cooling of intelligent computing coincided with the emergence of DeepSeek. Since the start of this year, no one has talked about a "Hundred Model War." DeepSeek punctured the bubble in training demand, and only the big companies and a handful of AI model companies are left at the table.

Feng Bo, managing partner of Changlei Capital, told Huxiu, "Once training is no longer flourishing everywhere, those with the real ability and qualifications to train, such as Alibaba and ByteDance, will keep buying cards for training, while those without the ability will eventually leave, and the computing power in their hands will become a bubble."

02 Computing power that was returned

Every "bubble" is rooted in people's irrational imagination of scarcity. Those who speculate on Moutai are not Moutai enthusiasts, and those who hoard computing power are not computing power consumers; what they share is a speculative mentality.

Between the end of 2024 and the first quarter of 2025, companies including Feilixin, Lotus Holdings, and Jinji Shares successively terminated computing power leasing contracts worth hundreds of millions of yuan. A computing power supplier told Huxiu, "In the computing power leasing business, lease termination is a common thing."

The companies that terminated their leases are not the real end users of computing power. With the industry shock caused by DeepSeek, the AI bubble has gradually burst, and many computing power suppliers now face excess capacity, searching everywhere for stable customers and exploring new ways to consume their computing power.

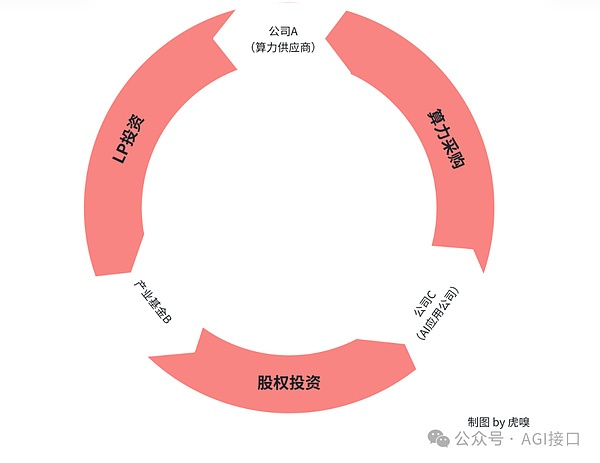

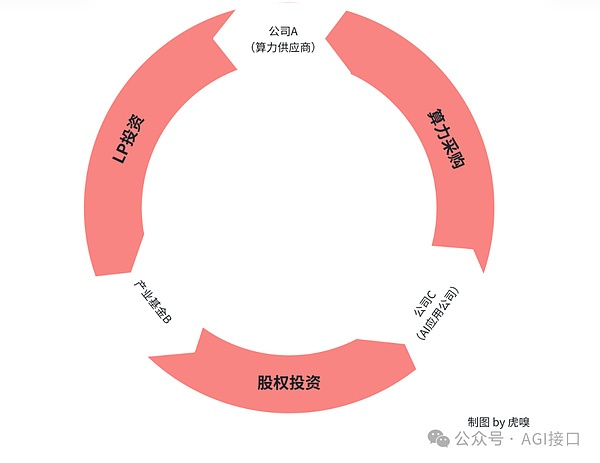

In its investigation, Huxiu found that the business card of one computing power supplier's founder lists, in addition to three companies in intelligent computing and cloud computing, an investment company. Further digging showed that the investment company's portfolio includes a robotics company and a company focused on developing large models and cloud systems. The founder told Huxiu that "all the computing power needs of the two invested companies are met by our own computing power supply system, and the invested companies usually purchase this computing power at low market prices."

In fact, binding intelligent computing to investment is by no means an isolated case in the industry. For many computing power suppliers, "this is a very useful way to consume cards at present, but it has not been put on the table," Feng Bo told Huxiu.

In the story above, however, the path is a "monopolistic" one: the computing power supplier locks in demand through investment and directly meets all the computing power needs of the invested projects.

This is not the only way. Feng Bo believes another model deserves attention: "the computing power supplier enters an industrial fund as an LP and builds a closed-loop chain of computing power demand."

Specifically, this business model works through capital linkage. Computing power supplier A, as a prospective limited partner (LP), reaches a cooperation agreement in principle with industrial fund B. Fund B's portfolio includes AI application company C, whose business has a rigid demand for computing power. Through its strategic investment in fund B, A indirectly locks in C's future computing power purchases, building a closed loop of "capital investment, then computing power procurement." If the deal goes through, A gains priority service rights by virtue of its LP status and becomes C's preferred computing power supplier. In essence, the model forms a circular flow of funds: A's investment in fund B eventually flows back to A through C's computing power purchases.

"This is not a mainstream method, but it is a relatively easy-to-use method." Feng Bo said frankly.

03 The bubble is about to burst, and then what?

"When talking about the bubble of intelligent computing, we can't just talk about computing power. It is a problem of the industrial chain. If you want to use computing power, you need to string together the broken points. Now this industrial chain has not formed a closed loop." The chief marketing officer of a computing power supplier who has been deeply involved in the industry for many years pointed out to Huxiu the core crux of the current intelligent computing industry.

Entering the first half of 2025, a significant trend in the field of AI is that the term "pre-training", which was once on the lips of major AI companies, is gradually being replaced by "inference". Whether it is for the vast C-end consumer market or for B-end enterprise applications that empower thousands of industries, the growth curve of inference demand is extremely steep.

"Let's do a simple deduction," an industry analyst estimated, "taking the volume of mainstream AI applications on the market, such as Doubao and DeepSeek, as an example, assuming that each active user generates an average of 10 pictures per day, the computing power demand behind this may easily reach the million P level. This is just a single scenario of image generation. If multimodal interactions such as text, voice, and video are superimposed, the magnitude of the demand is even more difficult to estimate."

And that is only inference demand from consumers. For business users, inference demand is even larger. An executive at Huarui Zhisuan told Huxiu that automakers start at a scale of 10,000 P when they build intelligent computing centers, "and among our customers, apart from the big tech companies, automakers have the greatest computing power needs."

Yet the story seems absurd when this massive inference demand is set beside the computing power bubble. Why does so much inference demand still coexist with a bubble?

A computing power supplier told Huxiu that serving inference at this scale requires intelligent computing providers to optimize computing power through engineering, for example by cutting startup time, expanding storage capacity, reducing inference latency, and improving throughput and inference accuracy.

Beyond that, a large part of the supply-demand mismatch described above comes from the chips themselves. An industry insider told Huxiu that the gap between some domestic cards and Nvidia's remains fairly large, and their performance is uneven across dimensions. Stacking more cards of the same brand does not remove the weak spots, which leaves a single cluster unable to complete AI training and inference effectively.

This "short board effect," in which the weakest component caps the whole, means that even if a computing power cluster is built by stacking chips at scale, its overall performance stays limited until the weak spot is fixed, making it hard to efficiently support complex training and large-scale inference for large AI models.

In fact, severe as the engineering challenges and chip bottlenecks at the computing power layer are, many deep-seated computing power needs remain unmet for a different reason. The real "breakpoint" often lies in the application ecosystem above the computing power layer, especially the serious shortage of L2-layer vertical models (that is, models for specific industries or scenarios).

The medical industry has exactly such a huge "hole" to fill. The siphoning of talent is a long-criticized structural problem in the domestic medical system: excellent doctors are concentrated in tertiary hospitals in first-tier cities. But as the industry looks to large medical models to bring high-quality medical resources to lower-tier areas, a more fundamental challenge has surfaced: how to build a trusted medical data space?

Data is the key prerequisite for training a vertical model that can handle diagnosis and treatment across the full course of a disease. The problem is that the model needs massive data covering the entire course of disease, all age groups, all genders, and all regions before it can form knowledge. In reality, less than 5% of medical data is open.

The director of the information department of a tertiary hospital revealed that of the 500TB of diagnosis and treatment data generated by his hospital each year, less than 3% of the desensitized structured data can actually be used for AI training. What's more serious is that the data on rare and chronic diseases, which account for 80% of the value of the disease map, have long been dormant in the "data islands" of various medical institutions due to their sensitivity.
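To put the director's figures in concrete terms, the following is a minimal back-of-the-envelope sketch. It treats "less than 3%" as an upper bound, which is an assumption about how to read the quote, and the variable names are illustrative.

```python
# Figures quoted by the hospital's information director.
annual_data_tb = 500          # diagnosis and treatment data generated per year, in TB
usable_percent_ceiling = 3    # "less than 3%" read as an upper bound (assumption)

# Upper bound on the volume of desensitized, structured data usable for AI training.
usable_ceiling_tb = annual_data_tb * usable_percent_ceiling / 100
unused_floor_tb = annual_data_tb - usable_ceiling_tb

print(f"Usable for AI training: under {usable_ceiling_tb:.0f} TB per year")
print(f"Not usable: over {unused_floor_tb:.0f} TB per year")
```

On these numbers, fewer than 15 TB of the hospital's annual 500 TB would be available for training.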

If breakpoints like this cannot be resolved, the industrial chain cannot form a closed loop, and the demand for computing power naturally cannot be met. This clearly goes far beyond what traditional computing power infrastructure suppliers, who provide only "cards and electricity," can handle on their own.

However, a group of new intelligent computing service providers are quietly emerging in the market. These companies no longer limit themselves to simply providing hardware or leasing computing power. They can also form professional algorithm teams and industry expert teams to deeply participate in the development and optimization of customers' AI applications.

At the same time, to address problems such as resource mismatch and low computing power utilization, local governments are introducing computing power subsidy policies tailored to local industry needs. Among these, "computing power coupons" are a subsidy that directly reduces the cost of computing power for enterprises. For China's intelligent computing industry at its current stage, however, a simple policy "first aid" may no longer be enough to fundamentally reverse the situation.

Today, what the intelligent computing industry needs is a "hematopoietic" ecosystem, one that can generate its own lifeblood rather than depend on outside infusions.

Brian
