Source: Quantum Bit

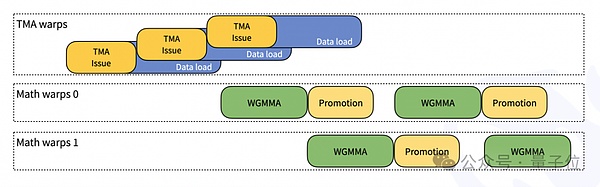

On the third day of its Open Source Week, DeepSeek revealed the "power" behind the training and inference of V3/R1:

DeepGEMM, an FP8 GEMM (general matrix multiplication) library that supports both dense and mixture-of-experts (MoE) matrix multiplication.

Let's first take a brief look at GEMM.

GEMM, or general matrix multiplication, is a fundamental operation in linear algebra, a "regular" in scientific computing, machine learning and deep learning, and the core of many high-performance computing workloads.

Because its computational cost is high (multiplying an M×K matrix by a K×N matrix takes M·N·K multiply-adds), optimizing GEMM performance is crucial.
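To make the definition concrete, here is a minimal pure-Python reference for GEMM in its usual BLAS form, C ← αAB + βC. It only illustrates the operation itself, not how DeepGEMM computes it, and the function name `gemm` is our own choice.

```python
from typing import List

Matrix = List[List[float]]


def gemm(alpha: float, a: Matrix, b: Matrix, beta: float, c: Matrix) -> Matrix:
    """Return alpha * (a @ b) + beta * c for an M x K matrix a and a K x N matrix b."""
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    out = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            # Dot product of row i of a with column j of b: K multiply-adds.
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]
            out[i][j] = alpha * acc + beta * c[i][j]
    return out


# A 2x3 times 3x2 product with alpha = 1 and beta = 0.
print(gemm(1.0, [[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]], 0.0, [[0, 0], [0, 0]]))
# [[58.0, 64.0], [139.0, 154.0]]
```

High-performance libraries keep exactly this arithmetic but reorganize the triple loop into tiles that fit the GPU's memory hierarchy and matrix units.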

DeepSeek's open-source DeepGEMM keeps the company's signature "high performance, low cost" style, with the following highlights:

High performance: On Hopper-architecture GPUs, DeepGEMM achieves up to 1350+ FP8 TFLOPS.

Simplicity: The core logic is only about 300 lines of code, yet its performance matches or exceeds kernels tuned by experts.

Just-in-time compilation (JIT): All kernels are compiled at runtime, so optimized code can be generated on the fly for the specific matrix shapes being used.

No heavy dependencies: The library is designed to be lightweight, without complex dependencies, which keeps deployment and use simple.

Support for multiple matrix layouts: It supports a dense layout and two MoE layouts, making it applicable to a range of scenarios, including but not limited to mixture-of-experts models in deep learning.

In short, DeepGEMM is mainly used to accelerate matrix operations in deep learning, especially in large-scale model training and inference, and it is particularly suited to workloads where computing resources must be used as efficiently as possible.

Many netizens were quick to embrace the release. Some likened DeepGEMM to a superhero of the math world, saying it is faster than a speeding calculator and more powerful than a polynomial equation.

Others compared the release of DeepGEMM to a quantum state stabilizing into a new reality, praising its clean, neat just-in-time compilation.

Of course... some people have started to worry about their Nvidia stock...

Learn more about DeepGEMM

DeepGEMM is a library designed for clean and efficient FP8 general matrix multiplications (GEMMs) with fine-grained scaling, a design that comes from DeepSeek-V3.

It supports both normal GEMMs and grouped GEMMs for MoE models.

The library is written in CUDA and needs no compilation at install time: all kernels are compiled at runtime by a lightweight just-in-time (JIT) module.
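Fine-grained scaling means each small group of values gets its own scale factor instead of sharing one per tensor. The sketch below quantizes a row in groups of 128 to a simulated FP8 E4M3 format (largest finite value 448); the DeepSeek-V3 report describes 1×128 tiles for activations and 128×128 blocks for weights, but the helper names and the pure-Python E4M3 rounding here are our own illustration, not DeepGEMM code.

```python
import math
from typing import List, Tuple

FP8_E4M3_MAX = 448.0  # largest finite FP8 E4M3 value


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest FP8 E4M3 value (3 mantissa bits), saturating at +/-448."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), FP8_E4M3_MAX)
    _, e = math.frexp(a)     # a = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, -6)     # below 2**-6 the format is subnormal with a fixed spacing
    step = 2.0 ** (exp - 3)  # spacing of representable values at this exponent
    return sign * round(a / step) * step


def quantize_per_group(row: List[float], group: int = 128) -> List[Tuple[List[float], float]]:
    """Quantize `row` in chunks of `group` elements, each chunk with its own scale."""
    groups = []
    for start in range(0, len(row), group):
        chunk = row[start:start + group]
        amax = max(abs(v) for v in chunk)
        scale = amax / FP8_E4M3_MAX if amax > 0 else 1.0  # map the chunk's max to 448
        groups.append(([round_to_e4m3(v / scale) for v in chunk], scale))
    return groups


def dequantize(groups: List[Tuple[List[float], float]]) -> List[float]:
    return [q * scale for values, scale in groups for q in values]


def max_rel_error(original: List[float], restored: List[float]) -> float:
    return max(abs(x - y) / abs(x) for x, y in zip(original, restored) if x != 0)


# 128 small activations followed by 128 large ones.
small = [1e-4 * (i + 1) for i in range(128)]
row = small + [1000.0] * 128

per_group = dequantize(quantize_per_group(row, group=128))[:128]   # one scale per 128 values
per_tensor = dequantize(quantize_per_group(row, group=256))[:128]  # one scale for everything

print(max_rel_error(small, per_group))   # below 1/16: every scaled value stays in the normal range
print(max_rel_error(small, per_tensor))  # 1.0: the smallest values are flushed to zero
```

With a single scale, the large values force a scale so coarse that the smallest activations underflow to zero; per-group scales keep every group within FP8's useful range.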
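The appeal of runtime compilation is that values known only at call time can be baked into the kernel as compile-time constants, and each configuration is built once and then reused. As we understand the DeepGEMM README, shapes, block sizes and pipeline stages are treated this way. The sketch below shows only the caching pattern; `KERNEL_TEMPLATE` and `get_kernel` are hypothetical, and "compiling" merely fills in a string instead of invoking NVCC.

```python
import functools

# Hypothetical stand-in for a CUDA C++ kernel template. In a real JIT this
# source would be handed to NVCC; here "compiling" only fills in the constants.
KERNEL_TEMPLATE = "gemm_kernel<N={n}, K={k}, BLOCK_M={bm}, BLOCK_N={bn}, STAGES={stages}>"

compile_count = 0  # counts how many times the expensive step actually runs


@functools.lru_cache(maxsize=None)
def get_kernel(n: int, k: int, bm: int, bn: int, stages: int) -> str:
    """Build (or fetch from cache) a kernel specialized for one configuration."""
    global compile_count
    compile_count += 1  # stands in for an NVCC invocation
    return KERNEL_TEMPLATE.format(n=n, k=k, bm=bm, bn=bn, stages=stages)


k1 = get_kernel(7168, 2048, 128, 112, 5)
k2 = get_kernel(7168, 2048, 128, 112, 5)  # same configuration: served from the cache
k3 = get_kernel(4096, 7168, 64, 128, 8)   # new configuration: built once more
print(compile_count)  # 2
print(k1 is k2)       # True
```

Paying the compile cost once per configuration is what lets a JIT library skip install-time compilation without slowing down repeated calls.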

Currently, DeepGEMM only supports NVIDIA's Hopper Tensor Cores.

Because FP8 Tensor Cores accumulate with insufficient precision, DeepGEMM uses two-level accumulation (promotion) on the CUDA cores: the Tensor Cores' partial results are periodically promoted into FP32 accumulators on the CUDA cores.
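The effect of promotion can be simulated with a toy accumulator. The sketch assumes a low-precision accumulator that keeps about 14 significant bits and a promotion interval of 128 elements along K, figures reported in the DeepSeek-V3 technical report; real hardware behavior is more nuanced, and all names here are our own.

```python
import math
from typing import List

ACC_BITS = 14        # assumed significand bits kept by the low-precision accumulator
PROMOTE_EVERY = 128  # assumed promotion interval along K


def round_to_bits(x: float, bits: int = ACC_BITS) -> float:
    """Round x to `bits` significant bits, mimicking a narrow accumulator."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)  # x = m * 2**e with 0.5 <= |m| < 1
    return math.ldexp(round(m * (1 << bits)), e - bits)


def low_precision_sum(terms: List[float]) -> float:
    """Accumulate with every partial sum rounded to ACC_BITS bits."""
    acc = 0.0
    for t in terms:
        acc = round_to_bits(acc + t)
    return acc


def promoted_sum(terms: List[float], interval: int = PROMOTE_EVERY) -> float:
    """Two-level accumulation: short low-precision runs, promoted into a wide accumulator."""
    total = 0.0  # Python float (FP64) plays the role of the FP32 accumulator
    for start in range(0, len(terms), interval):
        total += low_precision_sum(terms[start:start + interval])
    return total


# K = 4096 already-multiplied products feeding one output element.
terms = [1.0 + (i % 7) * 0.001 for i in range(4096)]
exact = math.fsum(terms)
err_naive = abs(low_precision_sum(terms) - exact)
err_promoted = abs(promoted_sum(terms) - exact)
print(f"low precision only: {err_naive:.3f}, with promotion: {err_promoted:.3f}")
```

Under these assumptions, once the running sum grows past roughly 128 the narrow accumulator can no longer represent the small fractional parts and silently drops them; restarting the low-precision sum every 128 terms keeps it small enough that they survive.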

Although DeepGEMM borrows some ideas from CUTLASS and CuTe, it avoids heavy reliance on their templates and algebra.

Instead, the library is designed for simplicity, with only one core kernel function of about 300 lines of code.

This makes it a concise, easy-to-follow resource for learning FP8 matrix multiplication and optimization techniques on the Hopper architecture.

Despite its lightweight design, DeepGEMM’s performance can match or exceed expert-tuned libraries for a variety of matrix shapes.

So what’s the specific performance?

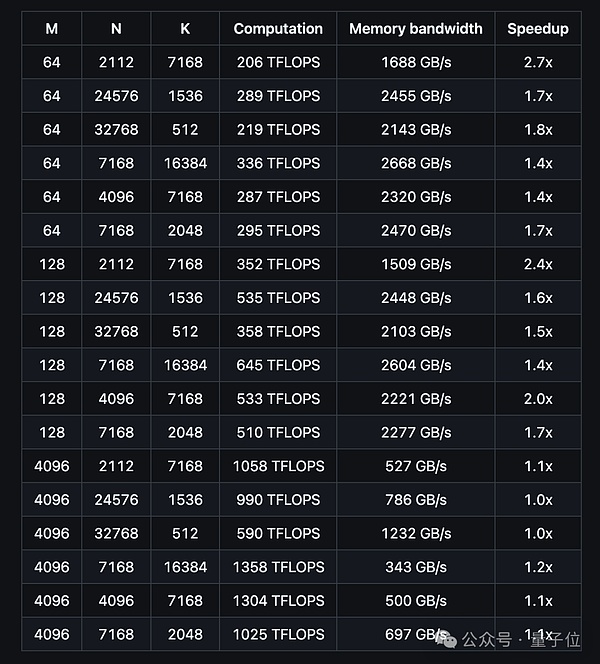

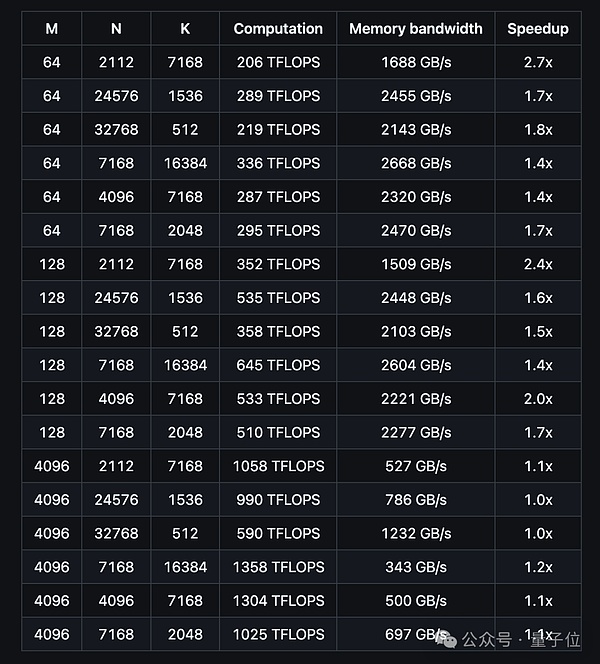

The team tested all shapes potentially used in DeepSeek-V3/R1 inference (covering both prefilling and decoding, but without tensor parallelism) on H800 GPUs with NVCC 12.8.

The following figure shows the performance of normal (non-grouped) DeepGEMM on dense models:

According to the test results, DeepGEMM reaches up to 1358 TFLOPS of compute throughput and up to 2668 GB/s of memory bandwidth.

The speedup reaches up to 2.7x over an optimized implementation based on CUTLASS 3.6.
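Throughput figures like these are derived from the problem shape and the measured time. A common convention, assumed here since the article does not state DeepSeek's exact accounting, counts 2·M·N·K FLOPs and, for bandwidth, the bytes of the FP8 inputs and the BF16 output; the helper names and the 1 ms timing are hypothetical.

```python
def gemm_tflops(m: int, n: int, k: int, seconds: float) -> float:
    """Each of the M*N outputs needs K multiply-adds, i.e. 2*M*N*K FLOPs in total."""
    return 2 * m * n * k / seconds / 1e12


def gemm_gbps(m: int, n: int, k: int, seconds: float) -> float:
    """Rough bandwidth: FP8 inputs (1 byte each) read once, BF16 output (2 bytes each) written once.

    Scaling factors and any re-reads from DRAM are ignored.
    """
    bytes_moved = m * k + n * k + 2 * m * n
    return bytes_moved / seconds / 1e9


# Hypothetical measurement: a 1024 x 1024 x 1024 GEMM that takes 1 ms.
print(gemm_tflops(1024, 1024, 1024, 1e-3))
print(gemm_gbps(1024, 1024, 1024, 1e-3))
```

Small-M shapes, typical of decoding, tend to be limited by memory traffic rather than arithmetic, which is why both metrics are worth reporting.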

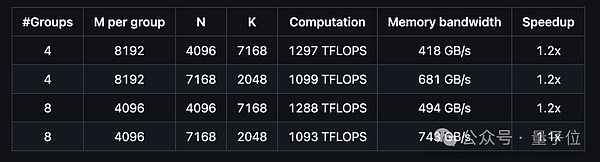

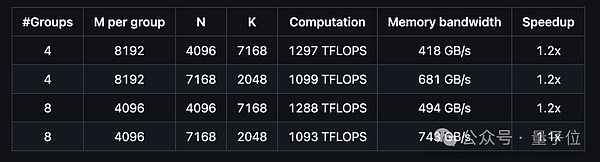

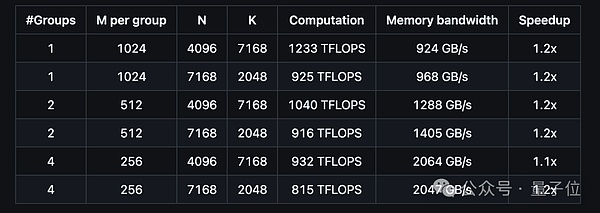

Next, here is DeepGEMM's performance on the contiguous layout for MoE models:

And here is its performance on the masked layout for MoE models:
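In the contiguous layout, as we understand the DeepGEMM README, the tokens routed to each expert are concatenated along the M axis and each expert's segment is aligned to the GEMM's M block size, so that no M tile mixes two experts. The sketch below builds such a row-to-expert map; the `PAD` sentinel, the function name and the toy alignment of 4 are hypothetical.

```python
from typing import List, Tuple

PAD = -1  # hypothetical marker for padding rows that belong to no expert


def build_contiguous_layout(tokens_per_expert: List[int], align: int) -> Tuple[List[int], List[int]]:
    """Stack each expert's tokens along M, padding every segment to a multiple of `align`.

    Returns (row_to_expert, segment_starts): row_to_expert[r] is the expert whose
    weights row r is multiplied with (PAD for filler rows), and segment_starts[e]
    is the first row of expert e's segment.
    """
    row_to_expert: List[int] = []
    segment_starts: List[int] = []
    for expert, count in enumerate(tokens_per_expert):
        segment_starts.append(len(row_to_expert))
        padded = -(-count // align) * align  # round count up to a multiple of align
        row_to_expert += [expert] * count + [PAD] * (padded - count)
    return row_to_expert, segment_starts


rows, starts = build_contiguous_layout([3, 0, 5], align=4)
print(rows)    # [0, 0, 0, -1, 2, 2, 2, 2, 2, -1, -1, -1]
print(starts)  # [0, 4, 4]
```

Because every segment length is a multiple of the alignment, each aligned tile of rows belongs to at most one expert, so a kernel can load a single expert's weights per tile.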

How to use it?

To use DeepGEMM, you need to pay attention to several dependencies, including:

For development:

```bash
# Submodules must be cloned
git clone --recursive git@github.com:deepseek-ai/DeepGEMM.git
cd DeepGEMM

# Make symbolic links for third-party (CUTLASS and CuTe) include directories
python setup.py develop

# Test JIT compilation
python tests/test_jit.py

# Test all GEMM implementations (normal, contiguous-grouped and masked-grouped)
python tests/test_core.py
```

To install:

```bash
python setup.py install
```

After the above steps, import deep_gemm in your Python project.

As for the interface, normal (dense) GEMMs use the deep_gemm.gemm_fp8_fp8_bf16_nt function, which supports the NT format (non-transposed LHS and transposed RHS).

For grouped GEMMs, use m_grouped_gemm_fp8_fp8_bf16_nt_contiguous for the contiguous layout and m_grouped_gemm_fp8_fp8_bf16_nt_masked for the masked layout.

DeepGEMM also provides utility functions, for example to set the maximum number of SMs to use and to get the TMA alignment size, and it supports environment variables such as DG_NVCC_COMPILER and DG_JIT_DEBUG.

In addition, the DeepSeek team describes several optimization techniques used in the library, including:
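The "NT" name describes how the operands are stored: the left matrix is M×K as usual, while the right operand is stored as N×K, the transpose of the K×N matrix, so both are read along K. The sketch below is a plain reference for that contraction with scale factors; `gemm_nt_reference` is hypothetical and is not the signature of deep_gemm.gemm_fp8_fp8_bf16_nt, which works on FP8 tensors with per-group scales and writes BF16.

```python
from typing import List

Matrix = List[List[float]]


def gemm_nt_reference(a: Matrix, a_scales: List[float], b: Matrix, b_scales: List[float]) -> Matrix:
    """out[i][j] = sum_k (a[i][k] * a_scales[i]) * (b[j][k] * b_scales[j]).

    "NT": `a` is M x K (not transposed) and `b` is stored as N x K, i.e. it is the
    transpose of the K x N right-hand operand, so both are read contiguously along K.
    Simplification: one scale per row, so the scales can be pulled out of the sum.
    """
    return [
        [sum(x * y for x, y in zip(a_row, b_row)) * sa * sb for b_row, sb in zip(b, b_scales)]
        for a_row, sa in zip(a, a_scales)
    ]


a = [[1.0, 2.0], [3.0, 4.0]]              # M=2, K=2
b = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # N=3, K=2
print(gemm_nt_reference(a, [1.0, 0.5], b, [1.0, 1.0, 2.0]))
# [[1.0, 2.0, 6.0], [1.5, 2.0, 7.0]]
```

With real fine-grained scaling the scales change every 128 elements along K, so they cannot be factored out of the whole sum; they have to be applied to each partial result, which fits naturally with the promotion step.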
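The masked layout, as we understand the DeepGEMM README, targets inference decoding with CUDA graphs, where the CPU does not know how many tokens each expert received: every expert gets a fixed-size buffer, and a mask tensor tells the kernel how many rows are valid. The sketch below mimics that contract in plain Python; the function name and argument order are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def masked_grouped_gemm_nt(x: List[Matrix], weights: List[Matrix], masked_m: List[int]) -> List[Matrix]:
    """x[g] is a fixed-size max_m x K buffer; only its first masked_m[g] rows hold real tokens.

    weights[g] is expert g's N x K matrix (NT format). Rows beyond masked_m[g] are
    not computed and are left as zeros in this sketch.
    """
    outputs = []
    for xg, wg, valid in zip(x, weights, masked_m):
        out = [[0.0] * len(wg) for _ in xg]
        for i in range(valid):  # skip the padded tail of the buffer
            out[i] = [sum(p * q for p, q in zip(xg[i], w_row)) for w_row in wg]
        outputs.append(out)
    return outputs


# Two experts, buffers of max_m = 3 rows each, K = 2, N = 1.
x = [[[1.0, 1.0], [2.0, 2.0], [9.0, 9.0]],
     [[1.0, 0.0], [5.0, 5.0], [5.0, 5.0]]]
weights = [[[1.0, 2.0]], [[3.0, 4.0]]]
print(masked_grouped_gemm_nt(x, weights, masked_m=[2, 1]))
# [[[3.0], [6.0], [0.0]], [[3.0], [0.0], [0.0]]]
```

Since the buffer shapes never change, a captured CUDA graph can be replayed as-is while the per-expert counts live in device memory.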
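TMA (Hopper's Tensor Memory Accelerator) requires global-memory strides to be multiples of 16 bytes, which is why a helper that reports an aligned size is useful. The sketch below shows the rounding arithmetic under that 16-byte assumption; `align_up` and `tma_aligned_size` are hypothetical helpers, not DeepGEMM's API.

```python
def align_up(x: int, alignment: int) -> int:
    """Round x up to the next multiple of `alignment`."""
    return (x + alignment - 1) // alignment * alignment


def tma_aligned_size(x: int, element_size: int, alignment_bytes: int = 16) -> int:
    """Smallest count >= x whose size in bytes is a multiple of `alignment_bytes`.

    Hypothetical helper: the 16-byte default is an assumption, not DeepGEMM's API.
    """
    if alignment_bytes % element_size != 0:
        raise ValueError("element_size must divide alignment_bytes")
    return align_up(x, alignment_bytes // element_size)


print(tma_aligned_size(1000, 4))  # 1000 (FP32: 4 elements per 16 bytes, already aligned)
print(tma_aligned_size(1001, 4))  # 1004
print(tma_aligned_size(1001, 1))  # 1008 (FP8: 16 elements per 16 bytes)
```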

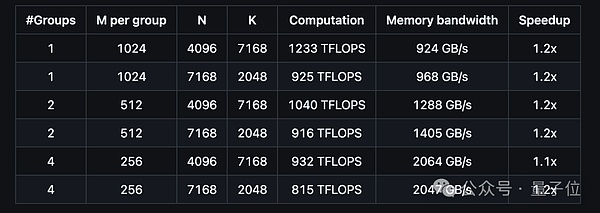

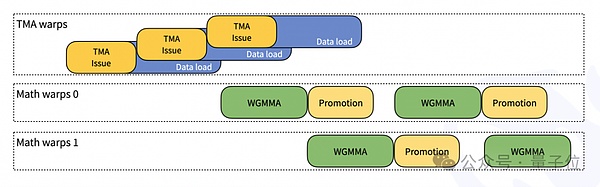

JIT design: All kernels are compiled at runtime, with no compilation needed at install time; the optimal block size and number of pipeline stages can be selected dynamically.

Fine-grained scaling: FP8 precision issues are addressed with two-level accumulation on the CUDA cores; non-power-of-2 block sizes are supported to improve SM utilization.

FFMA SASS interleaving: Performance is improved by modifying the yield and reuse bits of FFMA instructions in the compiled SASS.

Interested readers can find the details in the deepseek-ai/DeepGEMM repository on GitHub.
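Why non-power-of-2 block sizes help can be seen with simple tile arithmetic. The sketch assumes a simplified model in which each SM processes one output tile per wave, and uses the 132 SMs of an H800 SXM; the shape M=256, N=7168 and the helper names are our own illustrative choices.

```python
NUM_SMS = 132  # SMs on an H800 SXM GPU; an assumption for any other part


def ceil_div(a: int, b: int) -> int:
    return -(-a // b)


def sm_utilization(m: int, n: int, block_m: int, block_n: int, num_sms: int = NUM_SMS):
    """Return (tiles, fraction of SM slots busy), assuming one output tile per SM per wave."""
    tiles = ceil_div(m, block_m) * ceil_div(n, block_n)
    waves = ceil_div(tiles, num_sms)
    return tiles, tiles / (waves * num_sms)


for block_n in (128, 112):
    tiles, util = sm_utilization(256, 7168, block_m=128, block_n=block_n)
    print(f"BLOCK_N={block_n}: {tiles} tiles, {util:.1%} of SMs busy")
# BLOCK_N=128: 112 tiles, 84.8% of SMs busy
# BLOCK_N=112: 128 tiles, 97.0% of SMs busy
```

In this model, shrinking BLOCK_N from 128 to 112 produces more tiles than there are idle SMs to waste, so nearly the whole GPU stays busy in a single wave.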

One More Thing

As for Nvidia's stock these days... well... it has been falling again.

However, Nvidia's fourth-quarter earnings report for fiscal year 2025 is due in the early morning of the 27th, Beijing time, so stay tuned~

Kikyo
